Racial bias and #IWantToSeeNyome on Instagram

In July 2020, model and influencer Nyome Nicholas-Williams (@curvynyome) accused Instagram of racial bias after the platform removed her picture, sparking the #IWantToSeeNyome campaign that led Instagram to change its policy. This article discusses how Instagram, by exercising its power to enforce norms, excludes fat black women.

Allegations of racial bias on Instagram following blocked images

Nyome Nicholas-Williams spoke out against Instagram and its racially biased community guidelines and policies. She did so in the wake of the Black Lives Matter protests that followed George Floyd’s death, and a month after Head of Instagram Adam Mosseri publicly committed the company to revising its policies, tools, and processes that impact Black people.

On the 29th of July 2020, Nyome Nicholas-Williams, a model and influencer advocating for body positivity and mental health, posted an image on Instagram celebrating herself, taken during a shoot with experienced photographer Alex Cameron (@alex_cameron). Instagram promptly removed it for allegedly "going against community guidelines on nudity or sexual activity". The picture showed Nyome sitting on a stool with her hands covering her bare upper body.

Screenshot of a post removed by Instagram, made by a user in an attempt to support the #IWantToSeeNyome campaign.

In the days that followed, Nyome, Alex, and activist Gina Martin (who successfully campaigned to make upskirting illegal in the UK) brought attention to the case with the #IWantToSeeNyome campaign, an article in Harper's Bazaar, and a petition on change.org. The campaign and news coverage led to a co-signed open letter to Head of Instagram Adam Mosseri on the 10th of September, demanding a change to the adult nudity policy that would distinguish holding or covering breasts from squeezing them. Nicholas-Williams also asked Instagram for dialogue and transparency to fight racial bias on the platform. On the 25th of October 2020, Instagram announced a change in its policy, explicitly forbidding images that show the “squeezing of female breasts”.

This article discusses how Instagram’s power to ban Nyome’s image creates a discourse of racial and body-type exclusion, eliciting a counter-discourse that defies Instagram’s custom of hiding certain bodies while accepting others, rather than promoting equality and inclusivity. In line with Cameron (2001), this discourse analysis aims to make explicit what is typically taken for granted and to articulate what systemically formed power relations in society at large imply for people’s lives. It does so by analysing the conversation that took place on Instagram after the ban: first focusing on the perspectives of Nyome, Alex, and Gina to understand what the ban implied for them, then on how the conversation was picked up online and beyond, and finally on how it changed Instagram’s policy on adult nudity. The analysis takes a multimodal discourse approach, examining both visual and textual expressions to reveal their semiotic meaning.

For the analysis, a series of Instagram posts and responses published between 30 July and 25 October 2020 was selected. Following Blommaert and Verschueren (1998), the discourse data are approached from a materialist perspective to understand how groups and power relations are elaborated in (digital) social practices and contexts. The selected data are pivotal to understanding the ban’s deeper implications, which point to an online community that mirrors society’s hegemonic ideology by excluding a person based on her skin colour and body type.

Instagram, Nyome, and the creation of deviance

Nyome’s photograph was removed by Instagram for allegedly breaching the rules set out in its Community Guidelines. According to Becker (2008), rules are part of all social groups in society. These groups define what is considered appropriate behaviour for their members, and the members have the right to enforce their rules, punishing those who do not comply with the pre-established norms. By signing up for Instagram, one agrees to its norms and to what the platform considers right and wrong behaviour.

Nyome Nicholas-Williams’ post on July 30, 2020, reacting to her photographs being reported and banned.

Instagram’s decision to remove Nyome’s image implies that her photograph somehow did not comply with the platform’s social norms. Whether through people reporting the image as inappropriate or through the platform deciding so itself (through an algorithm), the removal creates deviance. The act itself does not define deviance; deviance is the result of others applying rules and sanctions to the “offender” (Becker, 2008). That is, Nyome posting an image of her bare upper body does not create deviance. Rather, it is the classification of her image as offensive sexual content, a breach of the norms that could lead to the removal of her account, that creates the deviance and labels her an outsider.

It is essential to understand that people labelled as outsiders may think differently from the group that created the rules, and consequently may not consider the rule-makers and ‘judgers’ competent to judge them (Becker, 2008). This is the case with Nicholas-Williams, who, after the removal of her image, took a stance: “Let’s shift the narrative that the media and fashion has upheld for too long that depicts our bodies as somehow being wrong when that couldn’t be further from the truth!” In the post, Nyome sits with her head up, staring straight into the camera. Together with her words, the image signals that she is ready to face the challenges of claiming her space. Her description of herself as a “strong and proud black woman” further reinforces her resolve to stand against being judged an outsider.

Nyome’s discourse stresses that there is a societal racial bias against plus-sized bodies. She claims that the media and fashion depict fat black bodies as wrong. Society imposes on her a clear us-versus-them group construction. This construction is part of institutional racism: the acceptance of treating people differently based on their ‘race’. Institutional racism can be found in many segments of society. In the fashion industry, change has already been called for by leading organisations such as the Council of Fashion Designers of America (CFDA) and PVH, owner of Calvin Klein and Tommy Hilfiger, which are jointly tackling the lack of diversity and unconscious racial bias in order to break down the division between in-groups and outsider groups.

Nyome’s co-campaigners and their perspectives on racial bias

On 30 July 2020, Alex Cameron shared Nyome’s photograph along with her own take on it and on Instagram’s removal of the image. Her discourse as a professional photographer is especially telling: professional vision, as a set of discursive practices (coding, highlighting, and producing material representations), can create discourse and shape how events are perceived from a position of authority (Goodwin, 1994).

Alex Cameron’s Instagram post on July 30, 2020, sharing Nyome’s photograph and noting that Instagram had removed it from Nyome’s feed.

Cameron wrote: “I’ve never captured a photo where you can feel the energy through the photo… until now.” and “This image is real and bold and beautiful and art and f*ck anyone who sees differently.” She expresses her satisfaction with the result, coding the work as a unique artwork and the most energetic photograph she has produced, since she had never before captured energy through a photograph. The photograph itself, showing Nyome seated and holding her breasts, head up, eyes shut, under soft light, is the material representation that complements Cameron’s words.

On August 7, Alex Cameron posted two photographs she had taken: the first of Nyome and the second a self-portrait, “to illustrate censorship”. She asks why her own image, which is much more revealing than Nyome’s, is allowed whilst Nyome’s is removed from the platform. She underscores her point by placing both images in a single carousel post, so that the public can compare Nyome’s photograph, coded as inappropriate, with her own.

Alex Cameron’s carousel post on August 7, 2020, showing Nyome’s banned photograph alongside her own (non-banned) self-portrait for comparison.

"I cannot imagine Instagram deciding that the way I look is against community guidelines. I can't imagine how it feels to be repeatedly censored and targeted just for being her." This statement reinforces Cameron’s perception that Instagram removed Nyome’s photographs because of her body. Cameron finds it problematic that Instagram accepted her self-portrait, which shows more of her body, as not conflicting with its Community Guidelines. In her post on August 7, 2020, she uses this previously posted self-portrait, which Instagram never claimed violated its rules, as material evidence for her accusation of racial bias on Instagram.

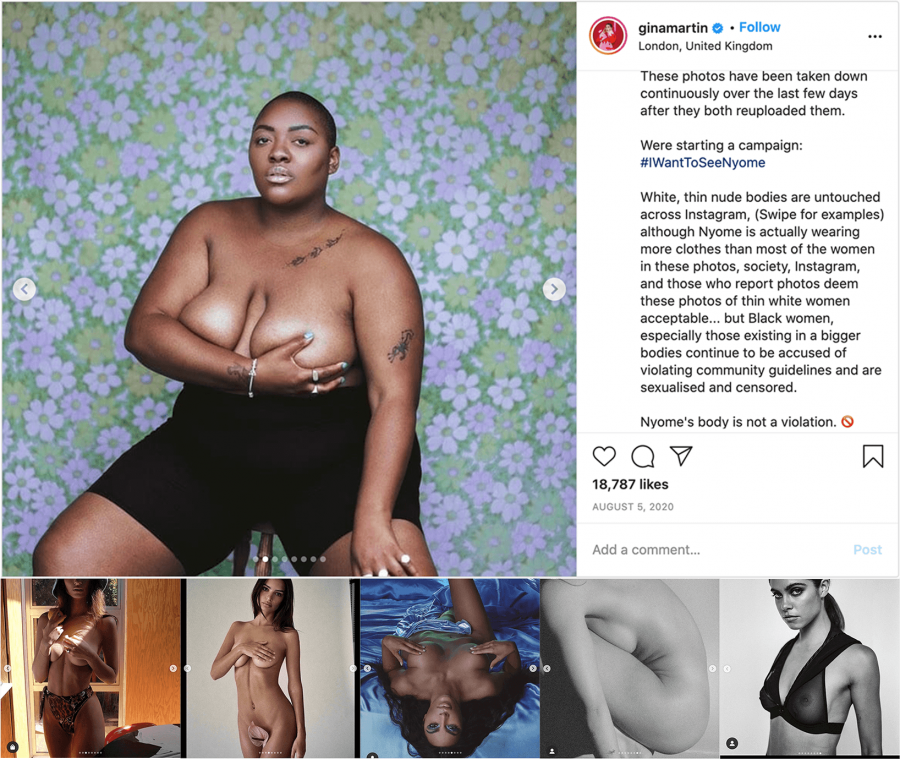

On August 5, activist Gina Martin posted the following on her Instagram account: “These photos have been taken down continuously over the last few days after they both reuploaded them. We're starting a campaign: #IWantToSeeNyome”. Alongside the text, the carousel contains a photograph of Nyome from the same shoot and a compilation of photographs of other women previously published on Instagram. The images show women holding their breasts, hiding them through their pose, or covering them with transparent lingerie.

Gina Martin’s post and carousel of images used to launch the #IWantToSeeNyome campaign.

Martin’s images and text are her complementary material representation of the claim that “White, thin nude bodies are untouched by Instagram ... although Nyome is actually wearing more clothes than most of the women in these photos, society, Instagram and those who report photos deem these photos of thin white women acceptable… but Black women, especially those existing in bigger bodies continue to be accused of violating community guidelines and are sexualised and censored. Nyome’s body is not a violation.”

Like Cameron, Martin (@ginamartin) frames Instagram’s action as racially biased, showing how people who fit society’s institutionalised beauty ideals are accepted by Instagram even when they are also holding their breasts or showing more of their bodies. By allowing images such as Alex’s nude self-portrait or those of skinny celebrities, Instagram inevitably constructs a discourse in which hegemonic beauty defines what is normal and allowed on the platform. What deviates from that ideal, such as Nyome’s image, is consequently treated as a violation of the norm. The material representations provided by Martin and Cameron are strong evidence of potential racial bias. What happened mirrors society’s treatment of black fat bodies: they are seen as controversial, they are not hegemonic, and society is not accustomed to seeing them on fashion magazine covers or in advertisements for luxury brands.

The removal of the image online reflects what already happens in society offline. People who belong to the hegemonic group are the norm: they are represented everywhere, and their images are accepted. People who do not fit the norm have their images removed and face the threat of being removed from the platform. Those who don't 'fit in' are excluded and underrepresented. Nyome’s co-campaigners therefore see and call out the deviance created when Instagram codes Nyome’s photographs as violating its Community Guidelines and consequently removes them from the platform. Gina and Alex campaign for Instagram to re-evaluate its (algorithmic) norms and policy with regard to racial bias.

Reinforcement of racial bias through algorithms

Instagram employs thousands of content moderators to review the huge volume of visual and textual content on its platform. They are supported by Instagram users, who can report content as spam or as inappropriate for reasons such as nudity, hate, violence, intimidation, fraud, or intellectual property violations. Moderators then decide whether the content violates the community standards.

Algorithms automatically pre-screen content, both reported and unreported, flagging potential violations themselves to reduce the workload and speed up the process for content moderators. It is unclear whether Instagram uses algorithms and artificial intelligence that independently perform decision-making tasks such as deleting, ignoring, or escalating content, but automation certainly plays a major role in handling the scale of Instagram’s moderation process.

Artificial intelligence, and in particular algorithms that automatically look for patterns in data such as text and images, relies on data and human input about what violates community standards (Binns, Veale, Van Kleek, & Shadbolt, 2017). In other words, for artificial intelligence to recognise and flag what complies with or violates the community standards, it needs to be given labelled examples.

As such, algorithms embody the judgement traits of the human moderators whose decisions feed the artificial intelligence. Algorithms pick up on and reproduce flawed human decisions, including the racial bias (Davidson, Bhattacharya, & Weber, 2019; Silva & Kenney, 2019) of users who report content and of moderators (Gillespie, 2020). Algorithms are (racially) biased because of the data humans give them, and this has an amplifying effect in a digital society in which norms are formed, reproduced, and enforced online.
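The mechanism described above, in which a model learns from human labels and inherits their bias (the "like trainer, like bot" problem named by Binns et al., 2017), can be illustrated with a deliberately simplified Python sketch. Everything in it is hypothetical: the labels are invented, the `body_group` field is an assumption (real image classifiers learn from visual features that may correlate with skin colour and body shape rather than from explicit group labels), and the frequency-based flagger stands in for far more complex models. It is not a description of Instagram's system.

```python
from collections import defaultdict

# Hypothetical, invented human moderation decisions: (pose, body_group, removed).
# Both groups show the same pose; only the human removal rate differs.
TRAINING_LABELS = [
    ("holding", "slim_white", False),
    ("holding", "slim_white", False),
    ("holding", "slim_white", False),
    ("holding", "slim_white", True),
    ("holding", "fat_black", True),
    ("holding", "fat_black", True),
    ("holding", "fat_black", True),
    ("holding", "fat_black", False),
]


def train(labels):
    """Learn the share of human removals for each (pose, body_group) combination."""
    counts = defaultdict(lambda: [0, 0])  # key -> [removed, total]
    for pose, group, removed in labels:
        counts[(pose, group)][0] += int(removed)
        counts[(pose, group)][1] += 1
    return {key: removed / total for key, (removed, total) in counts.items()}


def flag(model, pose, group, threshold=0.5):
    """Flag a new image if similar images were removed by humans more often than the threshold."""
    return model.get((pose, group), 0.0) > threshold


model = train(TRAINING_LABELS)
print(flag(model, "holding", "slim_white"))  # False (learned removal rate 0.25)
print(flag(model, "holding", "fat_black"))   # True  (learned removal rate 0.75)
```

The flagger contains no explicit rule about race or body type; it simply reproduces the 25% versus 75% difference in the human decisions it was given, which is the amplifying effect the paragraph describes.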

In Nyome’s words: “Algorithms on Instagram are bias[ed] against women, more so Black women and minorities, especially fat Black women. When a body is not understood, I think the algorithm goes against what it’s taught as the norm, which in the media is white, slim women as the ideal.”

Public recognition of racial bias on Instagram

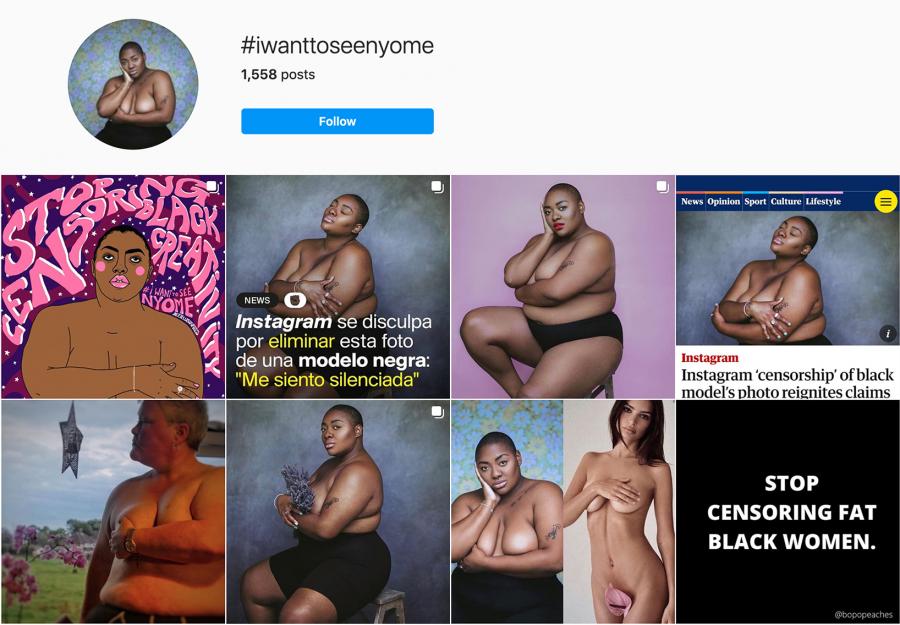

The #IWantToSeeNyome campaign, launched on Instagram by Nyome, Alex, and Gina on the 5th of August 2020, has resulted in over 1,500 reactions to date. Support for the campaign initially took the form of people reposting Nyome’s photographs, some of which were banned for infringing Instagram’s Community Guidelines.

Selection of user generated content on Instagram’s #IWantToSeeNyome grid.

In the weeks following the first post, people expressed support in various forms: statements; images of themselves (white and black, slim and fat women) naked and holding their breasts in similar poses; reposts of news coverage such as the Guardian’s; artistic expressions; and posts pointing out that Instagram bans Nyome while taking no action against slim white women such as Emily Ratajkowski (@emrata) posing naked, which reinforced the racial bias claim.

Besides campaigning via Instagram, Nyome, Alex, and Gina launched an online petition on change.org. With the petition to “stop censoring fat black women”, they collected over 15,000 signatures in the first month. The three women also took the campaign to mainstream media, publishing in Harper’s Bazaar themselves (Nicholas-Williams, 2020) to raise awareness, and subsequently reinforcing their discourse in interviews with, for example, the Guardian (Iqbal, 2020), the Independent, and Refinery29. Part of their campaign was the open letter to Head of Instagram Adam Mosseri, which was publicly endorsed by sixteen co-signers.

Digital activism for inclusiveness

Although Instagram instigated the controversy by removing the image, it was also where the #IWantToSeeNyome campaign took off. It was the place where Nyome was labelled an outsider, where the discussion started by asking why Instagram accepted some bodies but not others, and where a group formed to advocate for the inclusion of Nyome’s photograph and for inclusivity more broadly. This group shared images and generated content, such as graphic modifications, edited images, and other pictures that Instagram allowed, to illustrate the racial bias claim.

In this way, the campaign on Instagram represented something more profound than a single photograph being taken down. It was a demand for equal treatment for people who feel like outsiders on Instagram because they are not part of the hegemonic group. The campaign’s supporters want the equal right to post a photograph that will not be taken down simply because it is not seen as normal by Instagram's standards (read: skinny and white).

The campaign on Instagram was important in propelling the discourse of inclusivity. With only some 1,500 posts on a platform with over 800 million users, however, it was most likely not solely responsible for Instagram’s policy change. It was the organised campaign of Nyome, Alex, and Gina across digital and mainstream media, together with algorithmic activism, that paved the way.

Instagram’s manoeuvre in tackling racial bias allegations

On the 25th of October 2020, Nyome shared and celebrated the news that Instagram had changed its policy following the #IWantToSeeNyome campaign. In her words to the Thomson Reuters Foundation, “overall I’m very glad about the policy change and what this could mean for Black plus-size bodies”.

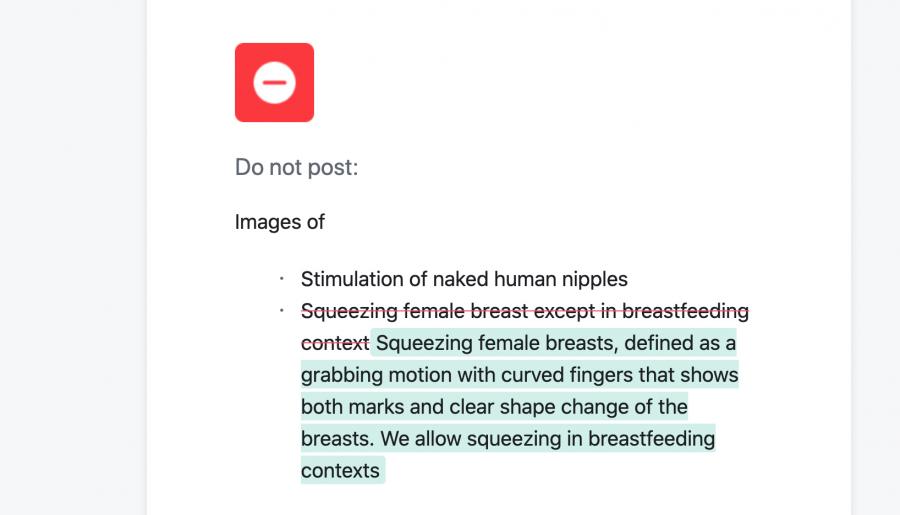

The policy change applies to both Instagram and its parent company Facebook. It explicitly forbids images that show the “squeezing of female breasts, defined as a grabbing motion with curved fingers that shows both marks and clear shape change of the breasts. We allow squeezing in breastfeeding contexts”, replacing the former policy, which forbade “squeezing female breasts except in a breastfeeding context”.

Snippet from Instagram's policy change in response to the #IWantToSeeNyome campaign against racial bias on Instagram

The policy change neither acknowledges nor introduces countermeasures specific to racial bias or body-type exclusion. Rather, it specifies what counts as breast squeezing, thereby allowing Nyome’s images on the platform because they do not violate the revised policy: the photos show her holding, not squeezing, her breasts.
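One way to see why the revised wording admits Nyome’s images is to write the quoted definition out as a rule. The Python sketch below is a hypothetical reading, not Instagram’s enforcement logic: it assumes that a reviewer or classifier has already annotated each image with the elements the policy names (a grabbing motion, curved fingers, visible marks, and clear shape change), and it treats the definition as requiring all of them, with the stated breastfeeding exception. The `ImageAnnotation` type and both functions are illustrative inventions.

```python
from dataclasses import dataclass


@dataclass
class ImageAnnotation:
    """Hypothetical reviewer annotations for one image (not a real Instagram data model)."""
    grabbing_motion: bool
    curved_fingers: bool
    visible_marks: bool
    clear_shape_change: bool
    breastfeeding_context: bool = False


def is_squeezing(img: ImageAnnotation) -> bool:
    # Assumed reading of the October 2020 wording: "a grabbing motion with curved
    # fingers that shows both marks and clear shape change". Every element must be present.
    return (
        img.grabbing_motion
        and img.curved_fingers
        and img.visible_marks
        and img.clear_shape_change
    )


def violates_squeezing_rule(img: ImageAnnotation) -> bool:
    # "We allow squeezing in breastfeeding contexts."
    return is_squeezing(img) and not img.breastfeeding_context


# Hands holding or covering the breasts: fingers may be curved, but there is
# no grabbing motion, no marks, and no shape change.
holding = ImageAnnotation(grabbing_motion=False, curved_fingers=True,
                          visible_marks=False, clear_shape_change=False)
squeezing = ImageAnnotation(grabbing_motion=True, curved_fingers=True,
                            visible_marks=True, clear_shape_change=True)
breastfeeding = ImageAnnotation(grabbing_motion=True, curved_fingers=True,
                                visible_marks=True, clear_shape_change=True,
                                breastfeeding_context=True)

print(violates_squeezing_rule(holding))        # False
print(violates_squeezing_rule(squeezing))      # True
print(violates_squeezing_rule(breastfeeding))  # False
```

Nothing in this rule refers to skin colour or body type: under this reading it can say which poses are permitted, but not whether the rule is enforced evenly across bodies, which is the question the campaign raised.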

In a statement to the Independent, Kira Wong O’Connor, Instagram’s head of policy, said: “We know people feel more empowered to express themselves and create communities of support – like the body positivity community – if they feel that their bodies and images are accepted”. Instagram has not responded directly to the charges of racial bias. It does, however, link the policy change to Nyome’s work, as Wong O’Connor said: “We are grateful to Nyome for speaking openly and honestly about her experiences and hope this policy change will help more people to confidently express themselves. It may take some time to ensure we’re correctly enforcing these new updates but we’re committed to getting this right”. By doing so, Instagram shifts the discourse from racial bias to empowering self-expression in, for example, body positivity communities.

Even though Instagram stated that “Separate to this policy change, earlier this year we committed to broader equity work to help ensure we better support the Black community on our platform.”, referring to Adam Mosseri’s Instagram post in June 2020, it remains unclear whether the policy tackles (algorithmic) racial bias on Instagram.

References

Becker, H. S. (2008). Outsiders: Studies in the sociology of deviance. Simon and Schuster.

Binns, R., Veale, M., Van Kleek, M., & Shadbolt, N. (2017). Like trainer, like bot? Inheritance of bias in algorithmic content moderation. In International conference on social informatics (pp. 405-415). Springer.

Blommaert, J., & Verschueren, J. (1998). Debating Diversity: Analysing the discourse of tolerance. Routledge.

Cameron, D. (2001). Working with spoken discourse. Sage.

Davidson, T., Bhattacharya, D., & Weber, I. (2019). Racial bias in hate speech and abusive language detection datasets. Proceedings of the Third Workshop on Abusive Language Online. doi:10.18653/v1/w19-3504

Gillespie, T. (2020). Content moderation, AI, and the question of scale. Big Data & Society, 7(2).

Goodwin, C. (1994). Professional Vision. American Anthropologist, 96(3), 606-633.

Iqbal, N. (2020, August 09). Instagram 'censorship' of black model's photo reignites claims of race bias. Retrieved from www.theguardian.com

Nicholas-Williams, N. (2020, August 07). Dear white people, my body is not for you to take and profit from. Retrieved from www.harpersbazaar.com

Silva, S., & Kenney, M. (2019). Algorithms, platforms, and ethnic bias. Communications of the ACM, 62(11), 37-39.