The responsibility gap: Using robotic assistance to make colonoscopy kinder

Nowadays, technology and medicine go hand in hand. New technologies like robotic assistance have the potential to significantly improve medical care and provide better, if not brand new, treatments for patients. However, technology is not always beneficial. Although technology may provide a better solution, it can also come with unpredictability and a lack of control.

In this paper I examine the newest development in colonoscopy: using robotic assistance to carry out the procedure. In particular, I ask who bears responsibility for any mistakes made during this new intervention.

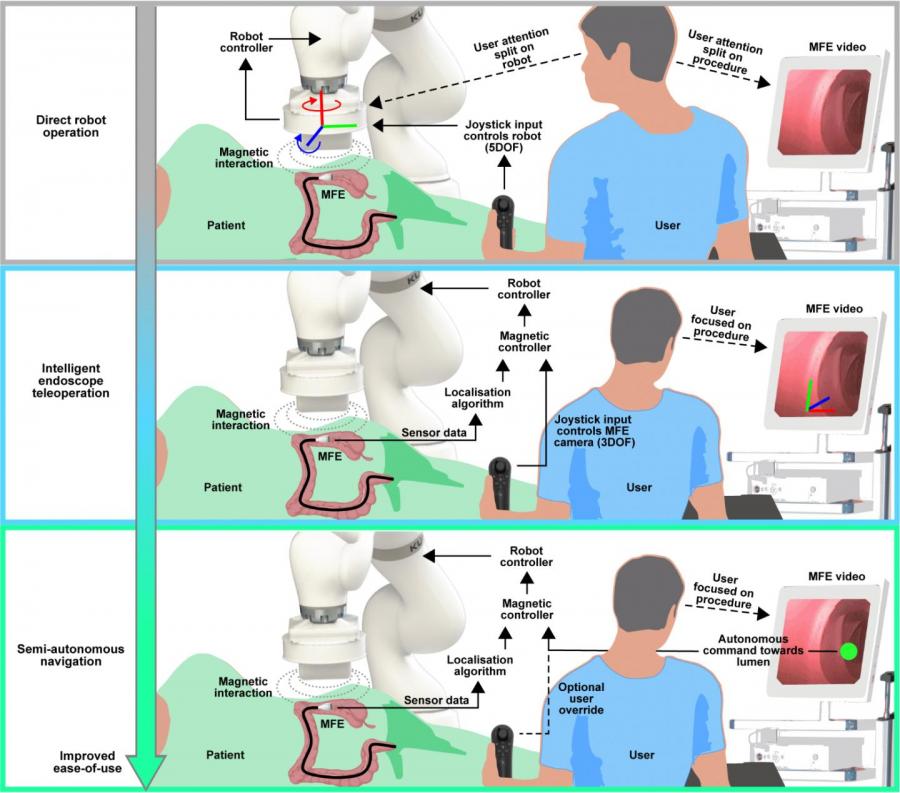

Figure 1: The AI device for colonoscopy

Robotic assistance

Scientists from the University of Leeds in the UK have created a “semi-autonomous robotic system” to perform colonoscopies. They claim their system is easier for doctors and nurses to operate and less painful for patients (Mack, 2020). The robotic system involves a “smaller, capsule-shaped device on the end of a cord” that is guided through the patient by a robotic arm equipped with magnets, which doctors can control with a joystick (Mack, 2020; see Figure 1). “Semi-autonomous” means that the machine itself is responsible for maneuvering the capsule-shaped device inside the patient. Even though doctors can intervene with the joystick, the robotic arm can operate on its own.

This groundbreaking improvement in colonoscopy might sound ideal. However, the possibility of error remains, since joysticks, like any technical device, can malfunction. An unresponsive joystick at a crucial moment could cause serious physical harm or even threaten a patient's life. This raises the question: in the case of harm, is the creator or the operator responsible?

Responsibility gap

If the responsibility lies with the medical staff, then any mistakes made while using the robotic arm are theirs. This presupposes that they know how the device works. But since they did not program the device's AI, they cannot be expected to understand or foresee all of its behavior, so this argument does not hold.

It could be argued that the creators are responsible, since they built the device. However, the scientists have transferred control of the device to the medical staff “by specifying the precise set of actions and reactions the device is expected to undergo during normal operation”, which gives the staff the ability to handle the device in a predictable manner (Matthias, 2004). The scientists have therefore reduced their control over the finished product, which means that they “bear less or no responsibility” because they are unable to check for errors (Matthias, 2004). It seems that neither side is responsible for errors; this is known as the “responsibility gap” problem.

Robotic responsibility

There is, however, a third option, in which the AI itself bears the responsibility. This scenario is often neglected: how can a machine be held accountable for its actions? Yet robots can be moral agents if they meet three criteria: the robot has sufficient autonomy, its behavior is intentional, and it is in a position of responsibility (Sullins, 2006). In this case, firstly, the robotic arm does not have enough autonomy, since it remains under the doctors' control via the joystick. Secondly, “intentionality” refers to whether a complex interaction between the robot's programming and the environment is present, causing the machine's actions to appear calculated (Sullins, 2006). This is not the case here, since following the commands of a joystick is not a complex interaction. Thirdly, to be in a position of responsibility, the robot must be morally responsible to other agents, which is hardly the case, as the robotic system is designed to follow a step-by-step procedure rather than to make decisions concerning other people (Sullins, 2006). Since the robotic arm does not meet these criteria, it is not a moral agent and hence not responsible.

Conclusion

Looking at all the available options, it seems that the problem of the responsibility gap is far more complex than it first appears: in situations like the one presented in this paper, ascribing responsibility to anyone seems almost impossible. However, this gap can be addressed by recognizing

“a process in which the designer of a machine increasingly loses control over it, and gradually transfers this control to the machine itself. In a steady progression the programmer role changes from coder to creator of software organisms” (Matthias, 2004).

References

Mack, E. (2020). This Robot Could Perform Your Colonoscopy In The Near Future. Forbes.

Matthias, A. (2004). The responsibility gap: Ascribing responsibility for the actions of learning automata. Ethics and Information Technology, 6(3), 175–183.

Sullins, J. (2006). When Is a Robot a Moral Agent? International Review of Information Ethics, 6.