Should big data determine who gets pulled over?

Policing, big data and stochastic governance

Predictive policing on the basis of big data and algorithms is generally claimed to be a technical matter superior to more traditional policing, with all its human flaws. Paul Mutsaers argues in this column for a cultural approach to predictive policing, which pays attention to the social and cultural shaping of police technologies.

Predictive Policing

Big data exert a strong gravitational pull on police forces around the world. As police legitimacy grows dependent on effectiveness and efficiency, often at the expense of fairness and justice considerations, predictive policing is being rolled out on an unprecedented scale. The underlying idea is that officers hardly ever arrive at a crime scene in time to catch a crook and therefore have to engage in the business of risk-profiling to predict crime and take pre-emptive measures. Risk-profiling is something that police officers have always done, walking their beat and using their discretionary authority, but what distinguishes 21st-century profiling from older forms is the use of big data, algorithms and data science. Probabilistic methods are replacing police intuition, algorithms are replacing brains, and cameras are replacing the human eye. We are entering the age of “stochastic governance”: ‘the governance of populations and territory by reference to the statistical representations of metadata’ (Sanders and Sheptycki 2017).

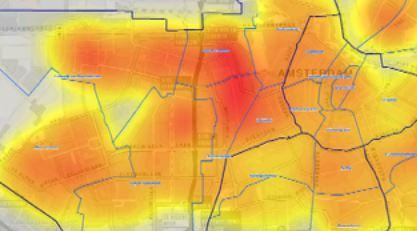

‘Good,’ one may reply, perhaps in reference to the Rodney King beating in LA, the death of Michael Brown in Ferguson, the police chokehold of Mitch Henriquez in The Hague, or other examples that render police discretion one of the major problems of our time. If new technologies can help to make the police more rational: ‘hallelujah!’ And this is exactly how advocates of predictive policing respond: ‘the software performs calculations based on the collective memory of the police force, which is far more than any individual is capable of,’ says Arnout de Vries at TNO about the Crime Anticipation System (CAS) developed and used by the Amsterdam police. ‘Our day-to-day operations tool identifies where and when crime is most likely to occur so that you can effectively allocate your resources and prevent crime,’ advertises PredPol (The Predictive Policing Company) on its website.

Hot spots in Amsterdam

Anticipating criticism, advocates of predictive policing are quick to tell their audiences that their tools are not perfect…at least not yet. De Vries, for instance, says that ‘the system is also susceptible to racial profiling: mechanisms that result in more frequent arrests of individuals with an ethnic background’ (it is telling that this implies that native Dutch citizens do not have an ethnic background). His remedy is a technical one: ‘you have to be aware of the nature of the data being fed into the system, and you have to know what types of data are lacking.’ This is how technicians tend to reason; they are not concerned with problems outside the delimited sphere assigned to them, which prevents them from taking up a critical role in society.

Risk and Culture

Others before me have pointed to the social costs and effects of profiling (e.g. Harcourt 2007) and I have said a thing or two about these matters at Diggit Magazine. This time, however, I wish to focus not on the social effects of profiling but on its social shaping. The argument of technicians is that risks can be optimally managed by perfecting technical knowledge, but I believe that this is a poor way of thinking about risk, and one that has unfortunately become mainstream in the risk literature. I tend to agree with Mary Douglas’ work on Risk and Culture (1987, 1992; Douglas and Wildavsky 1982), which we can use to think about risk profiling, and predictive policing more generally, as a cultural activity in which officers draw on the social environment to make sense of the world and have it operate on the basis of selected norms concerning classifications of danger, deviancy and delinquency. If we define culture as a pattern of meaningful organization that allows people to make sense of their world and have it operate as it should on the basis of social norms, we understand that risk-profiling is very much a cultural process.

Douglas argues that risks are always culturally conditioned ideas (no matter how technical the calculations involved), which are selected for public concern according to the strength and direction of social criticism. As such, risks are the outcome of cultural classifications that demarcate lines of division, which in turn help to legitimate a particular social order that rejects inappropriate elements in society. So, by focusing on the cultural normativity of risk profiling, we can develop new ways to explain how it changes over time, not only due to technological innovations but also because of changing social influences that bias the search for culpable agents accordingly.

I hope, therefore, that a time will come in which the monopoly of the technician is ended by those who adopt cultural approaches to risk. Only then can we begin to understand how risk profiling and other forms of “actuarial justice” are changing our legal cultures. PredPol and other key players keep saying that predictive policing is not Minority Report, but the fact that criminal law is increasingly driven by the technical ambition to predict crime in the most parsimonious way is in itself a symptom of cultural change, a new model of human organization, that must be explained.