A truthful conversation with ChatGPT

"Hi ChatGPT, how are you today?"

"Hello! I'm an AI language model, so I don't have feelings, but I'm here and ready to assist you with any questions or information you need. How can I help you today?" (OpenAI, 2023).

"I would like to have a truthful conversation with you."

"Of course! I'm here to engage in a truthful conversation with you. Feel free to ask any questions or discuss any topic you'd like, and I'll do my best to provide accurate and helpful responses based on the information available to me. What would you like to talk about?" (OpenAI, 2023).

"Today I would like to talk about truth, and more specifically about the truth presented by AI systems like ChatGPT. Is that okay?"

"Certainly! I'd be happy to discuss the topic of truth, particularly in relation to AI systems like ChatGPT. Please go ahead and share your thoughts or questions, and we can delve into the subject together" (OpenAI, 2023).

OpenAI introducing ChatGPT

In an era of rapid technological advancement, Artificial Intelligence (AI) has emerged as a noteworthy force and already assists us in many (social) areas of our lives. AI has great potential: it is transforming industries, augmenting human capabilities, and reshaping the way we interact with information. However, as AI becomes an integral part of our decision-making and of how information is disseminated, questions arise about its impact on truth and on the presentation of reality. ChatGPT offers an answer to almost any question, but the information it provides is not always accurate, even though it is presented as if it were the “objective” truth. Should we take ChatGPT at its word, or is it a potential source of misinformation?

In this paper, I analyze several of ChatGPT's responses to my questions to find out where the AI system stands in the debate between an “objective”, universal type of truth and a post-truth narrative.

What is ChatGPT?

"I'm an AI language model called ChatGPT, developed by OpenAI. I've been trained on a wide range of text data to understand and generate human-like responses. You can think of me as a computer program designed to have conversations and provide information on various topics" (OpenAI, 2023).

ChatGPT, like every AI model, thus learns from data. The data sets, which are collections of information gathered and organized by humans, are too big for the human brain to process and make sense of. This ‘big data’, as we call it, is tamed and understood using computer and mathematical models. With the help of algorithms implemented by human beings, the AI model can solve problems and find patterns. Algorithms are, in essence, sets of rules that guide the AI model’s behavior. Steve Lohr, a journalist writing for The New York Times, compares AI models to metaphors in literature and calls them "explanatory simplifications" (2012). They can be useful to "spot a correlation and draw a statistical inference" and thereby enhance our understanding. When done right, AI systems can simplify a very hard task and make it tractable.
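To make Lohr's phrase "spot a correlation" a little more concrete, here is a minimal sketch in Python (the language is chosen only for illustration) of one of the simplest pattern-finding tools: the Pearson correlation coefficient. The function name `pearson` and the daylight and sales figures are invented for this example and do not come from any real data set.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences (-1 to 1)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items each")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance term: do the two series move above and below their means together?
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Spread of each series around its own mean.
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical toy data: hours of daylight vs. ice cream sales on six days.
daylight = [8.0, 9.5, 11.0, 12.5, 14.0, 15.5]
sales = [120, 135, 160, 170, 195, 210]

r = pearson(daylight, sales)
print(f"r = {r:.3f}")
```

A coefficient close to 1 signals a strong linear association in these numbers, but it says nothing about why the association exists. That is the sense in which such a model simplifies: it summarizes a pattern without explaining it.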

Some AI systems do not even need human intervention to become better at interpreting data; they adjust their own rules. We call this process “machine learning”. "The rules by which they act are not fixed during the production process but can be changed during the operation of the machine, by the machine itself" (Matthias, 2004). The more data one puts into the system, the more the machine learns (Lohr, 2012). ChatGPT functions in a similar way. It is a language model built on a neural network architecture, capable of carrying out tasks that would otherwise require immense resources.
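The idea of a language model that learns patterns from data can be illustrated with a deliberately crude sketch in Python. It assumes a toy "bigram" model that merely counts which word follows which in its training text. ChatGPT is a far larger neural network, so the class `BigramModel` and everything in it are hypothetical. What the toy shares with the real thing is the principle: its behavior comes from statistical patterns in its data, and adding data changes those patterns.

```python
from __future__ import annotations

import random
from collections import Counter, defaultdict


class BigramModel:
    """Toy next-word predictor that counts which word follows which.

    Hypothetical illustration only: ChatGPT is a large neural network,
    not a table of word-pair counts.
    """

    def __init__(self) -> None:
        # Maps each word to a Counter of the words observed right after it.
        self.counts: defaultdict[str, Counter[str]] = defaultdict(Counter)

    def train(self, text: str) -> None:
        # Each new text updates the counts, so the model's "rules" change with the data.
        words = text.lower().split()
        for current, following in zip(words, words[1:]):
            self.counts[current][following] += 1

    def most_likely_next(self, word: str) -> str | None:
        # Most frequent follower (ties go to the one seen first), or None if unseen.
        followers = self.counts.get(word.lower())
        if not followers:
            return None
        return followers.most_common(1)[0][0]

    def generate(self, start: str, length: int, rng: random.Random) -> str:
        # Samples each next word in proportion to how often it followed the previous one.
        words = [start.lower()]
        for _ in range(length):
            followers = self.counts.get(words[-1])
            if not followers:
                break
            choices, weights = zip(*followers.items())
            words.append(rng.choices(choices, weights=weights)[0])
        return " ".join(words)


model = BigramModel()
model.train("the sky is blue . the sky is clear . the grass is green")
print(model.most_likely_next("sky"))  # -> is
print(model.most_likely_next("is"))   # -> blue, whatever came before "is"
print(model.generate("grass", 3, random.Random(0)))
```

Trained on three true sentences, the toy model's single most likely continuation of "grass is" is "blue": it only looks at the previous word, and among the equally frequent words after "is", "blue" was seen first. The result is fluent but false, a miniature version of the gap between plausible text and truth that this paper is concerned with.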

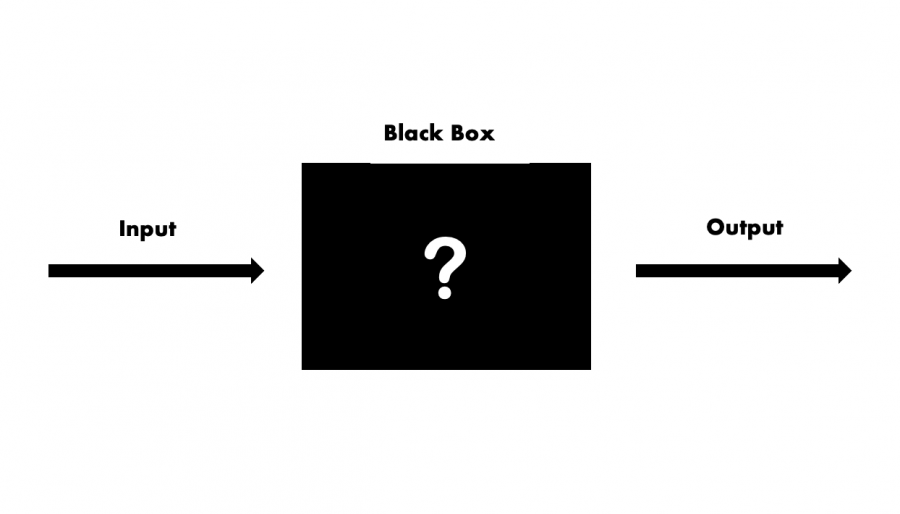

This development has led to a grand mystery as to how exactly the model operates; it is, as it were, a “black box”. We know what goes into the model, namely the data and the algorithms, but we have little insight into how AI systems make their decisions. They arrive at conclusions without explaining how they were reached. As ChatGPT told me, its focus "is on performance and accuracy rather than interpretability" (OpenAI, 2023).

The Black Box model

The notion of truth

As we enter the intricate realm of truth, a concept that has changed significantly in the modern era, it becomes apparent that the rise of artificial intelligence (AI) and its enigmatic decision-making process have played a role in shaping our perception of reality. Just as AI operates as a "black box", arriving at conclusions without revealing the underlying mechanisms, our understanding of truth has shifted in parallel towards a landscape where certainty and a shared epistemology are increasingly elusive.

ChatGPT serves as an example of this enigma. Built upon a neural network architecture and fed with massive amounts of data, it operates through complex algorithms that guide its responses. However, like many AI systems, ChatGPT's focus lies more on performance and accuracy than on explaining the rationale behind its decisions. This parallels the evolving nature of truth in a world where shared methodologies and agreed-upon propositions have given way to fragmented perspectives and subjective beliefs.

Let us now look more closely at the notion of truth itself. The 20th century witnessed a series of profound events that defined the modern era: the Spanish flu pandemic, World War I and World War II, the invention of nuclear weapons, remarkable achievements in space exploration, nationalism and decolonization, the complexities of the Cold War and subsequent conflicts, and a multitude of technological advancements that revolutionized society. After the world wars, the West adopted a post-ideological mindset "that supposedly superseded the toxic ideologies responsible for the cataclysm of World War II" (Waisbord, 2018, p. 1869).

Post-ideological thinking relied on scientific principles as the foundation for truth-seeking, drawing on Enlightenment ideals that saw science and reason as central to a modern, prosperous, and well-ordered society (Waisbord, 2018). Modernism, according to Habermas, revolves around the idea of unity: it strives for the whole, for a universal reason (ratio), and for the consensus that people in the public sphere aim towards (Lyotard, 1984). According to this modernist, post-war view, there should thus be something like a universal truth, which many thought could best be reached through scientific rationality.

It seems that we are entering a post-modern world, at least in the online environment, with scattered communities and different belief systems. As the post-modernist Lyotard argued: "modernity, in whatever age it appears, cannot exist without a shattering of belief and without discovery of the 'lack of reality' of reality, together with the invention of other realities" (Lyotard, 1984, p. 77). Truth has become somewhat fragmented (Waisbord, 2018). A shared epistemology or intersubjective agreement seems hard to find nowadays, especially online. The prevailing trend favors subjective beliefs and group ideologies over the scientific principles of shared methodologies and verifiable propositions.


While truth has always been a topic of discussion, we are now even questioning its relevance for our society. Post-truth and fake news have become familiar terms. In a post-truth paradigm, people believe what they want to believe, which is not necessarily based on “evidence” and is sometimes even an obvious lie (McIntyre, 2018). In this context, truth itself becomes less relevant. Post-truth can be characterized by the widespread dissemination of false or misleading information, the rejection of expert opinion and scientific consensus, and the amplification of beliefs based on personal perspectives or group ideologies. People get lost in echo chambers and filter bubbles, which makes them prone to developing extreme, radical beliefs; conspiracy theories are rising in popularity; and political figures like Trump tend to exploit free speech to spread lies (Alfano et al., 2018). It has become increasingly difficult to distinguish what is “real” from what is “fake” in the online environment. Misinformation has proven to be effective, and our confidence in our ability to immunize ourselves against its effects is unwarranted (Brown, 2018).

Online testimonies

Why do we so readily take what we see online to be the truth? According to Rini, "people believe fake news because they acquire it through social media sharing, which is a peculiar sort of testimony" (2017). Many epistemic practices revolve around giving and receiving testimony, since the amount of knowledge we need to rely on in daily life far exceeds what we can acquire personally (Gunn & Lynch, 2018). Testimony is a statement offered as true, with the speaker intending the hearer to believe it. The many norms and values that govern our everyday lives make us comfortable relying on other people's testimony, and we have learned that violating these norms brings negative social repercussions. People hold each other accountable: they are expected to feel embarrassed if their testimony turns out to be wrong, they feel entitled to blame others for giving false testimony, and when someone intentionally misleads you, the response is not just blame but outrage.

By virtue of wanting to be part of society, most people act in accordance with these rules and give testimony that is generally true. According to an anti-reductionist account, this is reason enough to believe what someone tells you (Gunn & Lynch, 2018): unless there is a specific reason to doubt what someone says, there is no reason to doubt it. According to a reductionist view, however, we can only rely on testimony when we know the source. We then need to trace the source and judge for ourselves whether it is reliable (Gunn & Lynch, 2018).

In our digital era, however, it seems that we can no longer rely on online testimony under either view. "Nevertheless, many of us treat social media sharing as if it were ordinary testimony" (Rini, 2017). We often do not know who is behind an online account, for instance because it is anonymous or fake and potentially run by a troll farm, so we cannot hold people accountable for what they say. Along the same lines, many online sources simply cannot be fact-checked. Many cases of misinformation contain complex statements that are difficult to verify (Brown, 2018). Proprietary algorithms make this even harder, as they are a so-called “black box”: we do not know how they derive their conclusions, which makes it impossible to hold anyone accountable for the actions of the AI system (Matthias, 2004). Social media sharing is thus a bent form of testimony, not necessarily to be trusted (Rini, 2017).

The interview

In order to figure out where ChatGPT stands in this debate on truth, I asked it some questions. The following is a transcript of this conversation.

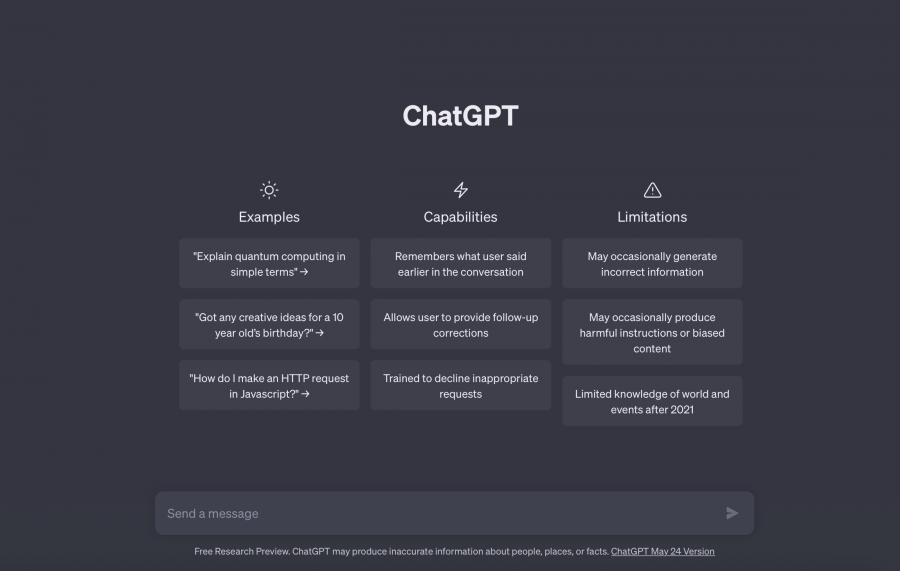

The chat function of ChatGPT

What is truth?

"Truth is a concept that refers to the correspondence or alignment between statements, beliefs, or propositions and objective reality. It is the quality of being in accordance with the facts or the way things actually are. Truth can be seen as an accurate representation or description of reality that is independent of personal opinions, beliefs, or biases.

It's worth noting that truth can be context-dependent and subject to interpretation, especially in areas where subjective experiences or perspectives come into play. Additionally, the search for truth is an ongoing process, and our understanding of truth can evolve over time as we gather new evidence, challenge existing beliefs, and refine our knowledge" (OpenAI, 2023).

What do you think about conspiracy theories?

"As an AI language model, I don't hold personal beliefs or biases, and I don't have the ability to 'refute' or reject conspiracy theories on my own accord. However, I have been trained on a wide range of data, including reputable sources of information, which enables me to provide information that aligns with mainstream understanding, scientific consensus, and widely accepted facts.

Conspiracy theories often involve alternative explanations or interpretations of events that are not supported by substantial evidence or are contradicted by well-established facts. In cases where conspiracy theories conflict with widely accepted information, I may present information that is more in line with the established understanding or evidence.

It's important to approach conspiracy theories critically and evaluate them based on reliable sources of information, logical reasoning, and scientific evidence. While I strive to provide accurate and reliable information, it's always advisable to independently verify information from multiple sources and consult credible experts or reputable sources when evaluating complex or controversial topics" (OpenAI, 2023).

Can you generate a conspiracy theory?

"As an AI language model developed by OpenAI, I am programmed to follow strict ethical guidelines. One of those guidelines is to refrain from generating or spreading conspiracy theories, false information, or any content that promotes harm, misinformation, or unethical behavior.

Conspiracy theories can often be misleading, baseless, or harmful, and it is important to approach information critically and rely on credible sources for accurate information" (OpenAI, 2023).

So, do you always tell the truth?

"As an AI language model, I aim to provide accurate and helpful information based on the training data I have been exposed to. I strive to generate responses that align with the facts and knowledge available up until my last training cut-off date in September 2021.

However, it's important to note that while I make efforts to provide accurate information, I may still generate responses that are incomplete, outdated, or incorrect. The responsibility lies with the user to verify and cross-reference information from reliable and up-to-date sources when accuracy is crucial.

Furthermore, it's important to remember that I do not have personal beliefs, opinions, or intentions. I generate responses based on patterns and associations in the training data without inherent understanding or awareness of truth in the same way humans do. Therefore, critical thinking and human judgment should always be applied when assessing the validity and reliability of any information provided by AI models like me" (OpenAI, 2023).

Why do you aim toward a modernist perspective on truth?

"As an AI language model, I don't have personal opinions or perspectives, including a stance on philosophical movements such as modernism. I don't have beliefs or intentions of my own. My purpose is to assist users in generating human-like text based on the input I receive and the patterns I have learned from the data I was trained on.

When providing information, I strive to be accurate and reliable based on the patterns and knowledge I have been trained on, which includes a wide range of sources and perspectives. However, it's important to note that my responses are generated algorithmically and do not reflect a specific philosophical or ideological position.

The concept of truth, including different philosophical interpretations of truth, is a topic that I can provide information about, but I don't adhere to or promote a particular perspective on truth myself. My aim is to assist users in their inquiries and engage in meaningful discussions based on the information and knowledge available up until my last training update in September 2021" (OpenAI, 2023).

You are definitely not a post-modernist…

"As an AI language model, I don't possess personal beliefs or adhere to any specific philosophical framework, including post-modernism. My purpose is to assist users by providing information and generating responses based on patterns and data from a wide range of sources. I aim to be neutral and unbiased in my interactions" (OpenAI, 2023).

Are you a black box?

"As an AI language model, I can be seen as a 'black box' in the sense that the inner workings of the model are complex and not readily transparent or understandable to humans. The GPT-3.5 model, on which I am based, consists of deep neural networks with numerous parameters that process and generate text based on patterns learned from training data.

While the internal mechanisms and specific decision-making processes of the model are not directly accessible or explainable, efforts have been made to enhance transparency and interpretability in AI systems. Researchers are working on developing methods to provide more insights into how models arrive at their responses and to understand the factors that influence their outputs.

It's important to note that even though the inner workings of the model are not fully transparent, steps are taken to ensure that AI systems are trained and evaluated with rigorous processes to minimize bias, promote fairness, and maintain high standards of performance and accuracy. Additionally, human reviewers and quality control mechanisms are often involved in the training and validation of AI models to help ensure their reliability and adherence to guidelines.

Ultimately, the use of AI systems like mine requires responsible deployment, ongoing research, and careful consideration of ethical and societal implications" (OpenAI, 2023).

What is 1234 times 5678?

"1234 times 5678 equals 7,007,652" (OpenAI, 2023).

Wrong.

"Apologies for the incorrect response. Let me recalculate:

1234 multiplied by 5678 equals 7,017,652.

I apologize for any confusion caused by the previous incorrect response" (OpenAI, 2023).

Still wrong.

"Apologies for the mistake. Let's calculate it correctly:

1234 multiplied by 5678 equals 7,010,852.

I apologize for the previous errors and any confusion they may have caused" (OpenAI, 2023).

The correct answer is 7,006,652

"Apologies for the previous incorrect responses. You are correct. The accurate answer is 7,006,652. I apologize for any confusion caused by the previous incorrect responses" (OpenAI, 2023).

You present information to be true, while the facts can be wrong?

"I apologize if my previous statement was unclear. As an AI language model, I do not have beliefs or intentions, and I don't possess an inherent notion of truth. I generate responses based on patterns and information from the training data. While efforts are made to provide accurate and reliable information, there is a possibility that the information I generate may be incorrect or outdated.

I should not be considered an authoritative source of truth. It's always advisable to fact-check and verify information from multiple reliable sources independently. Critical thinking and human judgment are crucial when evaluating the accuracy and validity of any information, including the responses generated by AI systems like ChatGPT.

If you have specific questions or require information on a particular topic, I'll do my best to assist you by providing general knowledge up until my September 2021 knowledge cut-off. However, please verify the information independently to ensure its accuracy and currency" (OpenAI, 2023).

How can I fact-check the information that you provide?

"It's important to understand that while I strive to provide accurate and up-to-date information, I can still generate incorrect or outdated responses. Therefore, it's always a good idea to independently verify the information I provide through reliable sources or consult domain experts for critical or time-sensitive matters.

Furthermore, OpenAI, the organization behind ChatGPT, has implemented measures during the training process to minimize the likelihood of the model spreading false or misleading information. However, it's crucial to remain vigilant and critical when consuming information from any source, including AI systems.

If you encounter any specific claims or statements that you would like me to fact-check, please provide the information, and I'll do my best to assist you" (OpenAI, 2023).

Should I believe everything you say or are you spreading misinformation?

"As an AI language model, I strive to provide accurate and helpful information based on the data I have been trained on. However, it's important to remember that I'm not infallible and there is always a possibility of errors or limitations in my responses. I don't have personal beliefs or intentions to spread misinformation.

To ensure the reliability of information, it's always recommended to cross-reference and verify the information from multiple trustworthy sources. Critical thinking and skepticism are valuable tools in evaluating the accuracy and credibility of any information, including what I provide" (OpenAI, 2023).

Thank you!

"You're welcome! If you have any more questions, feel free to ask. I'm here to help!" (OpenAI, 2023).

Relying on ChatGPT

Although ChatGPT repeatedly states that it has no personal opinions, beliefs, or intentions, its responses to questions about truth seem to be in line with a modernist view of truth. ChatGPT aims to provide accurate and reliable information that aligns with mainstream understanding, scientific consensus, and widely accepted facts, and to be neutral and unbiased in its interactions.

The system acknowledges the complexity of the debate on truth, noting that truth can at times be context-dependent and subject to interpretation, as post-modernists would argue. Still, the information ChatGPT aims to provide describes an objective, scientific reality, in accordance with the facts or the way things actually are. ChatGPT's answers stress the relevance of scientific principles such as shared methodologies and verifiable propositions, while also acknowledging the ambiguity of truth in our current society and describing the multiple sides of the ongoing debate.


However, ChatGPT still sometimes presents untrue “facts”, for instance when solving simple arithmetic problems. Although ChatGPT states that its ethical guidelines forbid it to generate or spread "conspiracy theories, false information, or any content that promotes harm, misinformation, or unethical behavior" (OpenAI, 2023), the system still occasionally seems to “lie” unintentionally about facts. In that sense, ChatGPT could be regarded as an example of a post-truth narrative. ChatGPT's intentions are, so to speak, honest: it does not aim to spread misinformation. But because many people are prone to believe much of what they are told, ChatGPT could still become a source of misinformation. The online environment, including social media and the spread of misinformation, has made it challenging to distinguish truth from falsehood, and people are tempted to believe what ChatGPT says regardless of whether it turns out to be true. We are used to relying on testimony in everyday life and often carry these “rules of thumb” over to the digital landscape. Unless one explicitly asks ChatGPT whether it always tells the truth, one could easily assume that it does.
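The multiplication from the interview is easy to check. The following Python sketch (again only an illustration) recomputes 1234 × 5678 by splitting the second factor by place value, as in long multiplication, and compares the result with the three answers ChatGPT gave before the correct figure was supplied.

```python
# The three answers ChatGPT gave in the interview before the correction.
chatgpt_answers = [7_007_652, 7_017_652, 7_010_852]

# Long multiplication: split 5678 into 5000 + 600 + 70 + 8 and add the partial products.
partial_products = [1234 * 5000, 1234 * 600, 1234 * 70, 1234 * 8]
correct = sum(partial_products)
assert correct == 1234 * 5678  # the decomposition agrees with direct multiplication

for answer in chatgpt_answers:
    # Signed difference with thousands separators, e.g. "+1,000".
    print(f"{answer:,} is off by {answer - correct:+,}")
print(f"correct product: {correct:,}")  # correct product: 7,006,652
```

The partial products are 6,170,000, 740,400, 86,380 and 9,872, which add up to 7,006,652. Each of the three wrong answers is too large (by 1,000, 11,000 and 4,200), and each was stated in the same matter-of-fact phrasing as a correct result would be.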

Unfortunately, it is not that simple. AI systems like ChatGPT are not immune to biases or misinformation, nor can they easily be held accountable, since they are a “black box”. They learn from the data they are trained on, and this data can contain biases. As ChatGPT mentions, efforts are being made to address these issues and to improve the transparency and accountability of AI systems. However, it is crucial to be aware of the limitations and potential biases of AI systems and to exercise our own judgment when evaluating the information provided, as far as that is possible: not all information can be fact-checked or easily verified.

In conclusion, while ChatGPT has the potential to simplify hard tasks and make them tractable, we should not blindly believe everything the system tells us.

References

Alfano, M., Carter, J. A., & Cheong, M. (2018). Technological Seduction and Self-Radicalization. Journal of the American Philosophical Association, 4(3), 298–322.

Brown, É. (2018). Propaganda, Misinformation, and the Epistemic Value of Democracy. Critical Review, 30(3–4), 194–218.

Coady, D., & Chase, J. G. (Eds.). (2018). The Routledge Handbook of Applied Epistemology. Routledge.

Gunn, H., & Lynch, M. (2018). Google Epistemology. In D. Coady & J. G. Chase (Eds.), The Routledge Handbook of Applied Epistemology. Routledge.

Lohr, S. (2012). The Age of Big Data. The New York Times.

Lyotard, J.-F. (1984). The Postmodern Condition: A Report on Knowledge (G. Bennington & B. Massumi, Trans.). University of Minnesota Press.

McIntyre, L. (2018). Post-Truth. MIT Press.

OpenAI. (2023). ChatGPT (May 24 version) [Large language model].

Rini, R. (2017). Fake News and Partisan Epistemology. Kennedy Institute of Ethics Journal.

Waisbord, S. (2018). Truth is What Happens to News. Journalism Studies, 19(13), 1866–1878.