Controversial content on YouTube and small channels: a perspective from early 2020

YouTube and its prosumers are in a relationship crisis. User-generated content on the site is being marginalized through policy changes the website has enacted.

The prosumers who helped build the website are increasingly frustrated with its governance. The way the site attempted to comply with COPPA regulations is the most recent example of this. But more than regulation is influencing YouTube's policy: its priorities have shifted. This phenomenon has been widely covered by authors like Van Dijck (2013). Google bought the video-sharing site in 2006 (Van Dijck, 2013), and the site has changed ever since. The question remains: what has changed, and why?

In this article, I will answer these questions. First, I will analyze what has changed on YouTube: which content has become more or less prevalent and promoted. Then I will analyze why Google has set this policy for YouTube. Finally, I will cover the example of COPPA, a recent illustration of the phenomenon I discuss.

One further aspect I would like to mention briefly is that, through the ability to link and comment, YouTube has become more hypertextual (Miller, 2012). Videos commonly refer to one another. An example of this is the myriad of collaborations: YouTubers often reference each other's channels and videos. This makes the space more internally connected. I will not go into this more deeply, but it is a development that has taken place alongside the phenomena I will discuss.

YouTube and the content shift

YouTube uses an algorithm, which changes over time, to push certain content. This largely determines the popularity of videos (Bishop, 2018). The interaction between humans and algorithms makes YouTube part of web 2.0 (Miller, 2012), the internet in which users interact with machines or algorithms. The algorithm is necessary because the database of videos on the site is so large that users would not find any fitting videos without it (Miller, 2012). The algorithms thus determine what users see.

YouTube can also demonetize and delete content. These are all measures to influence which content is posted and viewed on YouTube. That means Google, YouTube's owner, can govern the site (Van Dijck, 2013) in any way it wants.

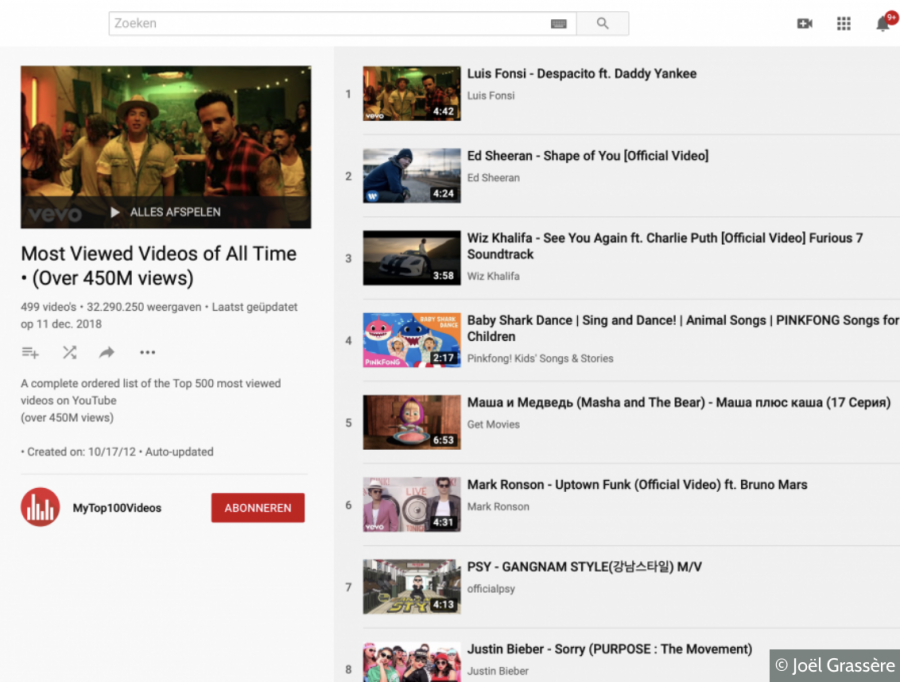

Most viewed videos in early 2020

To any long-time user of YouTube, a content shift is apparent. One way to illustrate this is by looking at the most viewed videos on the site. Last year, the most viewed videos were almost all music videos by large companies. In 2010, some were user-generated and many were not pure entertainment; there was some talk show content. In 2005, many were user-generated, while some were not. They were also all rather short, and all were some form of entertainment.

This shows a development: YouTube is becoming more hierarchical (Van Dijck, 2013). User-generated content (Van Dijck, 2013) has always made up a very large percentage of the content uploaded. However, there has always been professionally generated content as well (Van Dijck, 2013).

Relatively speaking, this professional content has become more popular, while user-generated content has seen a dip in popularity (Kim, 2012). There seems to be a consensus on this (Van Dijck, 2013): the researchers José van Dijck (2013) and Jin Kim (2012) agree. An example from education will illustrate this. But first I must briefly explain the adpocalypse (Rading-Stanford, 2018).

The adpocalypse (Rading-Stanford, 2018) was the result of a move by YouTube to mark certain videos as unsuitable for advertising. These videos were therefore demonetized: they would not receive advertising revenue. For the moment, all the reader needs to know is that this policy demonetized many channels that showed controversial content. It will be discussed in more depth later. I will now show a case of the adpocalypse (Rading-Stanford, 2018).

Recently, a set of history education channels run by private individuals was forced by the adpocalypse to start their own platform. See the video below for an announcement of this on one of these channels. They were all small channels that hosted controversial and somewhat educational content. In this article, controversial educational content refers to content over which disagreements can easily start, not fake news. For instance, discussing the history of the Confederate States of America would fall under this definition.

Small channels are often controversial. They need not please a large audience and can therefore be more controversial in the eyes of a large audience. Their target group is more focused, which means they need not take into account the values of so many people. This is not to say that larger channels cannot be controversial; Logan Paul's channel is an example of this. However, it does make sense that a smaller, more focused group can discuss things that are more controversial to the broader public.

This also shows in the content. Smaller channels can focus on one thing, as the channels History Buffs and The Great War do. The former focuses solely on reviewing historical movies and the latter on the history of World War One. These are specific topics. Because they are specific, and the channels therefore only have viewer bases of around a million subscribers at the time of writing, they can discuss controversial aspects of their topic, controversial in the sense of likely to start a thorough discussion. Examples of this are negative reviews of the movie Braveheart and coverage of the Armenian Genocide.

Furthermore, small channels usually have fewer sources of income and lack, for example, expensive sponsors. This means that demonetization cut into a larger part of their revenue. Larger organizations are often funded by merchandise, network funds or big sponsors, so they have more sources of income. Uninstitutionalized educational content is thus pushed away by the adpocalypse.

A lot of their content was demonetized. That was a larger blow to these smaller channels than to larger ones: more of their content was demonetized, and ad revenue was a larger part of their income. Therefore they needed to migrate to another platform. This shows that smaller producers and controversial content are taking a hit.

At the same time, institutionalized educators have joined YouTube. They were welcomed with open arms, as shown by the advent of YouTube EDU. This platform is supposed to be a tool for educators and focuses on universities and other institutes. These organizations are large and have large audiences, so they can be less controversial, and demonetization has a smaller impact on them. Institutionalization seems to go hand in hand with reduced controversy, as there are more checks and balances. So during the adpocalypse, institutionalized, non-controversial content is helped while the opposite is demoted.

By analyzing these two examples, we see a trend. The most-watched videos have become more institutionalized, and so has educational content. Uninstitutionalized content seems to be more controversial. YouTube now seems to favor institutionalized, non-controversial content.

YouTube has shifted its priorities in favor of uncontroversial, institutionalized content.

This can be seen in the site's technological features. YouTube personalizes the website: the aforementioned algorithm makes a unique version of the website based on information about the user. This creates a filter bubble (Sunstein, 2017) in which no contradicting information is shown. Another version of this can be seen in the local versions of YouTube, which all cater to a certain country or audience. After a law on internet anonymity was enacted in South Korea, the country got its own version, as the site did not want to break the law. But this personalization extends to the personal level too.

Through the filter bubble, there is little to no shared experience, and new information that might be controversial to the consumer, because it goes against their established views, is blocked out. Only information confirming one's point of view remains. YouTube does not only adapt a country's version to comply with laws; it personalizes each user's version so that we do not see information that clashes with our established views. Controversy is individually and societally defined, so YouTube adapts the content accordingly.

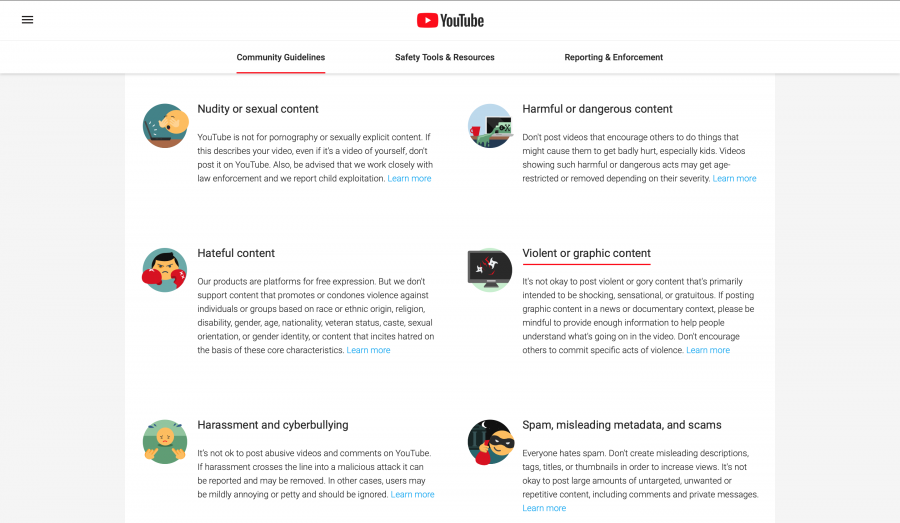

YouTube also has a policy of censorship. Nudity or sexual content, harmful or dangerous content, hateful content, violence and many other things are not allowed on the site, and YouTube will remove such content. Some of this might be for moral reasons, but it is also all controversial. At the very least, the most controversial type of content seems to be removed by YouTube. This confirms that it does not want any controversy.

All of this shows that YouTube moderates (Gillespie, 2018) and why this is so difficult. Moderation is a way of governing the platform (Van Dijck, 2013). At the same time, it makes YouTube a mediator rather than an intermediary (Van Dijck, 2013), as it actively makes connections. YouTube is not just a bridge; it is a dating site of sorts, a dating site between humans and videos. It suggests the connections, actively seeking to match people with certain videos. This hypermediatedness (Miller, 2012) has been an aspect of many internet media since the emergence of web 2.0.

YouTube moderates (Gillespie, 2018) because it makes these connections and determines what is and is not allowed on the site. The aforementioned policy of censorship and the individual and national versions of the site are all forms of moderation. The difficulties of moderation are also showcased here. YouTube needs to operate in many countries and adapt to their laws, as in Korea. It needs to try to reflect its user base in the way it moderates, but this is hard to achieve with such a large and diverse group of users. Its policy of censorship therefore covers things many people find unethical or bad. Furthermore, the goals of the company compel it to moderate in a certain way (Gillespie, 2018). This is not always in the interest of the user, as exemplified by the site's use of filter bubbles (Sunstein, 2017).

Restrictions YouTube imposes

All in all, one can say that YouTube seems to be demoting user-generated and controversial content through its moderation. Its algorithm is pushing professional and uncontroversial content. The effects of this can be seen in the personalized versions of the site, but also in the most-watched videos and in which content is emerging or declining. Professionally generated content is on the rise, while user-generated content is in decline. The adpocalypse has helped solidify this. In the next section, I will analyze why YouTube would enact this policy.

Advertising and content

I have noted that YouTube seems to be shifting from uninstitutionalized and controversial content to the opposite. Why would it do that? After all, the site was a success even before this shift happened.

This shift is in line with Google's acquisition of the website. The site has to be profitable for the company, and the main way it achieves this is through targeted advertising (Hoyle, 2000). YouTube shows ads before videos. These, not unlike video recommendations, are influenced by an algorithm and user data. That is why it is called targeted advertising: it is marketing to people who are known to be potentially interested. The design and interface changes Google has brought to YouTube promote this. To quote Van Dijck (2013): "Youtube's interface illustrates the power of combining UGC and PGC with search and advertising."

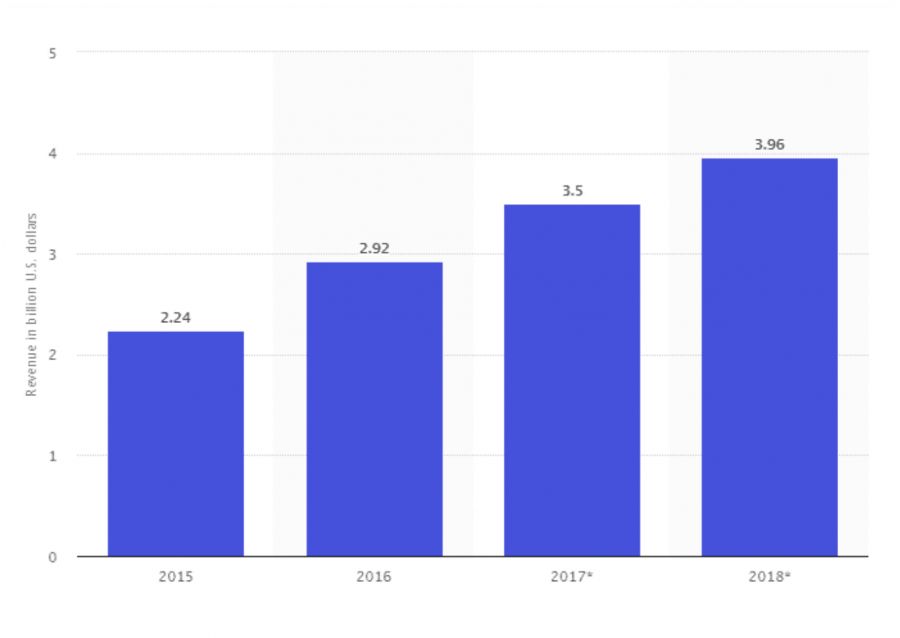

YouTube ad revenue growth

But what does this have to do with the content shift? There are two aspects to this. One is the algorithm: because YouTube uses algorithms both for advertising and for promoting videos, both are targeted at people. The more important aspect is that companies like to be associated with institutionalized, safe videos. They do not want to be associated with controversy (Van Dijck, 2013), because they want to maintain their image and its stability. An example of this happened last February.

Disney, Nestlé, and other multinationals pulled ads from YouTube after a pedophilia ring was discovered. This is, of course, an extreme example, and it was done partly for moral reasons. But it is true that controversy is not favored by many companies (Bergen, 2007). We must combine this with our previous conclusion. Controversial videos are often made by non-professional prosumers. Therefore, if user-generated content is the most popular, controversy is more popular. This means fewer companies want to be associated with YouTube videos, so there is less demand for YouTube's ad space and its revenue goes down. It is unsurprising that YouTube took measures this summer to make sure all content was appropriate. It was a reaction. Google is shifting its content by demonetizing and recommending differently, because it wants to accommodate advertisers. Advertisers prefer having their ads play before institutionalized, non-controversial videos.

YouTube must appeal to advertisers and thus breed out most controversy.

In short, Google is a commercial company that must make a profit. When the company acquired YouTube, the site had to make a profit as well. It did this by selling targeted advertising space. Companies do not want to be associated with controversy; they want to be associated with institutionalized, well-known IPs and characters. So Google accommodates them by demonetizing and not recommending controversial content, all the while pushing other videos.

YouTube and COPPA

YouTube is a multinational company and must therefore adapt to local legislation. However, there is one piece of legislation that has impacted all users and creators on YouTube: COPPA, the Children's Online Privacy Protection Act of the United States of America. This law states that a website must protect the privacy of children. It places conditions, such as parental consent, on collecting information about a child through the internet.

However, some YouTubers are not satisfied with the implementation of this rule. They are afraid of another adpocalypse, because YouTube has said it would demonetize videos that are "targeted at children". This makes some sense: YouTube will not be running targeted ads in front of these videos, and many countries have laws restricting child-oriented advertising. However, YouTube recommends that creators cope with this by either adapting their channel or finding other forms of revenue. There are a couple of problems with the way YouTube implemented this law. They show that YouTube has mainly furthered its own agenda by implementing this new policy, an agenda of promoting large channels and demoting smaller ones. The demonetization hits small creators harder than others.

The first problem is the categories it creates. There is only children's content and adult content. Many YouTubers, like Micheal Kay, consider their content family-oriented: neither merely for children nor merely for adults. These videos could still legally receive advertising targeted at adults. Therefore the videos would not have to be demonetized, nor would the channels have to change. Yet many family-oriented channels, afraid of being demonetized, have changed their content. For instance, Micheal Kay no longer features characters. He expresses his thoughts in the video below.

This shows that small channels can easily get into big trouble because they are afraid that a large portion of their income might disappear. Institutionalized creators have more sources of income and thus run a smaller risk.

Another issue with these measures is the alternatives YouTube offered. These are things large creators can do, like sponsorships. These options still advertise to children, which shows that the enactment of this policy was not about helping children. It was about complying and furthering an agenda of institutionalization.

COPPA has furthered the divide between institutionalized content and its counterpart.

In conclusion, COPPA has not delivered on YouTube what it was intended to do. It would have been more effective if a category for family-oriented content had been created, because the criteria YouTube uses for child-oriented content can be interpreted broadly and applied to media for adults. By complying with COPPA in this way, YouTube mainly furthers its own agenda, because the demonetization hits small channels hardest.

Conclusion

YouTube has seen a content shift from user-generated content to professionally generated content. This shift can be seen in the popularity of videos. User-generated content is often more controversial because of its smaller audience. The site uses algorithms to achieve this shift, in order to accommodate advertisers by breeding out controversy. That, in combination with the filter bubble, leaves little room for common experience and controversy. These powers of governance and moderation make YouTube a mediator (Van Dijck, 2013).

The way in which YouTube implemented COPPA has confirmed this. The policies it uses do not protect children as they are said to. Rather, they filter out user-generated content, because demonetization has a larger impact on user-run channels than on professional ones. This is all reflected in the way YouTube moderates. It is clear that there can be a dichotomy between what is good for the company and what is good for the users, and the site has implemented the aforementioned policies because of this. They help the company more than the creators and users. Advertisers are the focus of YouTube's moderation. This platform, like all others, is not neutral (Van Dijck, 2013).

All in all, this will lead to less user-generated and controversial content, which makes YouTube a more hierarchical and homogeneous space. Platforms "shape the shape of public discourse" (Gillespie, 2018), and thus this change is significant. The shape of public discourse has become more individually homogeneous.

References:

Abelson, H., Bulger, M., Liccardi, I., Mackay, W., Weitzner, D. (2014). Can apps play by the COPPA Rules? Twelfth Annual International Conference on Privacy, Security and Trust, Toronto, ON, Canada.

Almeida, V., Araújo, C., Doneda, D., Hartung, P., Magno, G., Meira, W. (2017, July 4). Characterizing videos, audience and advertising in Youtube channels for kids.

Arthurs, J., Drakopoulou, S., Gandini, A. (2018). Researching YouTube. Journal of Research into New Media Technologies.

Bergen, L., Soontea, A. (2007). Advertiser Pressure on Daily Newspapers: A Survey of Advertising Sales Executives. Journal of Advertising, Volume 36, Issue 2.

Cuthrell, K., Jones, T. (2011). YouTube: Educational Potentials and Pitfalls. Interdisciplinary Journal of Practice, Theory, and Applied Research, Volume 28.

FTC. (2015, March 20). Complying with COPPA: Frequently Asked Questions.

Gillespie, T. (2018, March 20). Custodians of the internet: Platforms, content moderation, and the hidden decisions that shape social media.

Kim, J. (2012, January 31). The institutionalization of YouTube: From user-generated content to professionally generated content.

Van Dijck, J. (2013). The Culture of Connectivity: A Critical History of Social Media. Oxford University Press, Oxford, UK.

Veraa, L. (2019, March 4). I’m an Instagram Influencer, what do you do for a living? Diggit Magazine.

Miller, V. (2011, February). Understanding Digital Culture. University of Kent.

Serrato, C. (2015, April 4). YouTube Kids – Luring Kids in, One App at a Time. Golden Gate University School of Law.

Sinke, A. (2018, September 26). YouTube's demonetization situation and the adpocalypse. Diggit Magazine.

Stanford, S. (2018). YouTube and the Adpocalypse: How Have The New YouTube Advertising Friendly Guidelines Shaped Creator Participation and Audience Engagement? Lund University.

Sunstein, C. (2017). #Republic. Princeton University Press, 41 William Street, Princeton, New Jersey.
