The governmental fight and the concept of privacy

In its proposal for a regulation of the European Parliament and of the Council, the European Commission argues that "the EU is currently still failing to protect children from falling victim to child sexual abuse, and that the online dimension represents a particular challenge" (European Commission, 2022). The European Union therefore wants to oblige providers to automatically screen all private chats, messages, and emails for suspicious content, generically and indiscriminately. The online debate makes clear that the proposal has both proponents and opponents. This analysis examines their motivations from the perspective of privacy and how its meaning varies from person to person.

A need for change

In the battle against sexual violence during childhood, including online sexual abuse of children, the European Commission has proposed a regulation of the European Parliament and of the Council, dated 11 May 2022, laying down rules to prevent and combat child sexual abuse. The proposal states that "child sexual abuse material is a product of the physical sexual abuse of children. Its detection and reporting is necessary to prevent its production and dissemination, and a vital means to identify and assist its victims" (European Commission, 2022). Children have experienced a "significantly higher degree of unwanted approaches online" since the pandemic. These approaches include incitement to child sexual abuse, which can lead to "sexual abuse and sexual exploitation of children and child sexual abuse materials". Sexual exploitation and abuse of children are criminalized across the European Union, and so is child sexual abuse material. However, as the proposal states, "it is clear that the EU is currently still failing to protect children from falling victim to child sexual abuse, and that the online dimension represents a particular challenge" (European Commission, 2022).

On 24 March 2021, a comprehensive European Union Strategy on the Rights of the Child was proposed. It aims to protect children through multi-stakeholder cooperation and, with a focus on the online aspect, "invites companies to continue their efforts to detect, report and remove illegal content, including online child sexual abuse, from their platforms and services" (European Commission, 2022). Although a few providers already voluntarily use technologies to detect, report and remove online child sexual abuse on their services, the majority do not. In addition, the quality of the reports is inconsistent. In the US, where providers are legally obliged to report child sexual abuse on their services once they become aware of it, the National Centre for Missing and Exploited Children received "over 21 million reports in 2020, of which over 1 million related to EU member states".

According to the Commission, voluntary action has therefore proven insufficient. As a result, a new proposal was drawn up that "seeks to establish a clear and harmonized legal framework on preventing and combating online child sexual abuse". "It seeks to provide legal certainty to providers as to their responsibilities to assess and mitigate risks and, where necessary, to detect, report and remove such abuse on their services in a manner consistent with the fundamental rights laid down in the Charter and as general principles of EU law" (European Commission, 2022). In short, providers have to comply with detection obligations and are obliged to report and remove child sexual abuse material. In practice, the European Union wants to oblige providers to automatically screen all private chats, messages, and emails for suspicious content, generically and indiscriminately.

The privacy issue

Not everyone agrees with the proposal. Individuals as well as political parties and institutes across Europe are speaking out about it. The Dutch Piratenpartij (Pirate Party), for example, called it "het einde van de privacy van digitale communicatie" (2022) (the end of the privacy of digital communication). The Gesellschaft für Freiheitsrechte (Society for Civil Rights) argues: "Chatkontrolle: Mit Grundrechten unvereinbar" (2022) (chat control: incompatible with fundamental rights). The issue is also being discussed on news platforms, where people often speak out against the proposal. Among other things, privacy and democracy are called into question.

"Digital surveillance is the process by which some form of human activity is analyzed by a computer according to some specified rule" (Lessig, 2006, p. 209). The changes in this process over the years can be described as securitization (Pallitto & Heyman, 2008). In his book, Lessig (2006) cites Brandeis's definition of privacy: "the right to be left alone". Lessig then explains that, from the perspective of the law, "it is the set of legal restrictions on the power of others to invade a protected space". The proposal concerns regulation and legislation and affects the privacy of individuals. It is therefore of interest to examine the collected data against the concept of privacy. Since the data was extracted from the news platform NU.nl, the research question is as follows: how do conceptions of privacy differ among individuals commenting on the news platform NU.nl, and how does this influence whether they are for or against the proposal?

Relevance

Privacy is currently the subject of much debate. People are more aware of their (lack of) privacy than ever before. The data show that the group opposing the proposal is much larger than the group in favor of it, even though the proposal is intended to combat child abuse. It is therefore important to learn more about the motivation for opposing such a proposal. In addition, it is instructive to understand why people support it. This study therefore contributes to a more detailed understanding of how conceptions of privacy differ with regard to the proposal, which can in turn inform better policy on this issue.

Privacy, securitization, and surveillance creep

Privacy in a digital age

As stated earlier, privacy can be defined as the right to be left alone. Lessig (2006) argues that privacy can be compromised and distinguishes two dimensions: the part of an individual's life that can be monitored, and the part that can be searched. The difference is that the former mainly concerns what is observed but soon forgotten, while the latter is stored and therefore searchable and findable. Depending on the technology involved, the two dimensions can interact. As an example, Lessig (2006) explains that monitoring can produce a record in the form of memory. Such a record is searchable in principle, but searching it would cost the government a huge amount of money and time, and memory is difficult to check for truth. It is therefore unlikely to happen.

Digital technologies changed this balance radically. The Internet, online searches (Google, for example, keeps a record of searches), e-mail, voice mail, voice (in the form of telephone conversations), video, and even body parts (DNA) allow more behavior to be monitored. In addition, these technologies now gather data in a manner that makes it searchable (Lessig, 2006). Data thus becomes accessible: behavior can be tracked and events reconstructed.

As noted earlier, Lessig (2006, p. 209) defines digital surveillance as "the process by which some form of human activity is analyzed by a computer according to some specified rule". The rule defines the specific search query. The crucial feature is that the search is carried out by a computer and only then followed up by a human. Lessig identifies two main responses. Pro-privacy people argue that there is no difference between a computer watching the data and the owner of that computer doing so. In both cases, a legitimate and reasonable expectation of privacy, which the law should protect, has been breached. Pro-security people argue that there is a significant difference, since it is a machine that is processing the data and "machines don't gossip. They're just logic machines that act based upon conditions" (Lessig, 2006, p. 210).

The 'left alone' in Brandeis's 'right to be left alone', however, is challenged when all our communication is tracked. There are multiple examples of surveillance that was introduced for one purpose and later used for others. An open, transparent, and verifiable system in which only the evidence for the crime that was searched for may be used leaves room for discussion. However, under a conception of privacy grounded in dignity, a search is an assault on the dignity of the individual, even when the individual is not charged or the search goes unnoticed. Only when the state has a good reason to search before it searches is the dignity interest met. "A search without justification harms your dignity whether it interferes with your life or not" (Lessig, 2006, p. 211).

Securitization and surveillance creep

Pallitto and Heyman (2008) define securitization as "the spreading of national security techniques across a wide variety of issue domains". The term, however, was already used by Buzan, Waever, and de Wilde ten years earlier. In their book, they argue that securitization occurs when an issue "is presented as an existential threat, requiring emergency measures and justifying actions outside the normal bounds of political procedure" (Buzan et al., 1998, pp. 23-24). By these criteria, securitization is constituted by the intersubjective establishment of an existential threat with a saliency sufficient to have substantial political effects. Thus, for securitization to be successful, three components must be present: existential threats, emergency action, and effects on interunit relations by breaking free of rules.

In the case of the proposal, the issue identified is that the European Union fails to protect children from falling victim to child sexual abuse. According to the European Commission (2022), the online dimension represents a particular challenge. The question in terms of securitization is: "when does an argument with this particular rhetorical and semiotic structure achieve sufficient effect to make an audience tolerate violations of rules that would otherwise have to be obeyed?" (Buzan et al., 1998, p. 25). According to the Commission, the issue poses an existential threat, making it a priority to address. For this reason, the European Union wants to oblige providers to automatically screen all private chats, messages, and emails for suspicious content, generically and indiscriminately. This is an act that violates privacy rules that would otherwise have been obeyed.

The concept of surveillance creep is derived from the concept of function creep. Koops (2021, pp. 29-30) describes function creep as "the expansion of a system or technology beyond its original purposes", or "a gradual expansion of the functionality of some system or technology beyond what it was originally created for". Collins Dictionary extends the definition: "the gradual widening of the use of a technology or system beyond the purpose for which it was originally intended, esp when this leads to potential invasion of privacy".

It can be argued that the regulation as proposed is highly susceptible to surveillance creep. As the European Commission states, the proposal aims to oblige providers to automatically screen all private chats, messages, and emails for suspicious content, generically and indiscriminately. However, if this law comes into effect, there will be weak spots. Whether the screening is truly generic and indiscriminate cannot be verified; one must rely on the party carrying it out. Furthermore, it is never certain that the data will not be used for other purposes. After all, it is not an open, transparent, and verifiable system as described by Lessig (2006, p. 211).

Methodology

The analysis used a mixed-methods approach. First, a literature review was conducted on the concepts of securitization, privacy, and surveillance creep. To gain insight into the different views on the proposal, reactions to news items posted on the news platform NU.nl were analyzed. NU.nl has a comment platform that allows readers to respond to news articles. In this way, readers can participate in discussions and express their opinions.

Three relevant news articles were posted on NU.nl in response to the proposal of 11 May 2022. They had 583, 146, and 339 comments respectively, for a total of 1,068 (NU.nl, 2022a; NU.nl, 2022b; NU.nl, 2022c). These responses were manually extracted and then subjected to discourse analysis. Based on a manual reading of each response in its context, the responses were assigned to one of three categories: 'against the proposal', 'for the proposal', and 'n/a'. Additionally, the commenter's argumentation was identified from the context. The analysis was done in Dutch. Afterwards, the responses and their context were manually translated into English.

Of the 1,068 comments, 1,014 were analyzed. The remaining 54 were not visible because they were written by accounts that have since been deleted or blocked. The visible comments were categorized as 'for', 'against', or 'not applicable'. A comment was coded as 'for' or 'against' if an opinion could clearly be identified, and as 'not applicable' if no opinion could be identified or if it went off-topic. The motives were then analyzed manually. To answer the research question, the findings were then compared against the concepts of privacy, securitization, and surveillance creep.

Findings

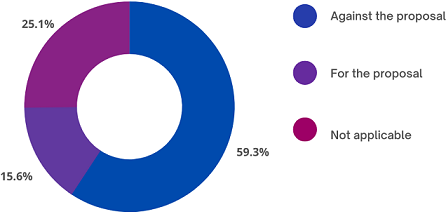

The findings show that of the 1,014 comments, 601 (59.3%) were against the proposal in its current form. A further 255 comments (25.1%) were not applicable: they contained no clear opinion and sometimes did not address the topic at all. The remaining 158 comments (15.6%) contained a clear, positive opinion regarding the proposal (Figure 1).

Figure 1: Categorized comments
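
The reported shares follow directly from the counts given in the methodology and findings. As a minimal sketch of that arithmetic, the Python snippet below recomputes them using only figures stated in the text; the variable names are illustrative assumptions and not part of the study.

```python
# Counts taken from the text; all variable names are hypothetical.
comments_per_article = [583, 146, 339]   # NU.nl 2022a, 2022b, 2022c
not_visible = 54                         # deleted or blocked accounts
categories = {"against": 601, "not applicable": 255, "for": 158}

total_posted = sum(comments_per_article)
analyzed = total_posted - not_visible

# The three categories should account for every analyzed comment.
assert sum(categories.values()) == analyzed

# Share of each category among the analyzed comments, in percent.
shares = {label: round(100 * n / analyzed, 1) for label, n in categories.items()}

for label, share in shares.items():
    print(f"{label}: {categories[label]} of {analyzed} = {share}%")
```

Run as written, the check confirms that the three categories add up to the 1,014 analyzed comments and that the rounded shares match those shown in Figure 1.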

In the following section, the three main points raised in the comments against the proposal are presented. Thereafter, the arguments of the comments in favor of the proposal are described.

Against the proposal

Three main points emerged from the comments opposing the proposal. A recurring topic is the principle of proportionality. Within European law, this principle governs the actions of the European Union: such action must not go beyond what is necessary to achieve the objectives of the treaties. Actions must be appropriate and necessary, and there must be no less restrictive alternative that can achieve the same end (Europa Nu, n.d.).

Those who oppose the proposal believe that the end does not justify the means. They argue that ordinary citizens bear the burden, while criminals easily circumvent such systems. Furthermore, they believe that 'false positive' hits will require so much manual review that no time is left to catch the real criminals. In addition, confidentiality cannot be guaranteed. In their view, what is achieved does not outweigh the privacy that is given up.

Another recurring topic is the presumption of innocence. Article 6, paragraph 2 of the European Convention on Human Rights states that "everyone charged with a criminal offence shall be presumed innocent until proved guilty according to law". However, the findings show that many commenters feel that, if this regulation enters into force, they will be treated as guilty by default and the screening of their devices will have to prove their innocence.

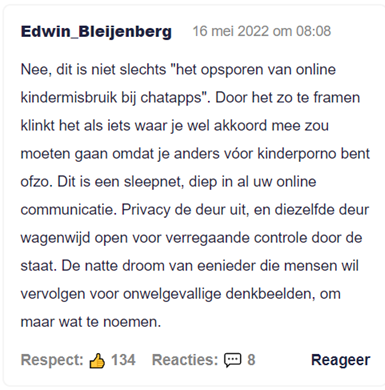

'This is a dragnet, deep in all your online communication. Privacy out the door, and that same door wide open to deep state control'

Finally, it is striking that many believe child abuse is being used as a pretext to get the proposal accepted. After all, child abuse is a sensitive subject. Commenters regularly note that the current regulation targets child abuse and child pornography, but they are concerned that the data will be used for other purposes at a later stage. As the user shown in Figure 2 puts it, "framing it like that makes it sound like something you'd have to agree to because otherwise you're in favor of child porn or something. This is a dragnet, deep in all your online communication. Privacy out the door, and that same door wide open to deep state control".

Figure 2: An example of a comment from a user that is against the proposal

It is argued that once the law has been passed, it cannot simply be reversed. Even if Europe has good intentions at the moment, the question is what it will be like when others come to power.

For the proposal

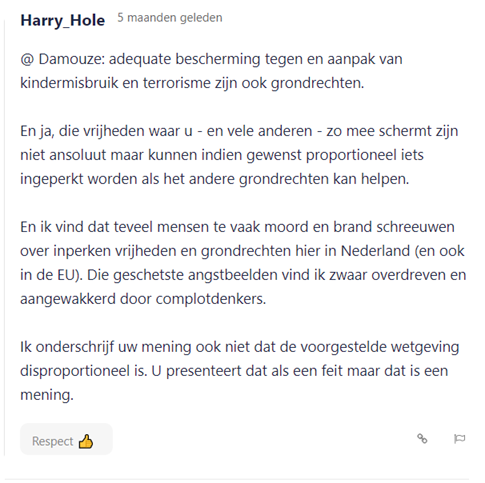

The results show a twofold picture among those who have expressed support for the proposal. The argument regarding the proportionality principle is dismissed several times. This is shown, for example, by the user in Figure 3: "And I think too many people shout bloody murder about curtailing freedoms and fundamental rights here in the Netherlands (and also in the EU). I find the sketched fear highly exaggerated and fueled by conspiracy theorists. Nor do I agree with your view that the proposed legislation is disproportionate. You present that as fact but that is an opinion".

Figure 3: An example of a comment from a user that is for the proposal

Another argument in favor of the proposal is that the end does justify the means. Proponents state that those doing the screening are not at all interested in messages or photos unless illegal material is found on the device, and they see nothing wrong with that.

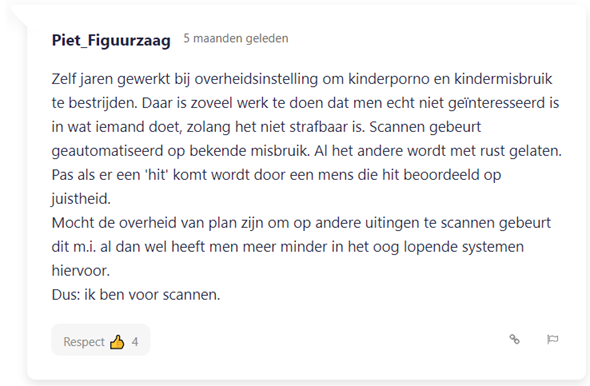

'There is so much work to be done there that people are really not interested in what someone is doing, as long as it is not punishable.'

The last frequently recurring argument is simply that protecting children is more important than one's own privacy. It is striking that some of the proponents have had to deal with child abuse (indirectly) or indicate that they have children themselves and are therefore alert. For example, the user in figure 4 says: "I worked for years at a government agency to combat child pornography and child abuse. There is so much work to be done there that people are really not interested in what someone is doing, as long as it is not punishable".

Figure 4: An example of a user who has dealt with child abuse through their occupation

The battle of privacy

The data showed that the opponents of the proposal form by far the largest group, at 59.3%. There is great resistance to the invasion of privacy. People feel that 'the right to be left alone' (Lessig, 2006, p. 210) is at stake. One of the arguments put forward is that people do not want to give up their privacy because the end does not justify the means. Criminals can easily bypass the system. In addition, the entire population has to give up its privacy because of a small percentage who are involved in child pornography. Furthermore, opponents believe that the presumption of innocence is being violated.

The next issue is securitization. When answering the question "when does an argument with this particular rhetorical and semiotic structure achieve sufficient effect to make an audience tolerate violations of rules that would otherwise have to be obeyed?" (Buzan et al., 1998, p. 25), the findings show that the opponents of the proposal are convinced that the three criteria, 'existential threats, emergency action, and effects on interunit relations by breaking free of rules', are not met. The opponents do not see sufficient need for the screening of devices. They argue that the measure does not work and that 'child abuse' is used as an excuse to collect data.

This leads to the final point, namely the argument that this law is being proposed so that data can later be collected for other purposes. As Lessig (2006, p. 211) argued, there is only room for discussion when there is an open, transparent, and verifiable system. Since the proposal does not contain such a system, it is seen as prone to surveillance creep, in which the technology is expanded beyond its original purposes (Koops, 2021, pp. 29-30). These arguments lead to the conclusion that, for the opponents, the search proposed by the European Commission (2022) is not sufficiently justified and thus violates the dignity of the individual (Lessig, 2006, p. 211).

This is different for those who are in favor of the proposal (15.6%). The results show that the arguments of this group are the opposite of those of the opponents. In their view, the three components of successful securitization are met. They believe emergency action is necessary to combat an existential threat, namely child abuse. Those who are in favor of the proposal consider protecting and rescuing children more important than the right to be left alone (Lessig, 2006).

This answers the question of how conceptions of privacy differ between the opponents and proponents of the proposal. The opponents see the right to be left alone as an ultimate good that must be protected from the government, while the proponents give priority to fighting child pornography and abuse. As Lessig (2006, p. 211) puts it, "a search without justification harms your dignity whether it interferes with your life or not". The difference is that for the opponents the search is not justified and therefore harms their dignity, while for the proponents the search is justified and thus their dignity remains intact.

References:

Buzan, B., Waever, O., & de Wilde, J. (1998). Security: A New Framework for Analysis. Boulder, Colorado: Lynne Rienner Publishers.

European Commission. (2022, May 11). Proposal for a Regulation of the European Parliament and of the Council laying down rules to prevent and combat child sexual abuse. Retrieved September 30, 2022.

Europa Nu. (n.d.). Proportionaliteitsbeginsel. Europa Nu. Retrieved October 25, 2022.

Function creep. (n.d.). In Collins Dictionary. Retrieved October 15, 2022.

Koops, B. J. (2021). The concept of function creep. Law, Innovation and Technology, 13(1), 29-56. DOI: 10.1080/17579961.2021.1898299

Lessig, L. (2006). Code: Version 2.0. Basic Books.

Pallitto, R., & Heyman, J. (2008). Theorizing cross-border mobility: Surveillance, security and identity. Surveillance & Society, 5(3).

NU.nl. (2022a, May 11). Techbedrijven moeten van Brussel actief online kindermisbruik gaan opsporen. NU.nl.

NU.nl. (2022b, May 16). Waarom er kritiek is op het opsporen van online kindermisbruik bij chatapps. NU.nl.

NU.nl. (2022c, May 23). Europa werkt aan strengere regels tegen kinderporno: 3 vragen en antwoorden. NU.nl.

wetten.nl - Regeling - Verdrag tot bescherming van de rechten van de mens en de fundamentele vrijheden - BWBV0001000. (2021, August 1).