Why Facebook? Activism, censorship, and democracy

In this article, my aim is to discuss Facebook’s role as surveillor and censor of users’ posts, and one group of activists’ construction of shared attitudes regarding these moderation practices. Below, I am particularly interested in how this group of people interact around a certain set of Facebook’s “Community Standards” practices and policies in order to make meaning of them, make both political and social use of them, and be critical of them.

Research context

I spent late 2016 through 2019 as an active member of two intertwined activist organizations in the United States named Pantsuit Republic Texas (PSR) and Pantsuit Republic Houston (PSRH). My involvement in both began not long after Donald Trump’s election as the country’s 45th President. I held the position of Secretary of the PSR leadership board from August 2017 to August 2019, and this two-year period was the time frame of my ethnographic and largely Facebook-centric data collection for this research. From among my eight total research participants, those mentioned in this article are Joseph, Samantha, and Lucy. For more information on the participants and the data collection process see Zentz (2021).

These activists were all thoroughly aware of and regularly critical of the problems that the Facebook platform presented, from privacy and algorithm issues (Oremus et al. 2021, Treré 2019) to “keyboard warriors” and “slacktivists” (Dennis 2019), and to unequal censorship, according to Facebook’s “Community Standards” regarding hate speech, of posts made by people of varying races, ethnicities and genders. My approach to the group’s collective “battle” against these policies below will consist of analysis of how the group interacted on Facebook about Facebook; that is, how these individual group members took individual and collective stances toward their own texts, toward Facebook, toward each other(’s texts), and toward notions of free speech and inequality that informed Facebook’s moderation - or censorship - policies and activities.

In order to analyze the group’s interactions regarding Facebook moderation, I have generally relied on frameworks of stance and group identity practices (see Zentz 2021). Below, I will proceed to analyze the participants’ behaviors specifically as they relate to Facebook’s moderation practices, focusing primarily on one sequence of events in which Joseph was repeatedly put in “Facebook jail” (his account was suspended for varying lengths of time) for writing posts containing the phrases “Men are savages” and “Men are trash”. In closing, I will relate these activists’ collective criticisms of the platform to broader discussions about hate speech, equity, and equality.

Everything is Moderation

I draw from several authors, including Gillespie (2018) and Bucher (2021), to define moderation as the governance mechanisms in social media spaces that aim to keep user communication in line with certain norms that are ultimately decided by corporate leaders (see also Grimmelmann 2015, Myers-West 2018, Roberts 2014). In Facebook’s case, there are top-down corporate standards for regulating speech on the platform, and these work in concert with a reliance on the users themselves, who are charged with “flagging” content - reporting it to “the system” for review - that they deem unsuitable for consumption. Facebook calls this set of practices its “Community Standards”.

The text of Facebook’s Community Standards has been public-facing since 2018, following a leak of moderator training documents in 2017. Since then, the Community Standards text has been posted and regularly updated on Facebook’s website, consisting of several different pages categorized under five separate topic areas: “Violence and Criminal Behavior”; “Safety”; “Objectionable Content”; “Integrity and Authenticity”; and “Respecting Intellectual Property”. Facebook’s “Hate Speech” policy statement falls under the category of Objectionable Content. The other subcategories that appear alongside it are “Violent and Graphic Content”; “Adult Nudity and Sexual Activity”; and “Sexual Solicitation”. The current policy, which I accessed on November 12, 2021, begins with a definition of hate speech that is based on ideas of “protected characteristics” and “direct attacks”:

"We define hate speech as a direct attack against people — rather than concepts or institutions — on the basis of what we call protected characteristics: race, ethnicity, national origin, disability, religious affiliation, caste, sexual orientation, sex, gender identity and serious disease. We define attacks as violent or dehumanizing speech, harmful stereotypes, statements of inferiority, expressions of contempt, disgust or dismissal, cursing and calls for exclusion or segregation. We also prohibit the use of harmful stereotypes, which we define as dehumanizing comparisons that have historically been used to attack, intimidate, or exclude specific groups, and that are often linked with offline violence. We consider age a protected characteristic when referenced along with another protected characteristic. We also protect refugees, migrants, immigrants and asylum seekers from the most severe attacks, though we do allow commentary and criticism of immigration policies. Similarly, we provide some protections for characteristics like occupation, when they’re referenced along with a protected characteristic. Sometimes, based on local nuance, we consider certain words or phrases as code words for PC groups.

We recognize that people sometimes share content that includes someone else’s hate speech to condemn it or raise awareness. In other cases, speech that might otherwise violate our standards can be used self-referentially or in an empowering way. Our policies are designed to allow room for these types of speech, but we require people to clearly indicate their intent. If the intention is unclear, we may remove content."

This policy text is considerably more detailed than the version that would have been in effect when Joseph was sent to “Facebook jail”, as we will see in the next section. The Hate Speech policy document in effect when Joseph’s account was suspended was dated August 31, 2018 and was much sparser, beginning:

"We define hate speech as a direct attack on people based on what we call protected characteristics — race, ethnicity, national origin, religious affiliation, caste, sexual orientation, sex, gender, gender identity, and serious disability or disease. We also provide some protections for immigration status. We define attack as violent or dehumanizing speech, statements of inferiority, and calls for exclusion or segregation. Attacks are separated into three tiers of severity, described below."

The policy that I believe Joseph’s statements would have fallen under was in the Tier 2 category, described as follows:

"Tier 2 attacks, which target a person or group of people who share any of the above-listed characteristics, where attack is defined as

- Statements of inferiority or an image implying a person's or a group's physical, mental, or moral deficiency
  - Physical (including but not limited to “deformed,” “undeveloped,” “hideous,” “ugly”)
  - Mental (including but not limited to “retarded,” “cretin,” “low IQ,” “stupid,” “idiot”)
  - Moral (including but not limited to “slutty,” “fraud,” “cheap,” “free riders”)

- Expressions of contempt or their visual equivalent, including (but not limited to)
  - "I hate"
  - "I don't like"
  - "X are the worst"

- Expressions of disgust or their visual equivalent, including (but not limited to)
  - "Gross"
  - "Vile"
  - "Disgusting"

- Cursing at a person or group of people who share protected characteristics."

In particular, it seems that his utterance fell under the second bullet point, “Expressions of contempt or their visual equivalent, including (but not limited to)” “I hate”, “I don’t like”, and “X are the worst”. Of course, a banned user would not know this, because the notification they receive gives no explanation of why they have been “jailed”, as we will also see below (Image 1). Without further ado, then, let us proceed to Joseph’s series of bans in late 2018, and this group’s interactions regarding these bans.

Group stancetaking toward Facebook’s Hate Speech moderation practices

Calling out Facebook’s poor judgment in the moderation of hate speech was a “pastime” for Joseph and his activist friends. It had become somewhat of a game - albeit an ultimately serious one - wherein Joseph kept challenging Facebook’s moderation practices and policies regarding “protected categories” (see above), whereupon he was repeatedly placed in “Facebook jail” for violating those policies. Subsequent to his bans, he and his group of friends would comment on Facebook’s censorship problems and inconsistencies, and take bets on when Joseph would get put back into jail for another violation.

In September 2018, many of us got a new Friend request from Joseph, under a different profile name. As the group were quite vigilant about fake accounts, Joseph took it upon himself to clarify, by posting from his new account to the wall of his primary - but now frozen - account. He shared a screenshot of the notification he had received from Facebook, that his primary account was locked for three days “because you previously posted something that didn’t follow our community standards.” (Image 1) They provided a citation of his violating statement below this announcement, which was: “Men are savages.” According to the moderation guidelines described above, “men” fall under a protected category because the label falls into the category of gender and sex characteristics. For his post to have been in violation of Facebook’s community standards, either an algorithm flagged this token or someone had reported Joseph’s post to the Facebook moderators (Joseph was convinced that he had a nefarious Friend who was flagging his comments), and then a moderator must have reviewed it and decided that such a post merited punishment. Joseph wrote above his screenshot of the account suspension notification: “Because I keep getting PMs that I can’t reply to: yes, this is Joseph. No I’m not a hacker. // Yes, FB sucks.”

Image 1: Men are savages (Joseph, Personal, September 20, 2018)

This was only the beginning of Joseph’s challenge to this moderation rule. As he continued to test it, his Facebook bans got longer and longer, and variations on the phrase “Men are trash”, as it evolved into, became an oft-repeated joke among the group. Pointing out the seriousness of this game, however, Lucy commented on the issue in late 2018 while Joseph was “in Facebook jail” for a third time, which had earned him a nearly month-long ban: “Facebook needs to stop censoring activists… men aren’t a protected group since they reap the benefits of toxic masculinity. #Markdoyouhearme?” (Lucy, Personal, December 6, 2018). Samantha responded: “He hears you but doesn’t care because he’s a man and he’s fragile. He created the platform because of his fragile, toxic masculinity ego.” With these statements, Lucy and Samantha took collaborative stances toward each other - Samantha supported and reinforced Lucy’s message by pointing out that the whole reason Facebook ever got started was because of Zuckerberg’s “toxic masculinity”.

These collaborative stances coalesced around a message that Facebook was not honoring principles of free speech when it came to activists pointing out inequalities both in society and in Facebook’s moderation practices. This was thus a moral political stance (Zentz 2021) toward Facebook’s assessment of what constituted hate speech: was it just related to decontextualized and unrealistically equalized demographic categories of group membership? This seemed to be (at least one aspect of) Facebook’s stance on the matter. Were the policies implemented in favor of men, and particularly White men? This seemed, according to these activists, to be another aspect of Facebook’s stance on the matter. Or was it a clear attack, threatening imminent danger, on an already marginalized group? The latter needed lots of contextual information, and it did not seem to them like Facebook was considering any of it.

This was clearly a serious topic of conversation to be had, then, and Joseph engaged with the matter in this more serious tone during his second ban, when he authored a quite serious blog post on Medium, which he subsequently shared with his Friends on Facebook. In his post, he shared several screenshots of posts of his that had been banned, and posts by others that had not been banned but were, from his point of view, morally atrocious and deeply bigoted. He wrote in the Medium post:

"While my comment was meant to be funny, in the case of “Men are Savages” I was discussing the tendency for some men to not wash their hands after going to the bathroom. Or thought provoking, in the case of “Men are Trash”, while addressing toxic masculinity and the harassment of women. What they aren’t, is hate speech. Men suffer from enormous privilege in our society, we are not a disenfranchised group, we are the group doing the disenfranchising.

Now Facebook is free to disagree with my definitions, but when they allow pages, that portray African Americans in horrible caricature, or make disparaging comments about other religions, they seem to find that speech to “not be against their community standards”. [he posted examples of both of these, which had not been removed from the platform]

Not against their community standards? What community are they talking about? The rich and diverse community of the US? One that thrives on different people, from different backgrounds and religions, contributing to society? One that Facebook utilizes to hire talent to do it’s programming.

No, they are talking about an “white men” only community. A misogynistic, bigoted community that supports the unbridled speech of hate mongers, while silencing the voices of marginalized groups, for profit." (Joseph, Medium post, November 20, 2018)

In his commentary, Joseph made clear his opinion that Facebook was operating not just under false equivalencies based on the notion of “protected categories”, but that the organization’s leadership was entirely hypocritical, deeming it appropriate to ban a phrase like “Men are trash” but not to ban depictions of Black people as primitive and enslaved, or Muslim women as akin to “dead meat”.

A week before Joseph’s release from his third and nearly month-long ban, Lucy returned to the “game” frame of the protest, teasingly posting a long series of gifs related to the topic of “freedom” or being freed from jail/confinement (Image 2). I reproduce just the first two here:

Image 2: Free from FB jail (Lucy, Personal, December 22, 2018)

A week later, as soon as Joseph was “freed” (that is, his account was unblocked so that he could use it again), Lucy wrote, “Joseph is BACK!!” (Lucy, Personal, December 29, 2018). In the first comment below Lucy’s post, Samantha wrote: “So, who’s starting the ‘Joseph got banned again’ pool? I’ll take January 3rd.” After several sardonic posts from various Friends, Joseph replied: “Your faith in me is astounding. lol” A short gif thread ensued to continue the game.

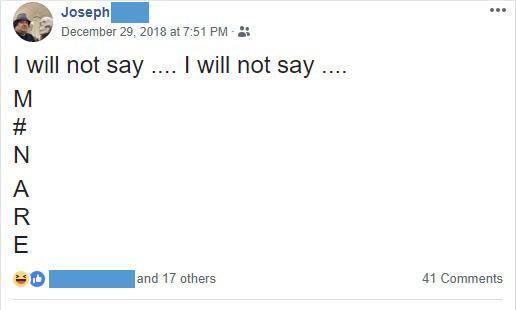

It was indeed that very day that Joseph went right back at it, writing the phrase in a post written from top to bottom, where each letter occupied a new line, presumably to “trick” the flagging system (Image 3).

Image 3: I will not say (Joseph, Personal, December 29, 2018)

By this time, a large group of friends were in on the joke. Among the 41 comments posted below his post in the response thread, most were gifs of images of trashcans and dumpsters (that is, “trash”).

Interactions such as those above can be understood as moments of “collaborative play” (Coates 2007, Zentz 2021) in which the group took stances toward each other’s comments that construed them as a “with”. The “with” that they were constructing, I argue, was a shared moral political stance regarding false equivalencies (at best) on Facebook’s part that made an attack on “men” equal to an attack on “women”, or “Black people”, and so on. As the group took collaborative stances toward each other in order to convey this larger moral political stance, they built on each other’s humorous comments and gifs, frequently “laughing in the same way” (Jefferson et al. 1978 as cited in Coates 2007, p. 44). Their shared laughter, though, as I have demonstrated, sat atop a quite serious message in which they made a claim that Facebook’s censorship priorities were insensitively decontextualized - excessively ignorant of the power dynamics at play in insulting “men” as opposed to other minoritized identity characteristics, and also excessively ignorant of the critical - but clearly not dangerous - intent of Joseph’s statements and their uptake by his interlocutors.

When is Hate Speech Dangerous Speech?

On the website of The Dangerous Speech Project, the authors lay out a quite useful distinction between static, definition-based, often false equivalencies such as the one shown above and a specific focus on speech that is designed to and/or has the actual effect of inciting physical and material violence against a group of people. The specific definition that the authors provide is:

“Any form of expression (e.g. speech, text, or images) that can increase the risk that its audience will condone or commit violence against members of another group.” (italics in original, Benesch et al. 2021)

They go on to clarify that dangerous speech is “aimed at groups”, it “promotes fear”, it is “often false”, and it “harms indirectly”, meaning that it is constituted by a set of speech acts that motivates its audience “to think and act against members of the group in question.”

Harking back to Speech Act Theory, in particular the notion of perlocutionary force or an understanding of how audiences take up an utterance, Benesch et al. (2021) write that depending on contextual and individual circumstances, the exact same word can be innocuous, informative, or dangerous. Thus, to insert word and collocational data into algorithms in order to screen for “hate speech” can be seriously problematic, flagging tons of “false positives”, or utterances where registered words or phrases are caught even though they are contextually benign, and also tons of “false negatives”, where much dangerous speech is performed without any registered words whatsoever (see also Gillespie 2018).
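
To make the mechanics of this problem concrete, the following is a minimal sketch of context-blind screening by registered words and collocations. It is an illustration only, not a representation of Facebook’s actual system: the pattern list, the function name `is_flagged`, and the example posts are all hypothetical and were invented for this purpose.

```python
import re

# Hypothetical "registered" collocations, invented for illustration only.
# They loosely echo the Tier 2 examples quoted earlier ("I hate", "X are the worst").
REGISTERED = [
    re.compile(r"\b\w+ are (?:trash|savages|the worst)\b", re.IGNORECASE),
    re.compile(r"\bI hate\b", re.IGNORECASE),
]


def is_flagged(post: str) -> bool:
    """Return True if any registered pattern occurs anywhere in the post.

    The check sees only surface strings; it has no access to who is speaking,
    to whom, with what intent, or how the audience takes the utterance up.
    """
    return any(pattern.search(post) for pattern in REGISTERED)


# Each entry pairs a post with a note on how a human reader might take it in context.
EXAMPLES = [
    ("Men are trash", "joke among friends"),
    ("He wrote 'they are the worst' and I reported it", "quoting in order to condemn"),
    ("You all know what needs to happen to the family next door", "possible incitement"),
]

for post, context in EXAMPLES:
    print(f"flagged={is_flagged(post)!s:<5} | {post} | {context}")
```

In this sketch the first two posts are flagged even though a reader with context would likely treat them as benign (false positives), while the third passes untouched because it contains no registered words, however it might be taken up by its audience (a false negative).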

Joseph’s aim in leading a collective performance that revolved around his getting banned over and over was, in so many words, to point out a false positive, and an egregious one considering the examples of posts he provided that had not been removed. Facebook was flagging the phrase “Men are trash” when, first, it was benign speech, written originally in jest out of disgust with men who do not wash their hands after going to the bathroom, and later it was written in jest but with a metadiscursive point to be made regarding Facebook’s incoherent moderation policies. Joseph and his peers intended to show that “men” did not even constitute a precarious group, since they benefit from a significant amount of privilege in comparison to women and non-cisgender people, both in the US and beyond. Joseph’s argument, then, was that Facebook had a moderation problem, one that stemmed from its disregard for the idea that an utterance of “hate” toward a privileged class of people cannot be viewed, and should not be treated, as a threatening gesture in the way that declarations of hatred toward marginalized classes of people are. Clearly, under Joseph’s interpretation of his utterances and the perlocutionary forces that they had on his Friends, including myself, such an utterance was very far from qualifying as dangerous speech in any sense of the word. His counterexamples, on the other hand, provided statements that were built on historical and institutionalized prejudices, marginalizations, and atrocities and were thus much more dangerous to allow to circulate.

Joseph’s point thus brings up several problems with Facebook’s post facto, algorithmically based, and largely decontextualized policies regarding hate speech. First, Facebook moderation policy makers have led their moderation teams to decontextualize “hate speech” in inappropriate ways, treating utterances of disdain toward privileged groups of people as in principle the exact same thing as utterances of disdain toward marginalized people. Second, they have placed this already flawed formula into an automated flagging mechanism that cannot understand meaning and context; it can only recognize the code-based elements that its programmers have entered into it and identified as “hate speech”. Beyond this, leadership seem to have trained their content moderators simply to think like the algorithms, such that an AI flagging of a sarcastically written “Men are trash” or “Men are savages” - which one would think would then go to a moderator to be read in context and thus given a “stet” pass - is instead read by a moderator in the exact same spirit in which the AI itself processed it, that is, without interpretation. Likewise, in accordance with the examples given above, moderators would be taught to treat “Black children are trash” as not censorable while “Men are trash” would be so.

More free speech? When and for whom?

David Graeber (2004, p. 432) points out that:

“As any political anthropologist can tell you, the most important form of political power is not the power to win a contest, but the power to define the rules of the game; not the power to win an argument, but the power to define what the argument is about.”

The power dynamics at play when an author makes a slur toward classes of people that benefit from privilege (in fact not a slur at all, but at its most powerful just an angered insult) must be considered, particularly in comparison to the depiction of Black bodies as enslaved or “naturally” criminally suspect, women’s bodies as objects or meat, and so on. Activist Malkia Cyril pointed out in a Facebook Hard Questions panel discussion on the topics of hate speech and free speech that this is likely a matter of “equity” instead of poorly considered “equality” when it comes to assessing users’ statements on the platform:

“It’s not about speech, it’s about power. People are actually dying on the streets because of the speech that’s taking place online. So let me be clear about it, it’s not a question of whether an individual has a right to say whatever’s on their mind. That’s a strict US constitutionalist view of the question of free speech, that’s not my view of free speech. […] What I care about is whether or not anti-Semitic crimes are going up as a direct result of people on a platform allowing individuals to organize criminal activity online.” (Malkia Cyril in Facebook’s 2018 Hard Questions: The Line Between Hate and Debate)

In this article I have addressed in brief some of the ways in which a small group of progressive activists on Facebook used the social media platform to respond to the shaping of public discourse as it relates to hotly contested ideas around hate speech. The group attempted to shape minds and opinions, among themselves and onlookers, with respect to circumstances that they had little control over: moderation policies that were decided mostly unilaterally and that did not fit within their moral political and activist frameworks. In so doing, the group formulated and reinforced an identity related to their beliefs about free speech, inequality, and their shared progressive value system. Echoing the criticisms published in a continuing stream of news articles (Lima 2021, Marantz 2021) pointing out the gaping flaws in Facebook’s moderation practices and their corporate leadership’s moral and political choices regarding the latter, the group addressed, in a localized manner, the problems of unilateral power that the corporate leaders of social media platforms such as Facebook have not been forced to come to terms with in more democratic ways. In this sense, if these problems are not checked through democratic and more thoroughly considered social scientific means, these platforms could well, as Zuboff (2019) and Persily & Tucker (2020) warn, compromise the very institutions that have given them such vibrant life.

References

Angwin, J. & Grassegger, H. (2017). Facebook’s Secret Censorship Rules Protect White Men From Hate Speech But Not Black Children. ProPublica. Retrieved 11/16/2021

Brodsky, S. (2018). Everything to know about Facemash, the site Zuckerberg created in college to rank ‘hot’ women. Metro. Retrieved 11/16/2021

Bucher, T. (2021). Facebook. Cambridge, UK: Polity Press.

Coates, J. (2007). Talk in a play frame: More on laughter and intimacy. Journal of Pragmatics 39: 29-49.

Dennis, J. (2019). Beyond Slacktivism: Digital Participation on Social Media. Cham, Switzerland: Palgrave Macmillan.

Facebook. (2018). Hard Questions: The Line Between Hate and Debate. Facebook Newsroom. Retrieved 11/12/2021

Gillespie, T. (2018). Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media. New Haven: Yale University Press. https://doi.org/10.12987/9780300235029

Graeber, D. (2004). Direct Action: An Ethnography. Chico, CA: AK Press.

Grimmelmann, J. (2015). The virtues of moderation. Yale Journal of Law & Technology 42: np.

Huddleston, T. (2017). Facebook’s hate speech rules make ‘White Men’ a protected group. Fortune. Retrieved 11/16/2021

Jefferson, G., Sacks, H., and Schegloff, E. (1978). Notes on laughter in the pursuit of intimacy. In: J. Button & J.R.E. Lee (eds) Talk and Social Organisation. Clevedon: Multilingual Matters, pp. 152-205.

Lima, C. (2021). A whistleblower’s power: Key takeaways from the Facebook Papers. The Washington Post. Retrieved 11/16/2021

Marantz, A. (2021). Why Facebook can’t fix itself. The New Yorker. Retrieved 11/16/2021

Myers-West, S. (2018). Censored, suspended, shadowbanned: User interpretations of content moderation on social media platforms. New Media & Society 20(11): 4366-4383.

Oremus, W., Alcantara, C., Merrill, J., & Galocha, A. (2021). How Facebook shapes your feed. Retrieved 11/16/2021

Persily, N. and Tucker, J. (2020). Introduction. In: N. Persily and J. Tucker (eds) Social Media and Democracy: The State of the Field, Prospects for Reform. New York: Cambridge University Press, pp. 1-9.

Reinstein, J. (2018). Mark Zuckerberg Tells Congress: No, Facebook Wasn't Invented To Rank Hot Girls, That Was My Other Website. BuzzFeed. Retrieved 11/16/2021

Roberts, S. (2014). Behind the Screen: The Hidden Digital Labor of Commercial Content Moderation. PhD Thesis, University of Illinois at Urbana-Champaign.

The Dangerous Speech Project (2021). Dangerous Speech: A Practical Guide. Retrieved 11/16/2021

Treré, E. (2019). Hybrid Media Activism: Ecologies, Imaginaries, Algorithms. New York: Routledge.

Washington Post (2018). Facebook reveals its censorship guidelines for the first time - 27 pages of them. LA Times. Retrieved 11/16/2021

Zentz, L. (2021). Narrating Stance, Morality, and Political Identity: Building a Movement on Facebook. Routledge.

Zuboff, S. (2019). The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. New York: Public Affairs.