Dangers of being trapped in an echo chamber

There was a time before social media, something most of Gen Z cannot imagine. There was no Twitter, Facebook or any other social platform that provided news. Media companies were the gatekeepers of the news, which was shared through television and newspapers. Nowadays, many people get their news from social media. This, however, can create an echo chamber, which can have many effects. But what exactly is an echo chamber, and what does it have to do with algorithms or fake news? And is there a solution to these echo chambers?

The echo chamber phenomenon has gained a lot of public and academic attention. It occurs when a heterogeneous population segregates into network neighbourhoods that consist mostly of like-minded individuals. This is echo chamber formation. An echo chamber is a place where people who are on the same wavelength interact almost solely with one another. This repeated contact can strengthen or intensify ideas and views. Echo chambers exist both online and offline and can affect people's opinions and behaviour. They can be seen as a threat to open and beneficial discourse. Although both online and offline echo chambers can cause problems, this article focuses solely on the online version.

Algorithms and the Daily Me

Back in the 1990s, technology specialist Nicholas Negroponte predicted the arrival of "the Daily Me". He suggested that people would no longer rely only on local newspapers, but would be able to design a communications package created just for them. If somebody only wanted to follow football or a specific writer, that would be possible. "The Daily Me" does not exist in that form, or at least not yet. Many Americans, however, now get much of their news from social media platforms, and Facebook is the most important platform in that respect. It has become central to how many people experience the world. When using Facebook, people see what they want to see. If two people with completely different political views compared their feeds, they would see two radically different Facebook pages (Sunstein, 2018).

It turns out that people do not need to build a "Daily Me" by themselves. One is already created, or is being created right now, for each of them, even without their knowledge. This is the age of the algorithm, and it supposedly knows a lot. An algorithm is a series of specific rules that are followed in order to solve a particular set of problems. An algorithmic culture arises when machines running on complex formulae take over what is traditionally seen as the work of culture: the sorting, classifying and hierarchising of people, but also of ideas, objects and places (Granieri, 2018). Algorithms on social media are part of this algorithmic culture; as they learn about a user, they sort the posts in that user's feed by relevance.
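To make the idea of sorting a feed by relevance concrete, here is a minimal sketch in Python. It is not how Facebook or any real platform ranks posts; the topic labels, the scoring rule (count how often the user liked each topic) and the function names `learn_interests`, `relevance` and `rank_feed` are all assumptions made for illustration.

```python
from collections import Counter


def learn_interests(liked_posts):
    """Count how often each topic appears in posts the user has liked."""
    interests = Counter()
    for post in liked_posts:
        interests.update(post["topics"])
    return interests


def relevance(post, interests):
    """Score a post by summing the user's interest in each of its topics."""
    return sum(interests[topic] for topic in post["topics"])


def rank_feed(candidates, interests):
    """Return candidate posts ordered from most to least relevant."""
    return sorted(candidates, key=lambda post: relevance(post, interests), reverse=True)


# Hypothetical history: this user mostly likes football posts.
liked = [
    {"id": 1, "topics": ["football"]},
    {"id": 2, "topics": ["football", "transfers"]},
    {"id": 3, "topics": ["environment"]},
]

# Hypothetical posts competing for a place in the feed.
candidates = [
    {"id": 10, "topics": ["national politics"]},
    {"id": 11, "topics": ["football"]},
    {"id": 12, "topics": ["environment", "football"]},
]

interests = learn_interests(liked)
feed = rank_feed(candidates, interests)
print([post["id"] for post in feed])  # [12, 11, 10]
```

Even in this toy version, the post about national politics ends up at the bottom of the feed simply because the user never liked that topic before. The more the user clicks on what is shown first, the stronger that ordering becomes.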

Figure 1. Daily Me, a communications package created especially for the user

The algorithm creates something similar to a Daily Me, something personalised, in seconds. It knows what sort of music, movies or books users like, but also their political views. For instance, Facebook probably knows someone's political stance and can pass it on to others. If someone likes specific ideas but not others, it is not difficult to build a political profile. Machine learning can be, and is, used to draw fine distinctions. It sorts not only by political view, right or left, but also by the topics someone is passionate about, such as equality, the environment, security, discrimination or racism. All of this can be incredibly valuable for other parties such as advertisers and fund-raisers, but also political extremists (Sunstein, 2018).

The hashtag is also an example of instant personalisation, as it is a fast and straightforward sorting mechanism. It is as simple as searching for #feminism, and posts of interest will appear because they carry that specific hashtag. The hashtag was created to help people find posts, tweets and information. It is fast and simple, and with it the Daily Me becomes more of a MeThisHour. When people post or tweet using specific hashtags, they promote certain ideas, perspectives, persons, products, facts and eventually actions. Many individuals support these developments, as they can increase fun, ease, amusement and learning. Not everyone wants to read news about national politics on their screen, so why not change it? It seems like a logical thing to do. However, this architecture of control has a severe downside, one that raises questions about democracy, freedom and self-government. Social media allows people to make their own feeds and practically live in them, which can cause serious problems (Sunstein, 2018). And so, hello echo chambers.
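The hashtag search described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: posts are plain strings, a hashtag is a `#` followed by letters, digits or underscores, and matching ignores case. The helper names `hashtags` and `search` are made up for this example and do not belong to any platform's API.

```python
import re

# A hashtag is assumed to be '#' followed by word characters.
HASHTAG = re.compile(r"#(\w+)")


def hashtags(text):
    """Return the set of hashtags in a post, lower-cased and without '#'."""
    return {tag.lower() for tag in HASHTAG.findall(text)}


def search(posts, tag):
    """Keep only the posts that carry the requested hashtag."""
    wanted = tag.lstrip("#").lower()
    return [post for post in posts if wanted in hashtags(post)]


# Hypothetical posts.
posts = [
    "Equal pay is overdue #Feminism #equality",
    "Match report from last night #football",
    "Reading list for the weekend #feminism",
]

results = search(posts, "#feminism")
for post in results:
    print(post)
```

Everything without the chosen hashtag, here the football post, simply never reaches the reader. That is what makes the hashtag such a quick way to build a MeThisHour.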

Echo chambers

An echo chamber is a metaphor that captures how messages are amplified and reverberate through opinion media. It creates a common frame of reference and feedback loops for those who read or watch these media outlets. In these chambers, the echoing is at times literal: media outlets in a specific echo chamber quote each other and, most importantly, legitimise one another (Jamieson & Cappella, 2010). This creates an environment where only certain statements are heard. People surround themselves with others who share their views and opinions. Online, the media a person sees form a kind of box that leans in a specific direction, not necessarily right or left, but towards the user. It is a bounded, enclosed media space that can magnify the messages within it and insulate them from rebuttal. South Park phrases it beautifully: a personal safe space.

When an echo chamber is filled with misleading or fake news, it can create all sorts of problems.

'In My Safe Space' is a song from South Park that captures the essence of an echo chamber. The lyrics begin with 'everyone likes me and thinks I'm great in my safe space. People don't judge me'. This refers to the like-minded people in an echo chamber. Other lines, such as 'there is a very select crowd in your safe space. People that support me and say nice things', also refer to individuals who think alike. Lines like 'if you don't like me, you are not allowed in my safe place' refer to people who either live in another echo chamber or do not live in one at all and can see issues from other points of view (Hsiang, 2018).

Figure 2. In my safe space, a song from South Park

As mentioned before, echo chambers can create all sorts of problems. When social media allows people to create their own feeds and live in them, it also enables providers to create personalised, gated communities for each specific user. People self-isolate, which can spread untruths and promote separation and fragmentation. Quite a few people do not want to live in an echo chamber. They want to learn about a whole range of topics from many points of view. They are open-minded and want to discover the truth. Others might actually like these personal safe spaces and want to live in them. They prefer to hear views that are like their own and do not like to be challenged (Sunstein, 2018). Their view is shaped by the echo chamber and primed to reject any evidence that might contradict their beliefs. Their trust is concentrated inside the chamber, and they distrust mainstream sources.

However, this is where the line between truth and untruth can become blurry. Before social media, media companies provided a filter as the gatekeepers of the news. Has that filter disappeared? Yes and no. The days when media companies were the sole filter are long gone. Nowadays, the filter is the people themselves. But since they were never trained to filter news, algorithms, and thus echo chambers, can amplify what slips through, for instance the impact of fake news.

Fake news

Fake news has been around for quite some time. Leaders in Nazi Germany already used a comparable term, Lügenpresse ('lying press'), to accuse the media of lying. Fake news is nothing new, but it has regained much attention since the 2016 presidential election in the United States. The term has many definitions and is seen as a complicated concept. Some academics use the terms fabricated news or junk news instead. Fabricated news is news that looks real and authentic but is not based on reality. Junk news is a broader term: it covers all kinds of propaganda and conspiratorial political news and information (Quandt et al., 2019).

Other academics still use the term fake news, but narrow it down to information that imitates professional media outlets (pseudo-press). This fake news can look very real but be very misleading. Sometimes, social media platforms can function as mediators. When a user clicks on a link, they can get a warning that the link is possibly unsafe. Platforms also add warnings and labels when they think a particular news piece is manipulated or contains untruths. In this way they try to prevent the user from engaging with something that can be harmful (Quandt et al., 2019).

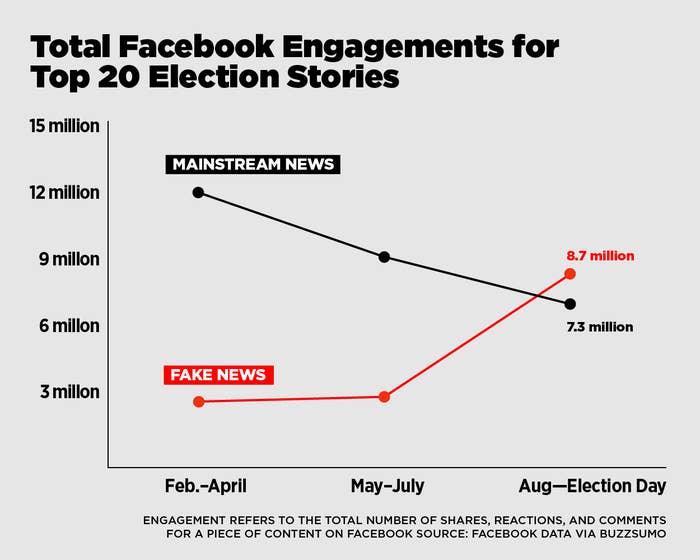

Since the arrival of the web, the world has become smaller, and people can find like-minded people more quickly and easily. Access to information seems unlimited, as a Google search is often only one click away. This has both pros and cons. Yes, finding people who share views and opinions is just one click away, and this is great, because people can engage in conversations. However, since it is so easy to find these people, it is also much easier to go down a rabbit hole that echoes one-sided opinions, because the web also makes it possible to share fake content, half-truths and untruths. Sometimes people even engage more with fake news pieces than with real and legitimate news outlets. This could be seen during the 2016 elections in the United States.

Figure 3. An analysis showing more engagement with fake news than with mainstream news during the 2016 U.S. elections

When fake news circulates within an echo chamber, it is difficult for people to see the truth. If fake media outlets quote and legitimise one another, how can people within that chamber still know what is real? Moreover, this is not just about small groups of people. The digital media era has enabled media outlets to reach hundreds of thousands of people with only one click. And when users fall for this fake news and share it on their feed, they immediately become perpetrators themselves, as they share it with people who trust them. This creates a perfect feedback loop (Solon, 2018).

Solutions

So how can these feedback loops and echo chambers be broken? Within these echo chambers, people are not exposed to other views or opinions, so the most obvious solution would be exposure: creating more public forums so that people have the chance to run into other views. However, in this digital age, people also have to be more critical about what they read and need digital literacy. They need to understand that algorithms and echo chambers can help them find like-minded people, but that the same mechanism can work against them. Social media platforms acting as mediators (van Dijck, 2013) is another option. They can try to deal with fake news by adding warnings and labels when they think a news piece is manipulated or filled with misinformation.

On balance, echo chambers are a phenomenon that has gained more and more attention over the years. An echo chamber is a safe place where people find like-minded others who share their views and beliefs. These chambers are reinforced by algorithms that know what users want. Algorithms create something like a Daily Me, but quicker. When an echo chamber is filled with misleading or fake news, it can create all sorts of problems. And because the web has made the world smaller and more accessible, fake news is more likely to spread. One way to limit the consequences of echo chambers is to create more public platforms so people can run into more views than just their own. If the filter that the algorithms provide were reduced, people would be exposed to more new and diverse opinions. But even with these solutions, it is up to people themselves what they want to believe.

References

van Dijck, J. (2013). The Culture of Connectivity: A Critical History of Social Media. Oxford University Press.

Hsiang, D. (2018, 31 March). Blame Machine Learning for Your Echo Chamber - Derek Hsiang. Medium.

Jamieson, K. H., & Cappella, J. N. (2010). Echo Chamber: Rush Limbaugh and the Conservative Media Establishment (Illustrated edition). Oxford University Press.

Quandt, T., Frischlich, L., Boberg, S., & Schatto‐Eckrodt, T. (2019). Fake News. The International Encyclopedia of Journalism Studies, 1–6.

Sunstein, C. R. (2018). #Republic: Divided Democracy in the Age of Social Media (Updated edition). Princeton University Press.

Tufekci, Z. (2015). Algorithmic harms beyond Facebook and Google: Emergent challenges of computational agency. Colorado Technology Law Journal, 13, 203–218.