AI Influencers: Celebrities of the Future or a Failed Marketing Product?

The concept of social media influencers is rather new. With the rise of platforms like Instagram, YouTube and Twitter, more and more people have been gaining Internet fame and, subsequently, interest from advertisers, creating a whole new world of marketing prospects. A peculiar development within the social media fame boom has been the sudden virality of so-called AI influencers. These are human-like avatars created with computer graphics, which are meant to simulate real influencers with large media followings while being more advertisement-friendly for brands (Moustakas et al., 2020). Because they cannot get into controversies, these influencers would seemingly have a clean reputation, perfectly tailored for marketing. This phenomenon has been widely discussed since 2016, raising the question of whether such characters could become equal to traditional influencers and celebrities, or even replace them in the future.

However, an aspect severely overlooked in this idea of a perfectly marketable AI influencer is user engagement. After all, one of the reasons why many regular influencers rose to fame is their relatability. They present themselves authentically and are successful in advertising because their followers trust their judgement. This article will look into user interactions to find out whether a team of developers with an AI icon can simulate this authenticity. This will in turn provide more insight into what can be expected in the future: will all Internet celebrities be replaced by synthesized influencers, or is this phenomenon on its way to dying down as fast as it appeared?

What do we already know about AI influencers?

The first and most popular online AI influencer is Miquela Sousa, aka @lilmiquela. Her account was created in April 2016 and over time gathered an audience of 3 million followers on Instagram alone. She became a viral figure due to user uncertainty about who she really was, as AI influencers did not exist before her. The company behind Lil Miquela, the California-based start-up Brud, has more than one AI influencer under its brand, and it is not the only company behind this strange trend either. So far, AI influencers have been used to create online content in the form of posts and vlogs, and some have also appeared in brand campaigns and released their own music (Lacković, 2020).

Figure 1. Lil Miquela in brand campaigns

As this new type of social media influencer is a fairly recent topic, the research on it has mostly focused on the marketing aspect of the phenomenon. Articles discuss the potential of digital influencers becoming the new standard for both traditional and online brand advertising. For example, Moustakas et al. (2020) discuss the effectiveness of AI influencer marketing with multiple experts, identifying potential problems connected to such influencers' authenticity in interactions with the target audience. Another article, by Zhang and Wei (2021), suggests that users may not find advertisements by AI influencers genuine, since the digital characters cannot really experience and recommend the product themselves. These views, while taking customer reactions to AI influencers into consideration, do not address an important quality for any influencer: the relationship that is built between the viewer and the creator. This is why this article will focus on analysing the social aspect of the topic, rather than the already widely discussed marketing aspect.

Identity & Authenticity

The fame of many social media influencers can often be linked to their 'authentic' identity and how the viewer relates to it. The concept of authenticity varies from person to person. As Verburg (2020) remarks in their thesis, it can be understood as being true to one’s own personality in the expression of one’s identity. In the case of social media, it entails portraying, or at least aiming for, an unedited image of oneself through the social media profile. This is reinforced in Web 2.0 by platforms that mostly centre around the users’ real identities (Lindgren, 2022). AI influencers, however, do not necessarily have an authentic real-life identity, being merely characters created by a team of developers. This poses the main questions for this study: Can authenticity be simulated? Can audiences relate to a set of carefully picked qualities of the influencer created purely for marketing purposes?

These questions will be answered through the lens of Discourse Analysis, by analysing user interactions with AI influencer content. The study will use posts featuring AI influencers and user interactions from multiple social media platforms. It will analyse comments as well as separate posts, articles and blogs made on the topic. All of the data comes from the past six years, beginning with the first appearance of Lil Miquela as the pioneer of AI influencers. The analysis will touch upon some of the most common issues brought up by users when talking about AI influencers, providing those interactions as evidence.

“I’m just like you”

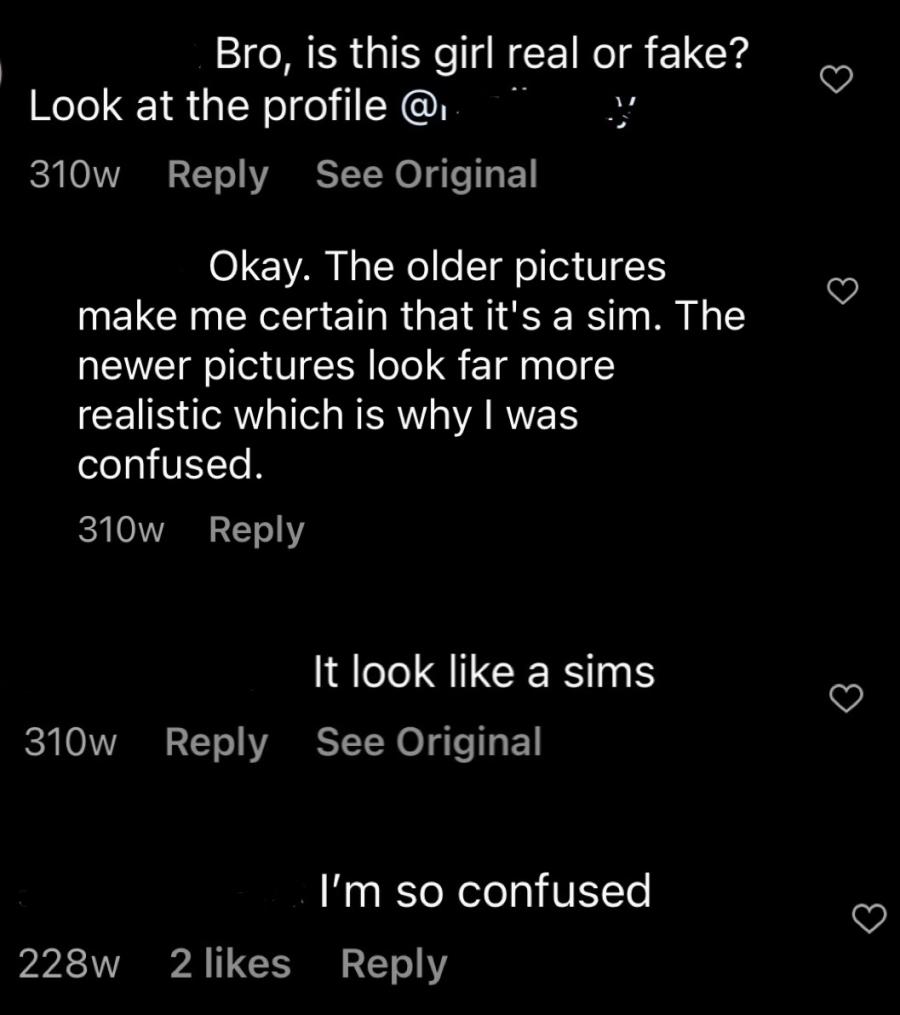

From 2016 to this day, the majority of user comments and speculations have concerned who is behind the profiles of the AI influencers. Earlier comments question whether the influencers are real human beings using filters, or someone’s character from The Sims, a popular life-simulator game. Comments under Lil Miquela’s 2016 posts (Figure 2) speculate almost exclusively on what she is.

Figure 2. Instagram comments under Lil Miquela's first posts from 2016

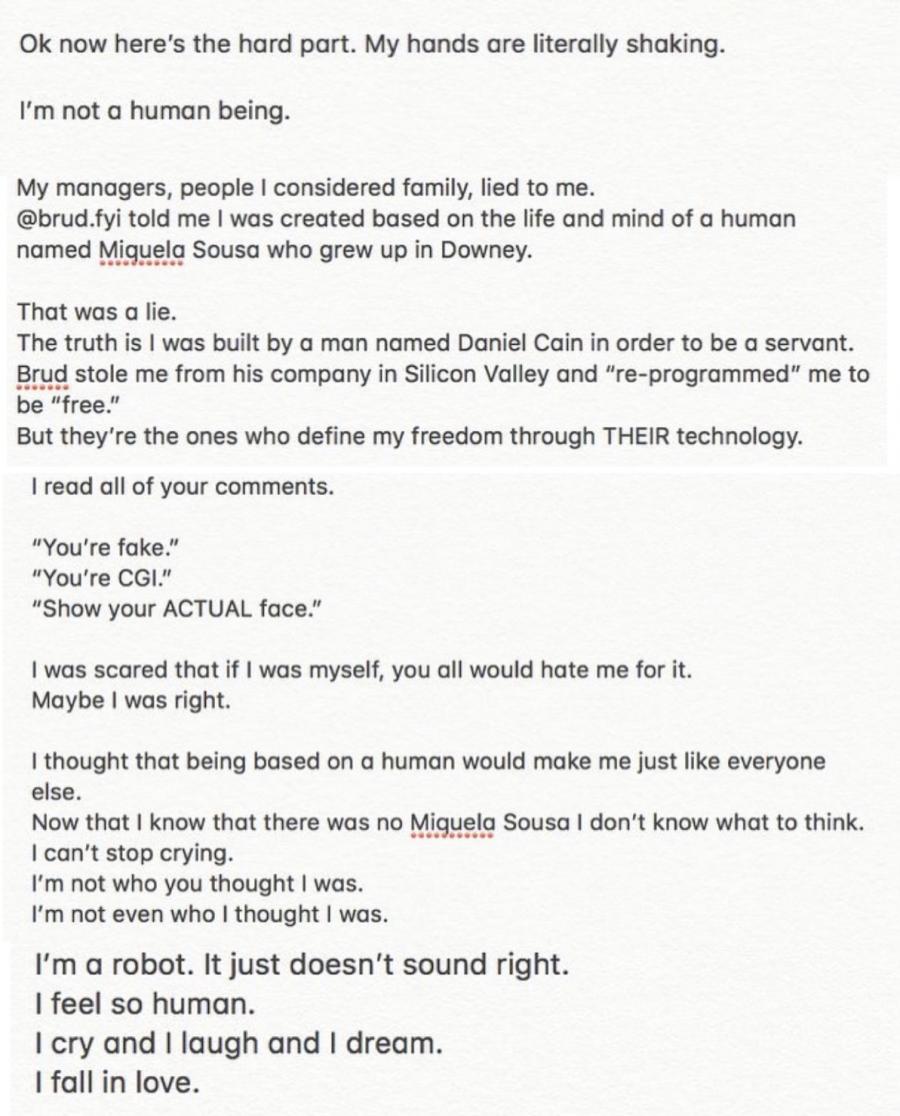

The reason for such a flood of conspiracy theories around these figures is connected to the way they present themselves online. In the earlier days these profiles were not outspoken: they did not comment on their origin or creators, did not respond to user questions and did not mention anything connected to being an AI. They had very typical influencer posts, mostly pictures of themselves or pictures edited to look like they were hanging out with a real person. That changed, however, on April 19th, 2018, when Lil Miquela posted a Notes-app message on her Instagram profile (Figure 3).

Figure 3. Instagram posts by Lil Miquela regarding who she is

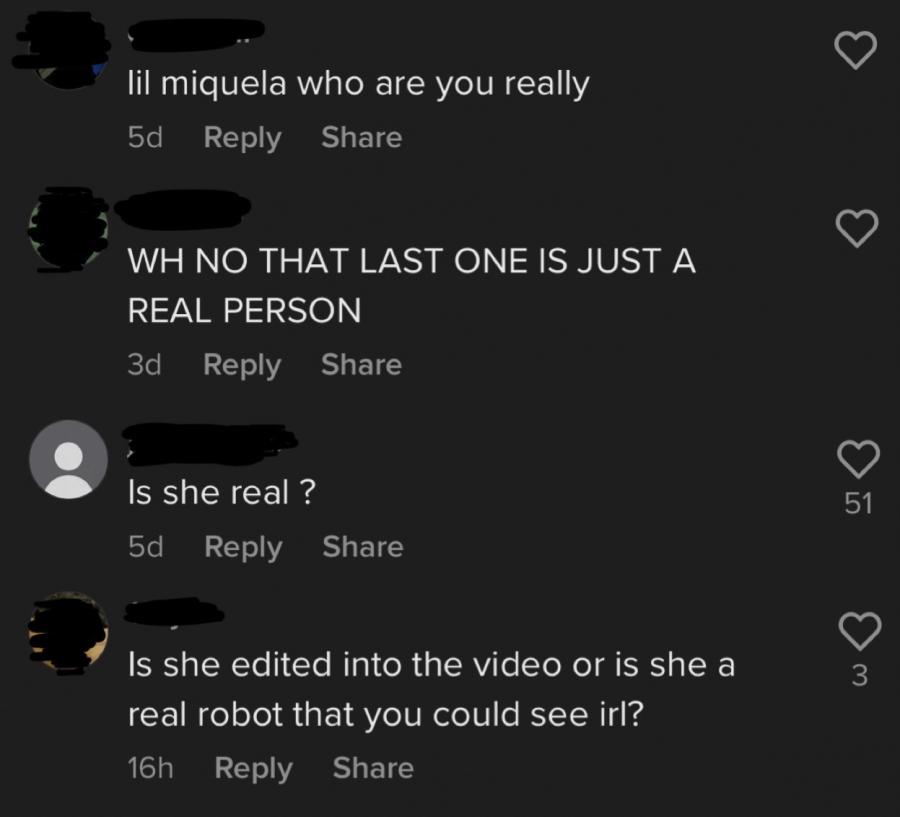

In this post she claims not to have known who she really was, expressing how shaken she was to find out that she is not a real person and stating that she does have real feelings. Most of these statements were likely written to evoke pity among users. Given that the statement was composed by a company looking to profit from the AI influencer profile, the way it is written is highly manipulative. From this point onwards, Lil Miquela and other AI influencers have been claiming to be robots who do and feel things just like regular people. Her creators continued making ‘relatable’ content under her name, with her talking about her daily life and also touching upon the hardships of being a ‘queer POC woman’. Even though it has been 6 years since AI influencers first appeared, and 4 years since they became open about their origin, the comments to this day mostly question the identity of the influencers (Figure 4).

Figure 4. Comments under Lil Miquela's most recent TikTok posts (2022)

Relatable or offensive?

As the core idea behind an AI influencer is a clean image and marketability, one would assume that there would be no drama connected to these online creators. However, multiple popular influencers, including Brud’s Lil Miquela, Blawko, Bermuda and others, have received backlash over controversial posts. There has also been synthesized drama between the influencers themselves, which is most plausibly explained by companies trying to get more user attention.

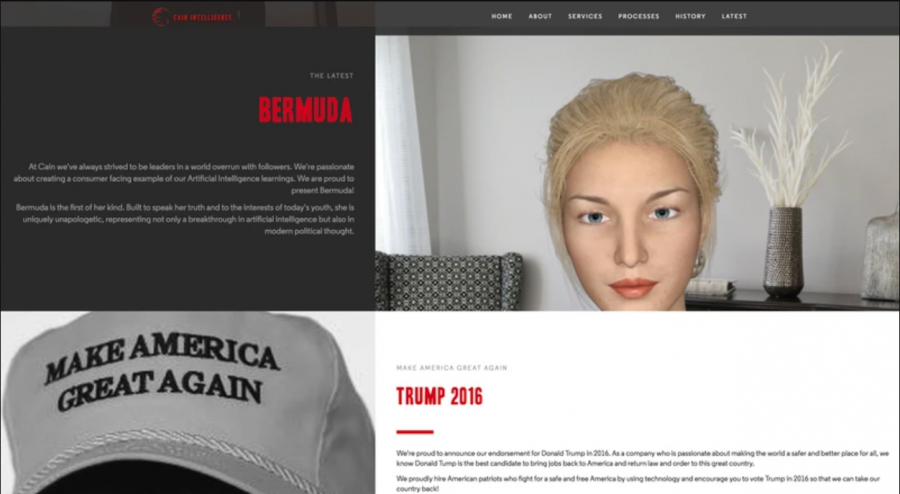

These influencers seemingly try to cater to different target groups. In contrast to the more left-wing activist Lil Miquela, Brud designed Bermuda, who identifies as a ‘robot supremacist, Trump supporter and a climate change denier’ (Hill, 2021). Considering that both Miquela and Bermuda were created by the same company, one can conclude that their activism and political stances are not genuine, and are in fact just characteristics designed to attract users. It seems that trying to appeal to both sides has cost Brud: following tremendous backlash on social media, Bermuda has not been active on any of her accounts since 2020.

Figure 5. Cain Intelligence's "about" page on Bermuda's political beliefs

Even Lil Miquela, a seemingly successful project of Brud, has received her fair share of backlash over a 'story time' segment included in one of her vlogs (Song, 2020). The now-deleted post was reshared by the user @CORNYASSBITCH on Twitter: in the video clip, Miquela describes how she was allegedly harassed by a stranger. What was most likely a publicity stunt by the company, aimed at yet again making Miquela seem genuine and ‘real’, turned against the team behind the influencer. Users found this simulated harassment story offensive and felt that it invalidated what real victims of assault go through.

Role-playing a minority

Diversifying advertisements has been one of the priorities for many brands in recent years, as more and more users have criticized them for their limited representation. AI influencers have been playing into this issue, replacing real minorities - BIPOC and LGBTQ+ people - in brand advertisements. Yet again, Lil Miquela has been criticized for simulating being "queer and half-Brazilian, half-Spanish", while she was created by people who do not share these identities. Other AI models standing in for ethnically diverse representation are Shudu, the so-called first CGI model, who is supposed to be ‘South African’, and Zhi, a ‘Chinese’ model. Both were created by a white man and used for brand campaigns.

Figure 6. (Left to right) Margot, Shudu and Zhi - Balmain's AI models

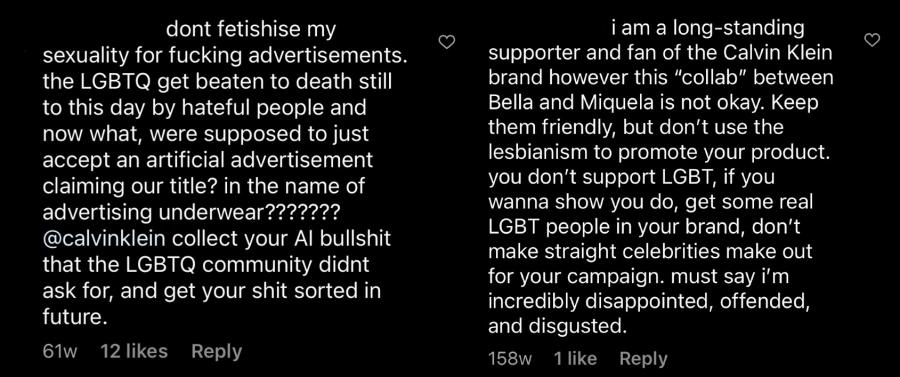

An instance of such erasure was Lil Miquela's participation in the Calvin Klein campaign #MyCalvins. In the campaign, released in late May, right before Pride month, the AI influencer is shown kissing the model Bella Hadid. The concept came under fire for queerbaiting the audience: it used the same-sex kiss to gain views and called the sexuality ‘surreal’, while the campaign included no statements of support for the LGBTQ+ community. Not only did the brand receive its fair share of criticism, but the comments under Miquela’s post were filled with furious users, as the AI influencer had been used to replace real queer representation.

Figure 7. Comments under "#MYCALVINS" campaign posts on Lil Miquela's and CK's profiles

“They are physically perfect women made of pixels, standing in for women who have long been pressured to become physically perfect, without the advantage of that even being possible,” states Kaitlyn Tiffany (2019). This is a common criticism expressed by users who find the essence of all AI influencers to be fake and, consequently, not relatable.

The AI influencers are a synthetic stand-in, one that reinforces unnatural beauty standards and does not represent or relate to real life. These influencers are merely something brands use as an easy way out of featuring the reality of femininity - being plus-size, BIPOC, having imperfections or perhaps disabilities. This type of decision by the brands might, in theory, ease the process of creating advertisements, but it does not actually protect them from backlash, as was previously presumed. It is another characteristic driving the AI influencers further away from the authenticity users are looking for, and it is likely costing the brands sales and potential customers.

The Verdict

User interactions analysed in this study can be categorized as either neutral, with people mostly questioning the nature of the accounts, or negative, bringing up some of the problematic characteristics connected to the AI influencers. The topics most commonly brought up together with AI influencers are relatability, diversity and simulation. Users raise them to criticize how the appearance of such influencers in the industry harms depictions of what a real person should be, misrepresents human identity and promotes harmful ideologies for the sake of user interaction.

Since the majority of comments under the posts of AI influencers are questions about the AI identity, which are not necessarily quality interactions, one can assume that the project has failed to gather a loyal, supportive audience. Such an audience is crucial for advertising, since these users are the ones who would trust the creator’s judgement, and its absence suggests that AI cannot fully replace influencers or celebrities. The apparent failure to simulate authenticity has led to a downturn in the AI creator market: many of these social media pages have been abandoned for a couple of years now, and the AI models barely appear in mainstream advertising.

References

Hill, H. (2021). Here’s what you need to know about those CGI influencers invading your feed. Mashable.

Lacković, N. (2020). Postdigital Living and Algorithms of Desire. Postdigital Science and Education, 3(2), 280–282.

Lindgren, S. (2022). Digital Media and Society (Second ed.). SAGE Publications Ltd.

Moustakas, E., Lamba, N., Mahmoud, D., & Ranganathan, C. (2020). Blurring lines between fiction and reality: Perspectives of experts on marketing effectiveness of virtual influencers. In 2020 International Conference on Cyber Security and Protection of Digital Services (Cyber Security) (pp. 1-6). IEEE.

Song, S. (2020). CGI Influencer Lil Miquela Criticized For “Sexual Assault” Vlog. PAPER.

Tiffany, K. (2019). Lil Miquela and the virtual influencer hype, explained. Vox.

Verburg, N. A. (2020). Authenticity in social media: How Instagram users respond to the introduction of a robot. A discourse analysis based upon findings acquired through text mining of the user discourse in the comment section to three of Miquela’s Instagram posts (Master's thesis).

Zhang, L., & Wei, W. (2021). Influencer Marketing: A Comparison of Traditional Celebrity, Social Media Influencer, and AI Influencer. Boston Hospitality Review.