Data voids: What are they and do they threaten democracy?

Data voids can be a major problem for search platforms such as Google, Bing, and YouTube. Media manipulators exploit them to spread hate, racism, fake news, and incitement to violence. False information encountered online can affect how citizens form opinions and vote. Data voids are thus not only a problem for internet companies but also a potential threat to democracies.

What is a data void?

Since the advent of the Internet, much has changed in our society. More and more people turn to search engines like Google, Bing, and YouTube as a primary source of information. Golebiewski and boyd (2019) explain in their report Data Voids: Where Missing Data Can Easily Be Exploited that “There are many search terms for which the available relevant data is limited, nonexistent, or deeply problematic. Recommender systems also struggle when there’s little available data to recommend. We call these low-quality data situations ‘data voids’” (p. 5). Data voids, consequently, can lead searchers to “low quality or low authority content because that’s the only content available” (p. 5). In this article, I will explain how data voids come to exist and what their implications are for our current media system and democracy.

The concept of data voids was coined by Golebiewski and boyd (2019), who explain that “These voids occur when obscure search queries have few results associated with them, making them ripe for exploitation by media manipulators with ideological, economic, or political agendas” (p. 2). The data void itself is not the problem; the problem is the space it creates for media manipulators to share false information.

Users of these platforms can stumble upon misinformation while searching or could be led to it through the interference of ‘media manipulators’. Search engines work with massive amounts of data that are meant to provide searchers with the information they are looking for. However, the number of results you encounter depends on the specific term, or combination of terms, that you search for. Furthermore, the quality of the information provided can vary from high-quality, legitimate news to low-quality, problematic content.

One example is the word ‘basketball’. When you google this word, you will encounter many results, ranging from the basketball as an object to the nearest and most recent basketball game. Your results are based on your current location and on search engine technology that bases its logic on what previous searchers were looking for.

When searching for something common like ‘basketball’ you will probably not encounter too many problems, but what happens when you type in random letters? You will probably get few or no results. “There is a long tail between a term like ‘basketball,’ which promises a seemingly infinite number of results, and one with zero results. In that long tail, there are plenty of search queries that can drop people into a data void rife with existing but deeply problematic results” (Golebiewski & boyd, 2019, p. 5). Problematic content might be of low quality or low authority and “some of these data voids are intentionally exploited to introduce disturbing content, while others are created to promote political propaganda” (Golebiewski & boyd, 2019, p. 5).
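To make the long tail more concrete, here is a minimal sketch in Python. It is my own toy illustration, not how Google or Bing actually work: the five-document corpus, the function names, and the threshold of two matching documents are all hypothetical assumptions. It only counts how many documents match a query, which is the quantity side of a data void; it says nothing about the quality or authority of those documents.

```python
from collections import defaultdict

# Hypothetical toy corpus (document id -> text); real search indexes are vastly larger.
DOCUMENTS = {
    1: "basketball game scores tonight",
    2: "buy a basketball online",
    3: "history of basketball rules",
    4: "basketball training drills",
    5: "obscureterm explained by an anonymous blog",
}


def build_index(documents):
    """Map each lowercase word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index


def matching_documents(index, query):
    """Return the ids of documents that contain every word in the query."""
    words = query.lower().split()
    if not words:
        return set()
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())
    return result


def classify_query(index, query, void_threshold=2):
    """Label a query by its number of matches (the threshold is an arbitrary assumption)."""
    count = len(matching_documents(index, query))
    if count == 0:
        return "no results"
    if count < void_threshold:
        return "possible data void"
    return "well covered"


index = build_index(DOCUMENTS)
for query in ["basketball", "obscureterm", "xqzv"]:
    print(query, "->", classify_query(index, query))
```

In this toy corpus, the only document that matches the made-up word "obscureterm" comes from a single anonymous source. A searcher who types that term sees that document and nothing else, which is the situation Golebiewski and boyd describe.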

According to Golebiewski and boyd (2019), there are five different types of data voids. I will discuss briefly how these occur and how media manipulators might look for them to spread content that we consider to be problematic.

- Breaking news data voids emerge after a major news event occurs. The combination of different terms associated with this specific event will lead to gaps in search inquiries that need to be filled with content. In this process of content production, media manipulators have the opportunity to link their content to these specific terms.

- Some media manipulators will create what Golebiewski and boyd call strategic new terms. In this case, information is created and linked to terms that only connect to this problematic content. The coronavirus, for example, has opened doors for manipulators to spread problematic content, as explained by Maly (2020).

- Outdated terms are less likely to be searched for, but manipulators can still attach new, problematic information to them. Because no new content is being made for outdated terms, they leave openings for those who want to spread problematic content.

- Fragmented concepts are data voids used by manipulators to connect seemingly unrelated terms to the content they want to spread. As an example, we can look at Maly's (2019) research on how the Alt-right used an interview with Charlie Kirk to spread a new term that was directly linked to content against him.

- Problematic queries lead searchers to content that might not be factual. Those looking for information that could be linked to conspiracy theories, for example, could stumble upon information that ‘proves’ those theories rather than disproves them. “Many conspiracy theorists, hate groups, and media manipulators attempt to push searchers to use specific, problematic search queries they know will lead to these voids” (Golebiewski & boyd, 2019, p. 34).

The internet's influence on democracy

In our democracy, relevant and reliable information is important for maintaining a healthy public sphere. The idea of the public sphere, as explained by Habermas (1991) in his book The Structural Transformation of the Public Sphere: An Inquiry into a Category of Bourgeois Society, is that people in a society can come together to discuss important issues that are common to all. This concept helps us visualize how democracy is built and how we can maintain it. One of the affordances of technological development is that it opens up the public sphere and at the same time creates smaller niches. We can encounter others more easily on social media and information spreads faster than ever. This could be beneficial, as we can share our thoughts and encounter ideas outside of our own experience more easily. But differentiating true from false information can be more difficult for those who come across it online.

Social media are not the only drivers of this change in handling information online. Search engines, and the massive amounts of information they put within reach of everyone with internet access, have also had an impact on society. As Vaidhyanathan (2012) argues, Google cultivates a specific idea of what information is useful for users: “its process of collecting, ranking, linking and displaying knowledge determines what we consider to be good, true, valuable, and relevant” (Vaidhyanathan, 2012, p. 7). Because Google is so thoroughly integrated into our society, companies compete to appear at the top of its search results. “Google will affect the ways that organizations, firms, and governments act, both for and at times against their ‘users’” (Vaidhyanathan, 2012, p. 3). But we should not forget that Google and other search engines are not neutral: “its biases (valuing popularity over accuracy, established sites over new, and rough rankings over more fluid or multidimensional models of presentation) are built into its algorithms” (Vaidhyanathan, 2012, p. 7).

For many, Google has become their primary source for looking up information. The potential impact of this monopoly on democracies around the world becomes clearer if we imagine what could happen when we google a politician. Information about this person can vary from trivial stories about their personal life to campaign programs. But it gets tricky when we cannot be sure whether the information we read is true or manipulated. False information can influence how we vote and therefore greatly impact our democracy.

For example, deepfake technology has become more and more prevalent. Deepfakes are produced with technologies that can layer one person's face over another's and thereby change that person's apparent "behavior", and they are being used to create fake news. Deepfakes are often videos, which makes them a direct threat to democracy: we are inclined to believe a video when we see it, and “disinformation can have a much greater effect than its subsequent debunking” (Parkin, 2019). These videos are most often shared through social media or spread through the use of a data void.

Data voids in our hybrid media system

Chadwick (2017) explains how our media system has become hybrid through new technologies that make shifting between different forms of media easier: “the hybrid media system is built upon interactions among older and newer media logics—where logics are defined as bundles of technologies, genres, norms, behaviors, and organizational forms—in the reflexively connected social fields of media and politics” (Chadwick, 2017, p.4).

Information today flows on different levels. Chadwick uses the Obama presidential campaign in 2008 as an example: “Campaign teams can no longer assume that they will reach audiences en masse. They now create content targeted at different audience segments and they disseminate this content across different media” (Chadwick, 2017, p. 9). Election campaigns make use of the hybrid media system to reach their audience on different channels and levels to enhance their influence on society.

One implication of using these different (online) media is that media manipulators also find ways to spread false or low-quality information. While one can encounter data that is true and of high quality when searching, one can also easily stumble across false information that is spread through a data void.

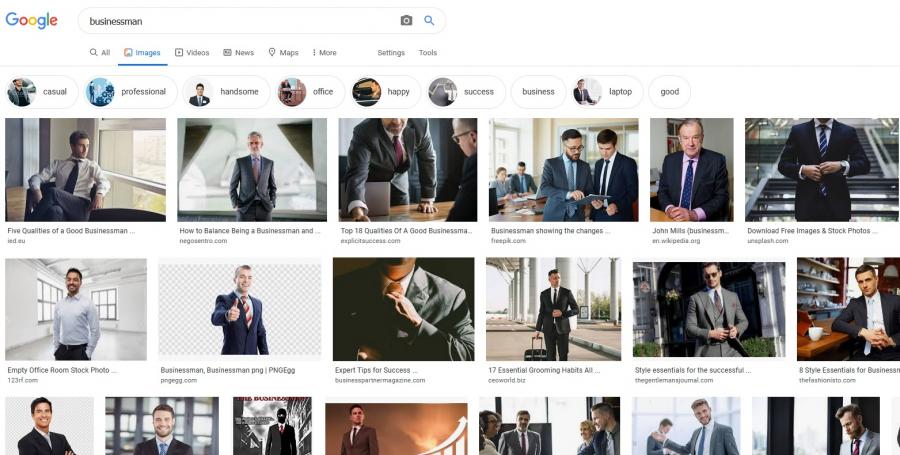

Another way in which data voids can affect our media system, and likewise our democracy, is through bias. Bias on the part of search engine designers can cause search results to reflect existing biases in society. For example, when searching for the term ‘businessman,’ the image results are almost entirely pictures of white males. We should be even more careful when dealing with political content: “For politically charged content, any decision by search engine designers becomes political itself” (Golebiewski & boyd, 2019, p. 32). One can rarely be truly objective, but we need to make sure that search engines are as objective as possible.

How can we deal with data voids?

Data voids are difficult to detect and even more difficult to solve. In the vast amount of data available online, it is hard to filter out what is false and what is legitimate.

Search engine optimization (SEO) is used by content makers to prepare their content for uptake on search engines. Of course, this can also be used by media manipulators to make their misleading information easier to find or to link it to popular content. For example, YouTube's recommendation system sometimes suggests videos with questionable content. “Major search engines consistently struggle to alter their systems so that they return high-quality results under a constant barrage of manipulation attempts” (Golebiewski & boyd, 2019, p. 43). But even these efforts cannot prevent media manipulators from finding opportunities to spread false information.

The main problem lies in how search engines work. “Bing and Google do not produce new websites; they bring to the surface content that other people produce and publish elsewhere on third-party platforms. Without new content being created, certain data voids cannot be easily cleaned up” (Golebiewski & boyd, 2019, p. 44). Some data voids are easier to solve than others. For example, breaking news data voids usually disappear as more high-quality information about the event is created and shown with a higher page rank on search engines.

Other data voids might be difficult to detect, and this makes for a constant battle between search engine companies and media manipulators. As hard as they are to detect, companies like Google and Bing should work on improving their systems to detect low-quality information.

To prevent bias from interfering in these search engines, companies should make sure that the content shown is inclusive and as free of bias as possible. A company that holds itself to high standards and safeguards this inclusivity is better placed to design fair search engine systems. Government intervention is difficult because Google and Bing are run by private companies that are not under state control.

As we have seen above, data voids are an intricate problem: they give media manipulators opportunities to spread content that might be problematic or harmful to our democracy. In our hybrid media system, search engines play a bigger role than ever, since many of us treat them as a reliable source of information. Data voids exist in many different forms and media manipulators do their best to use these voids to share content with the broader public. The availability of reliable, high-quality news is important for creating a healthy democracy. Therefore, we do not want media manipulators to interfere in politics or any other part of our society. Data voids are hard to detect, and even harder to solve. We should be aware of this, and search engine companies should do everything in their power to solve data voids.

References:

Chadwick, A. (2017). The hybrid media system: Politics and power. Oxford University Press.

Golebiewski, M., & boyd, d. (2019, October). Data Voids: Where Missing Data Can Easily Be Exploited. Data & Society.

Habermas, J. (1991). The Structural Transformation of the Public Sphere: An Inquiry into a Category of Bourgeois Society. MIT Press.

Parkin, S. (2019, June 11). The rise of the deepfake and the threat to democracy. The Guardian.

Vaidhyanathan, S. (2012). The Googlization of everything (And why we should worry). University of California Press.