How WhatsApp's privacy policy raises major concerns

This article provides an in-depth analysis of the privacy policy of the messenger service WhatsApp. It examines the platform's privacy practices in terms of user control and compliance with the European Union General Data Protection Regulation (GDPR). To assess the policy, I used the data mining tool PrivacyCheck, developed by the Center for Identity at the University of Texas. PrivacyCheck analyzes privacy policies according to two criteria: user control and compliance with the GDPR. It then rates each criterion as low risk (green), medium risk (yellow) or high risk (red). Why people use WhatsApp despite the privacy concerns around the platform is explored through the concept of the expository society. Finally, the article sheds light on WhatsApp as a surveillance capitalist.

WhatsApp is an internationally available freeware instant messenger platform. The app allows users to send text messages and recorded voice messages, as well as photos, documents, contacts, and GIFs and emoticons. Additionally, the service supports voice and video calls and location sharing. The app was founded in 2009 by Brian Acton and Jan Koum and bought by the information technology company Facebook (now Meta) in 2014 for 19 billion U.S. dollars (Wikipedia, 2022).

Previous research on WhatsApp's data practices points to various privacy concerns. In 2021, ProPublica, a non-profit investigative newsroom, published the report "How Facebook Undermines Privacy Protections for Its 2 Billion WhatsApp Users", which examines WhatsApp's privacy practices. End-to-end encryption means that messages are encoded in an unreadable format that is unlocked only when they reach the intended recipient. ProPublica reported that this protection is less complete than WhatsApp's messaging suggests: messages that users report are forwarded to the company's content reviewers in readable form, and unencrypted metadata is collected and shared with Meta, the parent company. Additionally, ProPublica brought to light more than a dozen instances since 2017 in which U.S. justice authorities sought court orders for WhatsApp's metadata. Hence, according to ProPublica, U.S. law enforcement uses WhatsApp users' metadata in criminal proceedings. Between 2017 and 2021, the rate at which WhatsApp shared users' confidential data with U.S. law enforcement increased from 84% to 95%. So, while the platform's privacy settings suggest that users control their own metadata, in that they can decide whether everyone, only contacts, or nobody can see their profile photo, status, and when they last opened the app, WhatsApp fails to mention that this data is gathered and analyzed by the company regardless of which setting a user chooses (ProPublica, 2021).

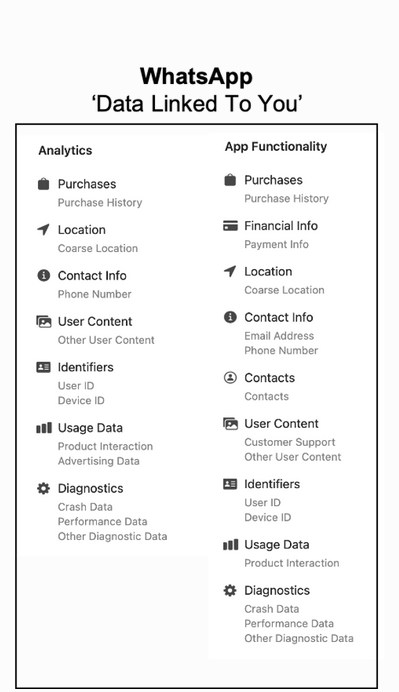

A 2021 Forbes article adds to the examination of WhatsApp's metadata collection. It explains that metadata is "data about your data" (Doffman, 2021), whose power should not be underestimated in comparison to the 'actual data'. All of a WhatsApp user's metadata is categorized as "data linked to you" (see Figure 1).

Figure 1 Metadata (data linked to you)

Figure 1 demonstrates that metadata exposes various components of WhatsApp users' private information. Collecting, analyzing and sharing this information without transparency is an attack on users' privacy.

European Union General Data Protection Regulation

This section defines the concepts used in this article. A privacy policy is a document that explains how a party collects, uses, discloses, or maintains a customer's or client's data (TechTarget, 2013).

The European Union General Data Protection Regulation (GDPR) is a data protection and privacy law that took effect in 2018 and replaced the EU Data Protection Directive from 1995. The law strives to unify data regulation EU-wide, both to simplify compliance for businesses and to give their customers and users control over their data (Zaeem & Barber, 2021). The regulation's key principles are:

Lawfulness, fairness, and transparency. Lawfulness means that data controllers and processors need a legal basis for their actions: the consent of the data subject, a contractual obligation, a legal obligation, the protection of vital interests, the public interest, or legitimate interests. Fairness implies that personal data is not processed in detrimental, misleading or unexpected ways. Transparency means that data subjects must be informed about what is being done with their data. Purpose limitation means that personal data can only be collected and processed for specified, explicit and legitimate purposes. Data minimization requires that the data collected and processed be limited to what is necessary for those purposes.

Accuracy dictates that personal data must be accurate and up to date. Outdated or incorrect data must be removed or rectified. Storage limitation states that personal data must not be stored any longer than is necessary for the specified purposes. Integrity and confidentiality (security) require that data collection and processing ensure appropriate security for personal data and prevent unauthorised use, damage and loss. Lastly, accountability ensures that data controllers, as well as data processors, are responsible for compliance with the other principles.

Even though the GDPR is EU legislation, it applies to any entity that collects or processes data about EU citizens, regardless of where that entity is located (Zaeem & Barber, 2021).

Theory

The expository society is a concept coined by Bernard E. Harcourt (2015) to describe society in advanced capitalist liberal democracies: a society of exposure, in which individuals knowingly and mostly willingly exhibit themselves. In our new digital age, every action leaves digital traces that can be collected, monitored, data-mined and traced back to us. Linked together, our data is assembled into a profile of who we are: our digital self. We thus construct a new 'virtual transparence' that transforms our relations with others and with ourselves. A new expository power has emerged that constantly tracks and constructs our digital selves. This is a form of self-inflicted and participatory surveillance (Harcourt, 2015).

Shoshana Zuboff (2019) describes a new type of capitalism that is based upon surveillance and datafication: surveillance capitalism. At its centre is a new economic logic that claims private experience for the marketplace and turns it into a commodity that can be sold and purchased. Surveillance capitalism claims personal human experience as a free source of ‘raw material’ that is collected and translated into behavioural data. These data are then combined with advanced computational abilities to create predictions of people’s behaviour, and the predictions are sold to business customers. Hence, there is a new kind of marketplace that trades exclusively in human futures (Zuboff, 2019).

Method

This paper examines the privacy policy of WhatsApp to assess the quality of its privacy protection practices. To that end, I consulted articles published in 2021 by ProPublica and Forbes that investigate WhatsApp's privacy practices. Additionally, I used the proprietary data mining tool PrivacyCheck, which was developed by the Center for Identity at the University of Texas to investigate privacy policies. PrivacyCheck analyzes a privacy policy by answering ten questions concerning the privacy and security of users' data. The ten questions ask whether and how the service:

1. handles the user’s email address.
2. handles the user’s credit card number and home address.
3. handles the user’s Social Security number.
4. uses or shares PII for marketing purposes.
5. tracks or shares location.
6. collects PII from children under 13.
7. shares personal information with law enforcement.
8. notifies the user when the privacy policy changes.
9. allows the user to control their data by editing or deleting information.
10. collects or shares aggregated data related to personal information.

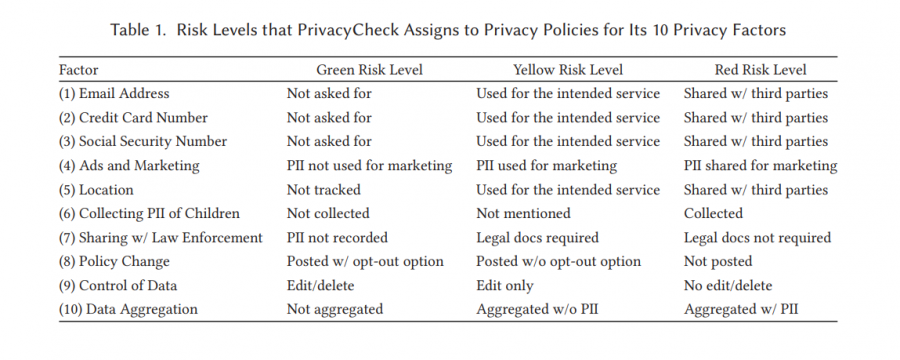

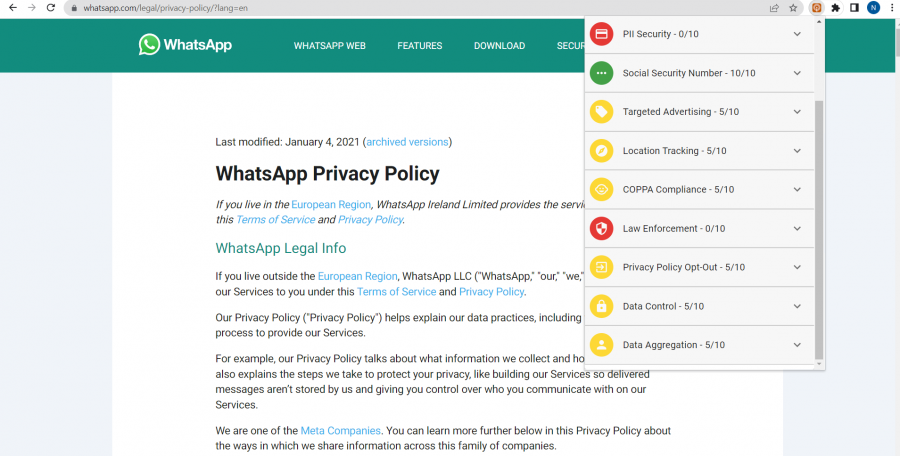

The tool then scores the privacy policy on each of these factors according to three levels of risk: high (red), medium (yellow), and low (green). See Figure 2.

Figure 2 PrivacyCheck's risk assessment for privacy policies

PrivacyCheck also tests whether the privacy policy is in line with the GDPR. After conducting a risk assessment of WhatsApp's privacy policy, I analyze the results using the concepts of the expository society and surveillance capitalism. The research was carried out between 27 May and 9 June 2022.

WhatsApp's privacy policy; the privacy check

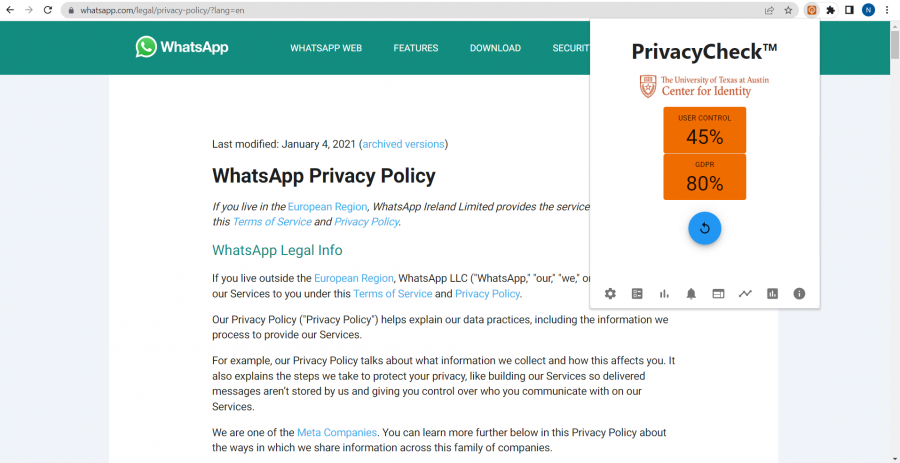

Running the PrivacyCheck tool on WhatsApp's policy showed that the messenger app scores 45% on user control, which places it at the medium risk level (yellow), and 80% on meeting the requirements of the European Union General Data Protection Regulation, which places it at the low risk level (green), as shown in Figure 3.

Figure 3 The privacy check results of WhatsApp's privacy policy

User control

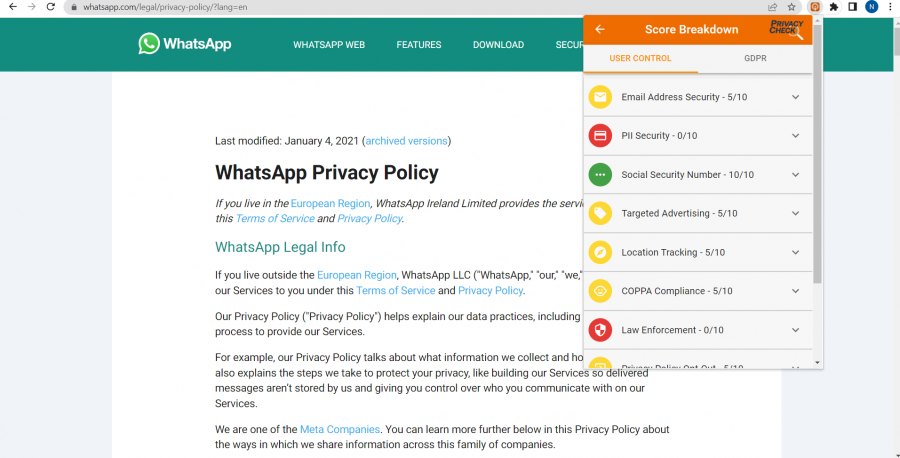

Figure 4 The privacy check results of WhatsApp's privacy policy; user control

Figure 5 The privacy check results of WhatsApp's privacy policy; user control

First, the user control category, rated medium risk overall, is examined factor by factor, following the ten questions listed above. WhatsApp asks for users' email addresses and uses them for the intended services. Thus, the factor is categorized as medium risk (5 out of 10). The second factor is labelled as high risk (red, 0 out of 10) since personally identifiable information (PII) such as the user’s credit card number and home address is collected, stored and shared with third parties. WhatsApp does not ask for the Social Security number. Therefore, this factor is labelled green and scores 10 out of 10. WhatsApp collects PII and uses it for marketing purposes. Hence, the factor is flagged as medium risk with a 5 out of 10. The next factor, location tracking, is categorized as yellow since the user's location is tracked for the intended services but not shared with third parties. The factor based on the Children's Online Privacy Protection Act (COPPA) is labelled as medium risk since the policy does not mention whether WhatsApp collects PII from children under 13. The privacy check confirms ProPublica's finding that WhatsApp shares personal information with law enforcement. Therefore, the factor is labelled as high risk (red) and scores 0 out of 10 for user control. WhatsApp notifies the user about privacy policy changes but offers no opt-out option, which ranks as medium risk (5 out of 10). WhatsApp only partially allows users to control their data: users can edit information but not delete it. Thus, the factor is categorized as medium risk; for the low-risk category, WhatsApp would have to allow users to both edit and delete personal information. Lastly, WhatsApp collects or shares aggregated data without PII. Consequently, this factor is labelled as medium risk (yellow) with a 5 out of 10.
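
The ten ratings above add up to the reported 45%. The following minimal sketch reproduces that arithmetic. It assumes that each colour maps to a fixed number of points (green 10, yellow 5, red 0, as in the scores quoted above), that factors described only by colour or risk level carry the same points, and that the overall score is the plain, equally weighted sum. How PrivacyCheck aggregates the factors internally is not stated in this article, and the names `POINTS`, `USER_CONTROL` and `overall_score` are hypothetical.

```python
# Assumed mapping from PrivacyCheck's colour labels to points per factor.
# The 10 / 5 / 0 values follow the scores quoted in the text.
POINTS = {"green": 10, "yellow": 5, "red": 0}

# User-control ratings for WhatsApp as described above, in question order.
# Factor names are paraphrased for illustration.
USER_CONTROL = {
    "email address": "yellow",
    "credit card number and home address": "red",
    "Social Security number": "green",
    "PII used for marketing": "yellow",
    "location tracking": "yellow",
    "PII from children under 13 (COPPA)": "yellow",
    "sharing with law enforcement": "red",
    "policy change notification": "yellow",
    "editing or deleting data": "yellow",
    "aggregated data": "yellow",
}


def overall_score(ratings: dict[str, str]) -> float:
    """Return the percentage of achievable points (assumed: equal weights)."""
    earned = sum(POINTS[colour] for colour in ratings.values())
    achievable = POINTS["green"] * len(ratings)
    return 100 * earned / achievable


if __name__ == "__main__":
    print(f"User control: {overall_score(USER_CONTROL):.0f}%")
```

Under these assumptions, the single green factor contributes 10 points and the seven yellow factors contribute 35, which gives 45 out of 100.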

European Union General Data Protection Regulation

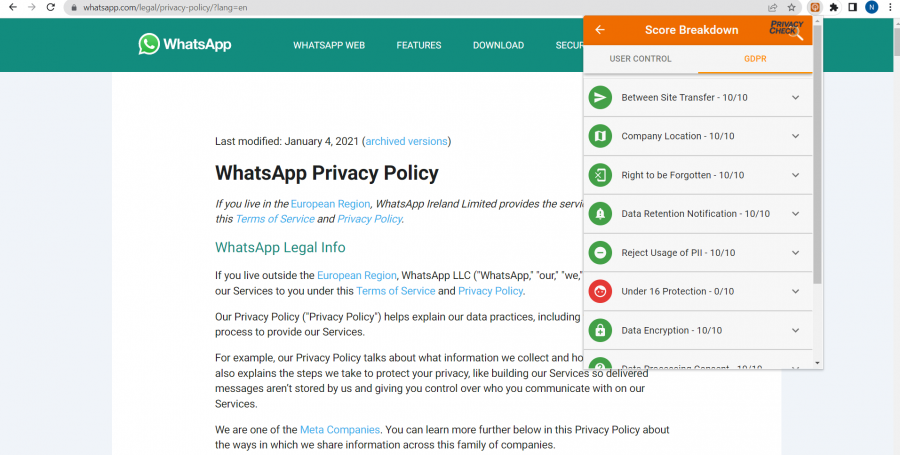

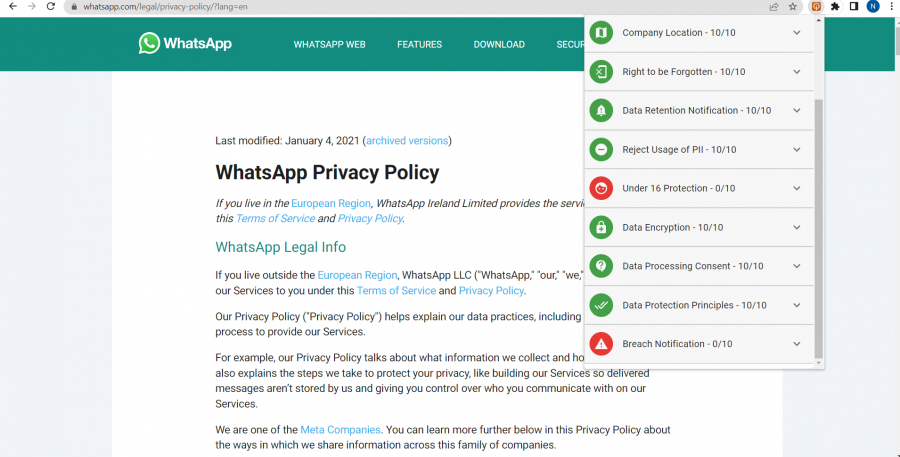

Next, Figures 6 and 7 are used to examine how WhatsApp meets 80% of the GDPR requirements.

Figure 6 The privacy check results of WhatsApp's privacy policy; GDPR

Figure 7 The privacy check results of WhatsApp's privacy policy; GDPR

Between-site transfer is labelled as green (low risk) since the service shares the user's information with other websites only with the user's consent, which they give by accepting the privacy policy. WhatsApp discloses where the company is based and where the user's information will be processed and/or transferred. Therefore, company location is labelled as low risk as well, with a 10 out of 10. WhatsApp supports the user's right to be forgotten. This implies that, following a request, the messenger service will delete all of the user's information. Therefore, this factor is categorized as low risk (green). If WhatsApp retains information for legal purposes after the user has asked for it to be forgotten, the platform will inform the affected user. Under the GDPR, this is categorized as low risk (green). WhatsApp allows users to reject the use of their PII (personally identifiable information). Hence, the factor is labelled as low risk (10 out of 10). The under-16 protection is labelled as high risk (red) because WhatsApp's policy does not mention whether it restricts the use of PII of children under the age of 16. WhatsApp advises the user that their data is encrypted even while at rest. Thus, data encryption is categorized as green (low risk). Likewise, data processing consent is categorized as low risk (10 out of 10) since the messenger platform asks for the user's informed consent to data processing. The data protection principles are also labelled green (low risk) because WhatsApp implements all of the principles of data protection by design and by default. The breach notification factor is labelled as high risk (red, 0 out of 10) since the policy does not commit to notifying the user of security breaches without undue delay.

In summary, the privacy check of WhatsApp's privacy policy has revealed that it meets 80% of the GDPR requirements examined, since only 2 of the 10 questions were labelled as high risk while the remaining 8 were categorized as low risk. However, the user control examination has shown that WhatsApp's data collecting, mining and processing raises privacy concerns. This is underlined by the fact that the policy scores only 45% on user control. We can conclude that WhatsApp gathers, mines, and shares its users' confidential information within the limits of the GDPR. Additionally, a low-risk (green) rating should not be misunderstood as an indicator of users' security and privacy. The rating only reflects whether WhatsApp informs the user about how the data is being used.
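
The 80% figure can be checked with a short tally of the GDPR ratings reported above. This is a sketch under stated assumptions: each green factor is taken to count fully and each red factor not at all, all factors are weighted equally, and the factor names in the hypothetical `GDPR` dictionary are paraphrased from the text. It is not PrivacyCheck's own code.

```python
from collections import Counter

# GDPR ratings for WhatsApp as reported in the text (names paraphrased).
GDPR = {
    "between-site transfer": "green",
    "company location": "green",
    "right to be forgotten": "green",
    "notice of retention for legal purposes": "green",
    "rejecting use of PII": "green",
    "under-16 protection": "red",
    "data encryption": "green",
    "data processing consent": "green",
    "data protection by design and by default": "green",
    "breach notification": "red",
}

# Count how many factors received each colour.
tally = Counter(GDPR.values())

# Assumption: green counts fully, red counts as zero, equal weights.
share_met = 100 * tally["green"] / len(GDPR)

# List the factors that were flagged as high risk.
flagged = [name for name, colour in GDPR.items() if colour == "red"]

print(f"GDPR requirements met: {share_met:.0f}%")
print("High-risk factors:", ", ".join(flagged))
```

The tally makes the limits of the headline number visible: the two factors that fail, under-16 protection and breach notification, disappear behind an overall green rating.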

The Expository Society

In light of these results, the question arises: Why do we still use WhatsApp, assuming or knowing that our personal data will be recorded, stored, monitored, archived, data-mined, and traced back to us?

The answer lies within the concept of the expository society. Harcourt (2015) states that, blinded by the pleasure of texting our friends, we willingly and knowingly fall victim to datafication. According to him, "it is one thing to know, and quite another to remember long enough to care— especially when there is the ping of a new text" (Harcourt, 2015). The distractions and sensual pleasures of the new digital age therefore enable digital surveillance. In fact, they pave the way for a new form of self-inflicted and participatory surveillance. Linked together, our data constitutes a composite sketch of who we are. Digital mining and profiling allow corporations to (mis)use users’ information to target advertisements, shape consumer behaviour and maximize revenues from services and goods (Westerlund, 2021). Nevertheless, in advanced capitalist liberal democracies, we accept digital surveillance and thereby pave the way for surveillance capitalism as outlined by Zuboff (2019).

Surveillance Capitalism

Surveillance capitalism is a new economic logic and form of capitalism that treats human experience as 'raw material' for translation into behavioural data. This "proprietary behavioural surplus" (Zuboff, 2019) is fed into machine learning algorithms to produce prediction products that are sold on the "behavioural futures market" (Zuboff, 2019). The privacy check analysis has revealed that WhatsApp, as part of the parent company Meta, collects and mines users' private information and shares it with third parties. Thus, the messenger service treats human experience as 'raw material', a source of behavioural surplus, and trades it on the behavioural futures market. Seeking profit maximization and market domination, WhatsApp and Meta collect, use and benefit from users' data while bypassing user awareness. These non-transparent actions of the tech giants clearly invade the privacy of WhatsApp's and Meta's users even though they stay within the limits of the GDPR. The surveillance capitalists' success in collecting, mining and sharing users' data can be seen in Meta's 2021 revenue statistics. According to Statista, Meta generated approximately 114.93 billion U.S. dollars in advertising revenue in 2021, and the majority of the social network's income comes from advertising (Statista, 2022). Since advertising becomes effective through targeting and personalization, the companies depend on the 'prediction products' that are sold within the new economic logic. Thus, Meta's and WhatsApp's profit builds on exploiting users' data. The market domination and financial success of surveillance capitalists like WhatsApp and Meta demonstrate that in the digital age, "the world’s most valuable resource is no longer oil, but data" (The Economist, 2017).

Besides invading users' privacy, surveillance capitalists have established epistemic inequality. Zuboff (2019) explains that private surveillance capital has institutionalized inequality of knowledge: they (the tech giants) know everything about us (the users) while we know almost nothing about them. Since knowledge is tied to power, this has also resulted in unequal power structures. Hence, we are entering the third decade of the 21st century with an asymmetry of knowledge and power (Zuboff, 2019). Surveillance capitalism shows that the initial hopes that the Internet would democratize knowledge through global accessibility have turned out to be false: it actually undermines democracy and institutionalizes inequality of knowledge and power. Finally, Zuboff (2019) states that it is more accurate to talk about surveillance policies than about privacy policies.

Conclusion

The research on WhatsApp's privacy policy has shown that the messenger service, which belongs to Meta, raises privacy concerns. Our data is being collected, mined, and exploited. The in-depth analysis of WhatsApp's privacy policy is in line with earlier reporting by ProPublica and Forbes. It shows that WhatsApp does not guarantee users' privacy or security and shares part of users' confidential information with third parties. While the messenger app meets the requirements of the GDPR, it does not provide privacy; it only informs users about the data-gathering process and obtains their agreement. So, legally WhatsApp is doing nothing wrong. Morally, however, it raises major concerns.

By willingly exposing ourselves, we make it both easy and cheap for tech giants like WhatsApp and Meta to surveil, monitor and target us. Blinded by the pleasure we take in digital practices, we fall victim to datafication and participatory surveillance. This also paves the way for surveillance capitalism, which sees human experience as a source of behavioural surplus and trades 'prediction products' on the new marketplace of human futures. Seeking profit maximization and market domination, the surveillance capitalists WhatsApp and Meta claim users' data as a commodity that can be collected, mined and sold. This not only invades the privacy of their users but also institutionalizes inequality of knowledge and power.

References:

Doffman, Z. (2021, January 6). WhatsApp Beaten By Apple’s New iMessage Privacy Update. Forbes.

Harcourt, B. E. (2015). Exposed: Desire and Disobedience in the Digital Age. Harvard University Press.

Wikipedia contributors. (2022, June 13). WhatsApp. Wikipedia.

Statista. (2022, July 27). Meta: advertising revenue worldwide 2009-2021.

TechTarget. (2013, October 25). privacy policy. WhatIs.Com.

The Economist. (2017, May 11). The world’s most valuable resource is no longer oil, but data.

Zaeem, R. N., & Barber, K. S. (2021). The Effect of the GDPR on Privacy Policies. ACM Transactions on Management Information Systems, 12(1), 1–20.

Zuboff, S. (2019). The age of surveillance capitalism: The fight for a human future at the new frontier of power. Profile Books.