Will AI save us from fake news?

Will AI ever be able to regulate the distribution of ‘fake news’ on social media? Probably not anytime soon. Here’s why: disinformation has always found a way into our public sphere, be it through digital media platforms, traditional news outlets, the printing press, or rumors and speculation.

History shows that it is impossible to fully stop the spread of ‘fake news’, be it satire about medieval lords, World War II propaganda pieces, or a Trump Twitter scandal. The only difference between these events is the tools at hand: as the saying goes, 'modern problems require modern solutions'. Nowadays, we have fancy technological tools, like AI, that are supposed to help us out. However, that does not necessarily bring us any closer to solving the problem of ‘fake news’, even if algorithms come into the picture. On the contrary, in this day and age, it is crucial that we remain critical of current methods of ‘fake news’ detection.

We were never taught to detect 'fake news'

Digital media allows for innovative ways for misinformation to spread, and that is a problem. Technological progress, along with the constant streams of alluring, entertaining, rapid, and always new information, has turned us into news-consuming addicts. I have never met a person who has not obtained some sort of news through online means. Have you?

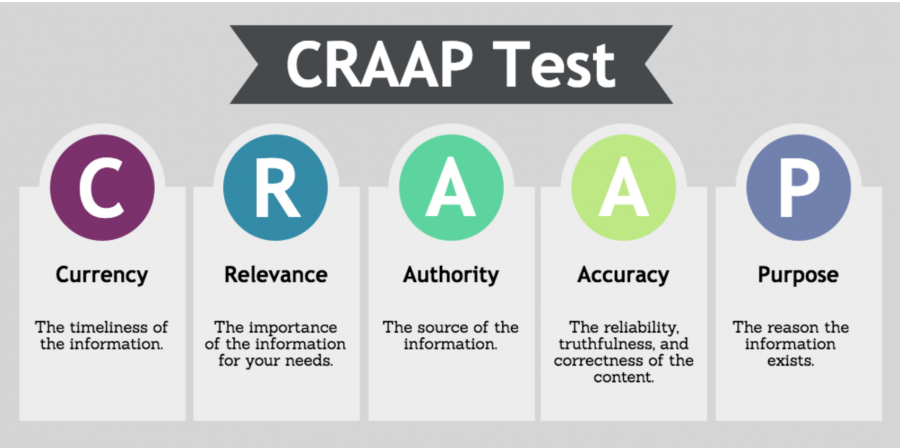

Constant streams of information come our way on a daily basis. No one was ready to face the responsibility of having access to so much information, nor did anyone have the ability to filter it. We are like naive children learning from their own mistakes. Everyone is taking teeny tiny steps, trying to gain confidence in finding credible sources and personal strategies for identifying what we can trust.

One of the existing methods to detect false information on a user experience level.

Social media platforms have experienced immense growth. The established ways of moderating at this scale have exhausted themselves: community-level tracking does not work as it did twenty years ago (Gillespie, 2020). The influence of platform content has also blown up, extending the consequences of online harms beyond the sites where they occur, "catalyzed by Gamergate, Myanmar, revenge porn, the 2016 U.S. presidential election, Alex Jones, and Christchurch" (Gillespie, 2020).

The ultimate solution promised by the big tech giants is AI. This promise reflects the Californian Ideology of Silicon Valley: social media companies treat the spread of 'fake news' as a technological issue and offer technological solutions (Geiger, 2016).

Is AI going to save us from misinformation?

New digital ways of spreading misinformation, straight out of a sci-fi novel, require new and innovative ways to counter them, and AI is here to help. AI is scalable, which makes it the most low-cost, low-effort, and long-term solution offered by social media companies to save our timelines from misinformation and hoaxes. However, the technology has not been completely successful so far.

Recent developments show that, to improve the detection of false information, the tech industry combines “natural language processing, machine learning and network analysis” (Lee & Fung, 2021). In practice, this means that the relevance between title and content is checked first, and if the article passes, the author’s authenticity is checked next. Then the machine learning algorithms come into the picture to do the dirty filtering work (Islam et al., 2021).
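To make the staged check more tangible, here is a rough sketch in Python. It is only an illustration of the idea, not the model from Islam et al. or any platform's actual system: the word-overlap score, the 0.2 threshold, the `TRUSTED_AUTHORS` list, and the `classify` stub are all assumptions invented for this example.

```python
import re

# Hypothetical allow-list; real author verification would work very differently.
TRUSTED_AUTHORS = {"jane doe", "reuters staff"}

def tokens(text):
    """Return the set of lowercase words longer than three letters."""
    return {w for w in re.findall(r"[a-z']+", text.lower()) if len(w) > 3}

def title_matches_body(title, body, threshold=0.2):
    """Stage 1: share of title words that also appear in the body."""
    title_words = tokens(title)
    if not title_words:
        return False
    overlap = len(title_words & tokens(body)) / len(title_words)
    return overlap >= threshold

def author_is_known(author):
    """Stage 2: placeholder authenticity check against the allow-list."""
    return author.strip().lower() in TRUSTED_AUTHORS

def classify(body):
    """Stage 3: stand-in for a trained model; it only flags a few loaded phrases."""
    suspicious = ("miracle cure", "they don't want you to know")
    return "suspicious" if any(p in body.lower() for p in suspicious) else "no flag"

def screen(title, body, author):
    """Run the three stages in order and stop at the first failure."""
    if not title_matches_body(title, body):
        return "rejected: title does not match content"
    if not author_is_known(author):
        return "rejected: unknown author"
    return classify(body)
```

Even in this toy version, every stage hides a judgment call: someone has to decide who counts as a trusted author and which phrases count as suspicious.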

As great as this initiative is, one should be critical of its reliability. Recognizing whether something is untruthful or not requires a fundamental knowledge of politics, society, and culture as well as a sensitivity towards manipulative content. Interpreting a piece of news as ‘fake’ also depends on one’s political views; ‘one’ here being the algorithms’ developers.

In itself, AI is not that smart: it only becomes smart if it is trained with data. And in that sense, it only recognizes what has been indexed as fake news before.
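A toy example shows what this dependence on earlier examples looks like. The sketch below is a deliberately naive word counter, not a real detection model, and the headlines are made up for illustration: it scores a headline by how many of its words already appeared in items labelled as fake.

```python
from collections import Counter

def train(labelled_fakes):
    """Count the words that occur in headlines already labelled as fake."""
    counts = Counter()
    for headline in labelled_fakes:
        counts.update(headline.lower().split())
    return counts

def fake_score(headline, counts):
    """Fraction of the headline's words seen in earlier fake examples."""
    words = headline.lower().split()
    if not words:
        return 0.0
    return sum(1 for w in words if w in counts) / len(words)

# Invented training examples, for illustration only.
known_fakes = ["vaccine contains microchips", "microchips hidden in vaccine doses"]
model = train(known_fakes)

familiar = fake_score("vaccine microchips confirmed", model)
novel = fake_score("bridge collapse staged by actors", model)
```

The familiar hoax scores high because its vocabulary was indexed before, while the novel one scores zero: to this model, a brand-new false narrative simply looks clean.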

The question of scale comes into the picture when it comes to content moderation by AI. Scale can be changed, but it would require a "profound act of counter capitalist imagination to find new ways to fit new ML techniques with new forms of governance—not as a replacement for repetitive human judgments, but to enhance the intelligence of the moderators, and the user communities, that must undertake this task" (Gillespie, 2020). However, that would require a major shift in the current platform systems.

AI vs. censorship

The key concern is the space this new development opens for news censorship. Nowadays, social media platforms embrace their authority to silence particular opinions or actors. This is most noticeable when political crises are approaching. It becomes easier to exclude certain narratives from our social media feeds when the exclusion can be blamed on AI. Such a tool can be critical in places with fragile political climates.

This issue becomes even more evident when we look at countries with stricter censorship of online and offline media sources. Many technological solutions have arguably taken us closer to the concept of the ban-opticon. That is to say, ensuring individual security is used as the primary rhetorical justification to govern information and implement new control measures (Bigo, 2008).

The idea that everything should circulate without interference is problematic, as it would destroy democracies. To be clear, we cannot claim that all interventions are censorship. What counts as censorship and what does not cannot be defined solely by the fact of the intervention; the general social and political context has to be taken into account. The concern, again, is that AI can serve as an excuse to get rid of unfavorable information.

Can fake news detection be any harder?

Another issue is that some topics will be detected better than others, depending on the amount of data and media coverage available. When a new hot topic emerges, it takes time for the algorithms to learn how to detect false narratives, and in the meantime those narratives effectively make their way into our timelines.

In addition, a lot of information nowadays is spread through private messengers and chats unmoderated by the platform, such as Telegram. While AI can offer solutions for social media such as Facebook, a lot of ‘fake news’ exchanges still remain untraceable.

What can we do?

Our society consists of human guinea pigs. In other words, we are the first generation of fervent internet users who were neither taught about nor warned of the cruel waves of disinformation awaiting us online. The best we can do is to find our way through ‘fake news’ and hope that future generations will learn from our mistakes. When it comes to the education of youth, all of us will certainly put more stress on media literacy to make sure future generations don’t fall prey to false content.

The ultimate solution as of now is to take the best from both worlds and combine AI and human team efforts in detecting ‘fake news’. However, even in this scenario, there are some cases in which false information is nearly impossible to detect. This is the case, for instance, with partial misattribution or misinterpretation of minor details hidden in a news piece, or with deep fakes whose incredibly realistic and believable yet false footage supports political or societal claims.

Deep fake example.

The problem of ‘fake news’ evolution is, in fact, so deeply rooted that issues arrive much faster than their solutions. The concern is so serious that anyone who truly wants to stay away from disinformation should probably abstain from using any type of media at all. Can one fully get rid of ‘fake news’? As history has already shown us, it would be pretty hard to get rid of every existing creative and novel kind of disinformation altogether. The point is that, while attempts to decrease the spread of ‘fake news’ should still be encouraged, they come with the risks of censorship and control and do not guarantee 100% accuracy in what is being filtered.

We can, however, do what is up to us. Learning to become critical democratic citizens can be an ecological individual-level solution.

References

Bigo, D. (2008). Globalized (In)Security: The field and the Ban-Opticon. In D. Bigo, & A. Tsoukala (Eds.), Terror, Insecurity and Liberty. Illiberal practices of liberal regimes after 9/11 (pp. 10 - 48). Abingdon: Routledge.

Geiger, R. S. (2016). Bot-based collective blocklists in Twitter: The counterpublic moderation of harassment in a networked public space. Information, Communication & Society, 19(6), 787–803.

Gillespie, T. (2020). Content moderation, AI, and the question of scale. Big Data & Society, July–December: 1–5.

Islam, N., Shaikh, A., Qaiser, A., Asiri, Y., Almakdi, S., Sulaiman, A., Maozzam, V., & Babar, S. A. (2021). Ternion: An Autonomous Model for Fake News Detection. MDPI.

Lee, S. F., & Fung, B. C. M. (2021). Artificial intelligence may not actually be the solution for stopping the spread of fake news. The Conversation, November 28, 2021.